lidarPointCloudGenerator

Generate lidar point cloud data for driving scenario or RoadRunner Scenario using ray tracing

Description

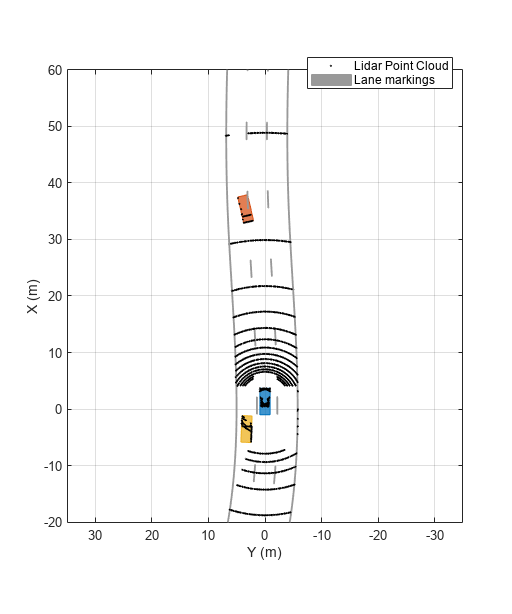

The lidarPointCloudGenerator System object™ generates detections from a lidar sensor mounted on an ego vehicle. All detections are referenced to the coordinate system of the ego vehicle or the vehicle-mounted sensor. You can use the lidarPointCloudGenerator object in a scenario containing actors and trajectories, which you can create by using a drivingScenario object. You can use the addSensors object function of the drivingScenario object to register the lidar as a sensor with the driving scenario. Then, you can call the lidar sensor object without any input arguments during the simulation. For more information, see Syntax Description For No Actor Pose Inputs.

You can also use the lidarPointCloudGenerator object with vehicle actors in RoadRunner Scenario simulation. First you must create a SensorSimulation object to interface sensors with RoadRunner Scenario, and then register the sensor model using the addSensors object function before simulation.

The lidar sensor model created with lidarPointCloudGenerator uses hardware-accelerated ray tracing to produce a realistic and accurate representation of objects in the point cloud. Ray tracing models the interactions between the light rays emitted by the lidar sensor and the surfaces of objects in the scene, and it accounts for factors such as surface texture and angle of incidence. The sensor model can also add random noise to the detections.

To generate lidar point clouds:

1. Create the lidarPointCloudGenerator object and set its properties.
2. Call the object with or without arguments, as if it were a function.

To learn more about how System objects work, see What Are System Objects?

Creation

Description

lidar = lidarPointCloudGenerator creates a lidarPointCloudGenerator object with default property values to generate a point cloud for a lidar sensor.

lidar = lidarPointCloudGenerator(Name,Value) sets properties using one or more name-value pairs. For example, lidarPointCloudGenerator('DetectionCoordinates','Sensor Cartesian','MaxRange',200) creates a lidar point cloud generator that reports detections in the sensor Cartesian coordinate system and has a maximum detection range of 200 meters. Enclose each property name in quotes.
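The creation syntaxes above can be sketched as follows. This is a minimal illustration that assumes Automated Driving Toolbox is installed. Turning off HasNoise is an arbitrary choice for the example, not a recommended setting.

```matlab
% Create a generator and set two properties at construction time.
lidar = lidarPointCloudGenerator('DetectionCoordinates','Sensor Cartesian', ...
    'MaxRange',200);

% Properties can also be set with dot notation before the object is first called.
% Disabling noise here is only an illustrative choice.
lidar.HasNoise = false;

% Read the configured values back.
disp(lidar.DetectionCoordinates)
disp(lidar.MaxRange)
```

Setting properties before the first call matters because some System object properties become read-only once the object has been run, until you call release.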

Properties

Usage

Syntax

Description

No Actor Pose Inputs

[ptCloud,isValidTime] = lidar() generates a lidar point cloud, ptCloud, without any input arguments and returns isValidTime, which indicates whether the point cloud is generated at the current simulation time. Use this syntax after you add the sensors to the driving scenario using the addSensors function. This syntax provides significant performance improvements over syntaxes with input arguments. For more information on performance improvements, see Improved Simulation Performance Using New Syntax Without Actor Pose Inputs.

[ptCloud,isValidTime,clusters] = lidar() additionally returns clusters, which contains the classification data of the generated point cloud.
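A minimal sketch of the no-input workflow follows. It assumes Automated Driving Toolbox in a release that supports registering sensors with addSensors. The road geometry, waypoints, speeds, and variable names are illustrative values chosen for this example.

```matlab
% Build a small scenario: a straight two-lane road, an ego vehicle, and one target.
scenario = drivingScenario;
road(scenario,[0 0 0; 100 0 0],'Lanes',lanespec(2));

egoVehicle = vehicle(scenario,'ClassID',1,'Mesh',driving.scenario.carMesh);
trajectory(egoVehicle,[1 -2 0; 60 -2 0],10);      % illustrative waypoints and speed

targetVehicle = vehicle(scenario,'ClassID',1,'Mesh',driving.scenario.carMesh);
trajectory(targetVehicle,[20 -2 0; 80 -2 0],10);  % illustrative waypoints and speed

% Create the lidar and register it with the scenario on the ego vehicle.
lidar = lidarPointCloudGenerator('SensorIndex',1);
addSensors(scenario,lidar,egoVehicle.ActorID);

% Call the sensor without inputs at each simulation step.
lastCloud = [];
while advance(scenario)
    [ptCloud,isValidTime] = lidar();
    if isValidTime
        lastCloud = ptCloud;  % keep the most recent valid point cloud
    end
end
```

Because the sensor is registered with the scenario, the loop does not need to compute actor poses or a road mesh before each call.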

Manually Input Actor Poses

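[ptCloud,isValidTime] = lidar(actors,rdMesh,simTime) generates a lidar point cloud, ptCloud, from the specified actor poses, actors, and road mesh, rdMesh, at the simulation time simTime. The syntax also returns isValidTime, which indicates whether the point cloud is generated at the specified simulation time.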
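The manual-input syntax can be sketched as below. This is a minimal illustration that assumes Automated Driving Toolbox. The scenario layout, waypoints, and speeds are illustrative, and the actor poses and road mesh are computed in the ego vehicle frame with targetPoses and roadMesh.

```matlab
% Build a small scenario: a straight two-lane road, an ego vehicle, and one target.
scenario = drivingScenario;
road(scenario,[0 0 0; 100 0 0],'Lanes',lanespec(2));

egoVehicle = vehicle(scenario,'ClassID',1,'Mesh',driving.scenario.carMesh);
trajectory(egoVehicle,[1 -2 0; 60 -2 0],10);      % illustrative waypoints and speed

targetVehicle = vehicle(scenario,'ClassID',1,'Mesh',driving.scenario.carMesh);
trajectory(targetVehicle,[20 -2 0; 80 -2 0],10);  % illustrative waypoints and speed

% Create the lidar and give it the physical profiles of the scenario actors.
lidar = lidarPointCloudGenerator;
lidar.ActorProfiles = actorProfiles(scenario);

% At each step, pass the actor poses, road mesh, and simulation time explicitly.
while advance(scenario)
    actors = targetPoses(egoVehicle);   % poses of other actors relative to the ego vehicle
    rdMesh = roadMesh(egoVehicle);      % road surface mesh near the ego vehicle
    [ptCloud,isValidTime] = lidar(actors,rdMesh,scenario.SimulationTime);
end
```

Compared with the no-input syntax, this form leaves the pose and mesh bookkeeping to the caller, which is useful when the poses come from a source other than a registered drivingScenario.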

Input Arguments

Output Arguments

Object Functions

To use an object function, specify the System object as the first input argument. For example, to release system resources of a System object named obj, use this syntax:

release(obj)

Examples

Version History

Introduced in R2020a

See Also

Objects

objectDetection | drivingScenario | laneMarking | lanespec | monoCamera | multiObjectTracker | drivingRadarDataGenerator | visionDetectionGenerator | extendedObjectMesh | insSensor