Generate RoadRunner Scene Using Processed Camera Data and GPS Data

This example shows how to generate a RoadRunner scene containing add or drop lanes using processed camera images containing tracked lane boundaries and GPS waypoints.

You can create a virtual scene from recorded sensor data that represents real-world roads and use it to perform safety assessments for automated driving applications. By combining camera images and GPS data, you can accurately reconstruct a road scene that contains lane information. Camera images enable you to easily identify scene elements, such as lane markings and road boundaries, while GPS waypoints enable you to place these elements in the world coordinate system. This example uses the tracked lane boundary data from camera images and fuses with GPS waypoints to generate scene with accurate lane information.

In this example, you:

Load sensor data and tracked lane boundary data from camera images. This example loads the tracked lane boundaries obtained from the Extract Lane Information from Recorded Camera Data for Scene Generation example.

Create lane boundary group in world frame from the loaded lane boundary data.

Generate a RoadRunner HD Map from the lane boundary group.

The workflow in this example requires tracked lane boundary data and GPS data. Both data must represent the same scenario and they must be time synchronized. For more information on how to obtain tracked lane boundary data from camera images, see the Extract Lane Information from Recorded Camera Data for Scene Generation example.

Load Sensor Data and Lane Boundary Data

This example requires the Scenario Builder for Automated Driving Toolbox™ support package. Check if the support package is installed and, if it is not installed, install it using the Get and Manage Add-Ons.

checkIfScenarioBuilderIsInstalled

Download a ZIP file containing the camera sensor data with camera parameters, and then unzip the file. This data set has been collected using a forward-facing camera mounted on an ego vehicle.

dataFolder = tempdir; dataFilename = "PolysyncSensorData_23a.zip"; url = "https://ssd.mathworks.com/supportfiles/driving/data/"+dataFilename; filePath = fullfile(dataFolder, dataFilename); if ~isfile(filePath) websave(filePath,url); end unzip(filePath, dataFolder); dataset = fullfile(dataFolder,"PolysyncSensorData");

Load the downloaded data set into the workspace using the helperLoadExampleCameraData helper function.

[imds,sensor,gps,gpstimes] = helperLoadExampleCameraData(dataset);

The output arguments of the helperLoadData function contains:

imds— Datastore for images, specified as aimageDatastoreobject.sensor— Camera parameters, specified as amonoCameraobject.gps— GPS coordinates of the ego vehicle, specified as a table.gpstimes — Time of capture, in seconds, for the gps data. Timestamps are synchronized w.r.t gps.

Load the tracked lane boundary data extracted from camera images. You can obtain lane boundary labels using a lane boundary detector and track them using a lane boundary tracker. For more information, see Extract Lane Information from Recorded Camera Data for Scene Generation example.

trackedLaneBoundaries = load("trackedBoundaries.mat");

trackedLaneBoundaries = trackedLaneBoundaries.trackedLaneBoundaries;Visualize the lane boundary tracks on the image sequence by using the helperPlotTracksOnImage helper function.

helperPlotTracksOnImage(sensor,imds,trackedLaneBoundaries);

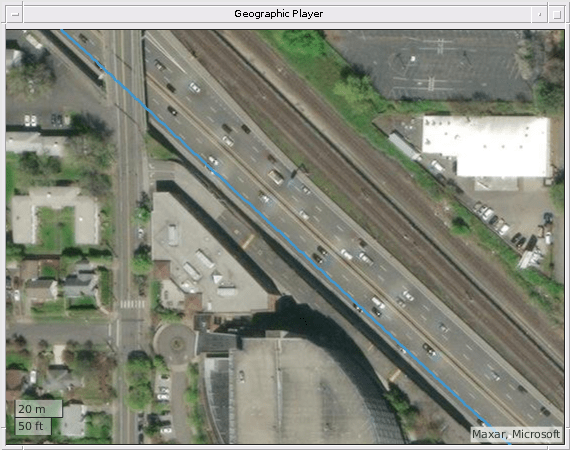

Visualize geographic map data with GPS trajectory of ego vehicle by using the helperPlotGPS function. Note that, in the figure the ego vehicle is moving from bottom-right to the top-left on the trajectory.

helperPlotGPS(gps);

Create Lane Boundary Groups from Loaded Data

Create a waypoint Trajectory from the GPS data.

lat = gps.lat; lon = gps.long; alt = gps.height; geoRef = [lat(1) lon(1) alt(1)]; [x,y,z] = latlon2local(lat,lon,alt,geoRef); waypoints = double([x y z]); egoTraj = waypointTrajectory(waypoints,gpstimes);

Create lane boundary segments from the tracked lane boundary data by using the egoToWorldLaneBoundarySegments function.

lbSegments = egoToWorldLaneBoundarySegments(trackedLaneBoundaries,egoTraj,GeoReference=geoRef)

lbSegments=3×1 laneBoundarySegment array with properties:

BoundaryIDs

BoundaryPoints

BoundaryInfo

NumBoundaries

NumPoints

GeoReference

Plot and view the first lane boundary segment. You can also plot the other segments and check for inaccuracies in the lane boundaries.

plot(lbSegments(1));

Group lane boundary segments by using the laneBoundaryGroup object. Plot the lane boundary points.

lbGroup = laneBoundaryGroup(lbSegments); plot(lbGroup)

Smooth the lane boundaries in the lane boundary group object, by using the smoothBoundaries object function.

% Plot the lane boundary points. plot(lbGroup); xlim([60 80]) ; ylim([-50 -20]); title("Before Smoothing");

% Plot the smoothed lane boundary points. lbGroup = smoothBoundaries(lbGroup,Degree=4,SmoothingFactor=0.2); plot(lbGroup) xlim([60 80]) ; ylim([-50 -20]); title("After Smoothing");

Create RoadRunner HD Map

Create a RoadRunner HD Map from the lane boundary group object by using the getLanesInRoadRunnerHDMap function.

rrMap = getLanesInRoadRunnerHDMap(lbGroup);

write(rrMap,"rrMap.rrhd");Visualize the generated RoadRunner HD Map along with the ego-vehicle waypoints.

plot(rrMap) hold on plot(waypoints(:,1),waypoints(:,2),"bo") legend("Lane Boundaries","Lane Centers","Ego-Vehicle Waypoints")

To open RoadRunner using MATLAB®, specify the path to your RoadRunner project. This code shows the path for a sample project folder location in Windows®.

rrProjectPath = "C:\RR\MyProject";Specify the path to your local RoadRunner installation folder. This code shows the path for the default installation location in Windows.

rrAppPath = "C:\Program Files\RoadRunner R2023a\bin\win64";Open RoadRunner using the specified path to your project.

rrApp = roadrunner(rrProjectPath,InstallationFolder=rrAppPath);

Specify the RoadRunner HD Map import options. Set the enableOverlapGroupsOptions to false to ensure that a junction is built at the two intersecting roads.

overlapGroupsOptions = enableOverlapGroupsOptions(IsEnabled=false); buildOptions = roadrunnerHDMapBuildOptions(EnableOverlapGroupsOptions=overlapGroupsOptions); importOptions = roadrunnerHDMapImportOptions(BuildOptions=buildOptions);

Import the rrMap.rrhd scene into RoadRunner.

importScene(rrApp,fullfile(pwd,"rrMap.rrhd"),"RoadRunner HD Map",importOptions);

Use Lane Marking Tool to mark lane boundaries in the imported road. This figure shows the built road in RoadRunner.

Note: Use of the Scene Builder Tool requires a RoadRunner Scene Builder license.

See Also

Functions

Objects

Topics

- Overview of Scenario Generation from Recorded Sensor Data

- Generate RoadRunner Scene Using Labeled Camera Images and Raw Lidar Data

- Extract Lane Information from Recorded Camera Data for Scene Generation

- Extract Vehicle Track List from Recorded Camera Data for Scenario Generation

- Extract Vehicle Track List from Recorded Lidar Data for Scenario Generation

- Smooth GPS Waypoints for Ego Localization

- Ego Vehicle Localization Using GPS and IMU Fusion for Scenario Generation

- Generate Scenario from Actor Track Data and GPS Data