Extract Vehicle Track List from Recorded Camera Data for Scenario Generation

This example shows how to extract the vehicle track-list information required for generating scenarios from raw camera data.

Track lists are crucial for interpreting the positions and trajectories of the vehicles in a scenario. You can use track lists to extract dynamic actor properties.

In this example, you:

Detect the vehicles from recorded camera data using a pretrained

yolov4ObjectDetectorobject.Apply a global nearest neighbour (GNN) tracker to generate a vehicle track list from the detected vehicles.

Transform the detected vehicle positions from image coordinates to real-world vehicle coordinates using monocular camera sensor parameters.

Store the extracted vehicle track list in

actorTracklistobject for use in the scenario generation workflow.

You can use the extracted vehicle track list to generate a scenario from recorded sensor data. For more information about creating scenarios from vehicle track lists, see the Generate Scenario from Actor Track Data and GPS Data example. For more information on generating scenarios for RoadRunner Scenario, see the Generate RoadRunner Scenario from Recorded Sensor Data example.

Load Camera Data

This example requires the Scenario Builder for Automated Driving Toolbox™ support package. Check if the support package is installed and, if it is not installed, install it using the Get and Manage Add-Ons.

checkIfScenarioBuilderIsInstalled

Download a ZIP file containing a subset of sensor data from the PandaSet data set, and then unzip the file. This data set, which contains camera images and camera sensor parameters has been collected using a forward-facing camera mounted on an ego vehicle.

dataFolder = tempdir; dataFilename = "PandasetSensorData.zip"; url = "https://ssd.mathworks.com/supportfiles/driving/data/" + dataFilename; filePath = fullfile(dataFolder,dataFilename); if ~isfile(filePath) websave(filePath,url) end unzip(filePath,dataFolder) dataset = fullfile(dataFolder,"PandasetSensorData"); data = load(fullfile(dataset,"sensorData.mat")); monocamData = data.CameraData;

monocamData is a table with two columns:

timeStamp— Time, in seconds, at which the image data was captured.fileName— Filenames of the images in the data set.

The images are located in the Camera folder in the dataset directory. Create a table that contains the file paths of these images with their relative timestamps by using the helperUpdateTable function.

imageFolder = "Camera";

monocamData = helperUpdateTable(monocamData,dataset,imageFolder);Display the first five entries of monocamData.

monocamData(1:5,:)

ans=5×2 table

timeStamp filePath

__________ _____________________________________________

1.5576e+09 {["/tmp/PandasetSensorData/Camera/0001.jpg"]}

1.5576e+09 {["/tmp/PandasetSensorData/Camera/0002.jpg"]}

1.5576e+09 {["/tmp/PandasetSensorData/Camera/0003.jpg"]}

1.5576e+09 {["/tmp/PandasetSensorData/Camera/0004.jpg"]}

1.5576e+09 {["/tmp/PandasetSensorData/Camera/0005.jpg"]}

Display the first image from monocamData.

image = imread(monocamData.filePath{1});

imshow(image)

Detect Vehicles

In this example, you use a pretrained deep neural network model to detect the vehicles from behind. Download the ZIP file containing the pretrained model, and then unzip it. The size of the downloaded model is 234 MB.

modelFilename = "CameraVehicleDetectorYOLOv4.zip"; modelUrl = "https://ssd.mathworks.com/supportfiles/driving/data/" + modelFilename; filePath = fullfile(dataFolder,modelFilename); if ~isfile(filePath) websave(filePath,modelUrl) end modelFolder = fullfile(dataFolder,"CameraVehicleDetectorYOLOv4"); unzip(filePath,dataFolder) model = load(fullfile(modelFolder,"cameraVehicleDetectorYOLOv4.mat")); vehicleDetector = model.detector;

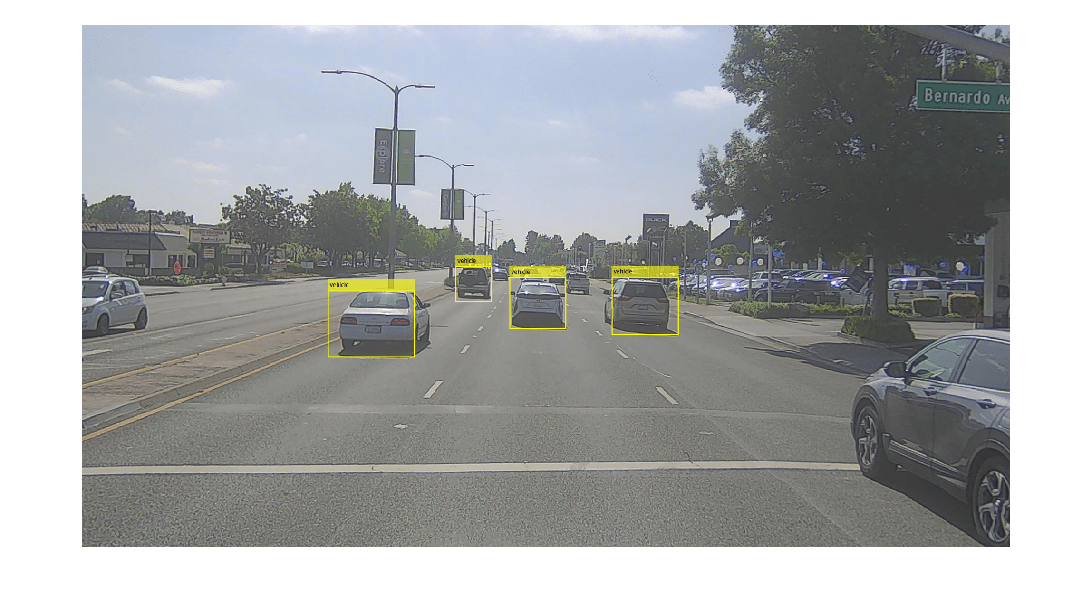

Detect the vehicles in the first image of monocamData.

[bboxes,scores,labels] = detect(vehicleDetector,image,Threshold=0.6);

Overlay the vehicle detections on the image, and display the overlaid image.

detectedImg = insertObjectAnnotation(image,"Rectangle",bboxes,labels,LineWidth=2);

imshow(detectedImg)

To generate a real-world scenario, you must transform the detected vehicle positions from image coordinates to vehicle coordinates using the camera parameters. If you do not know the camera parameters, you can estimate them. For more information about estimating camera parameters, see Calibrate a Monocular Camera. You can also use the estimateMonoCameraFromScene function to estimate approximate camera parameters directly from a camera image.

To create a monoCamera object, you specify the camera intrinsic parameters of focal length (fx, fy), principal point (cx, cy), and image size. Extract the components for focal length and principal point from the camera data.

intrinsics = data.Intrinsics

intrinsics = struct with fields:

fx: 1.9700e+03

fy: 1.9700e+03

cx: 970.0002

cy: 483.2988

Create a cameraIntrinsics object.

focalLength = [intrinsics.fx intrinsics.fy]; principalPoint = [intrinsics.cx intrinsics.cy]; imageSize = [size(image,1) size(image,2)]; intrinsics = cameraIntrinsics(focalLength,principalPoint,imageSize);

Create a monoCamera object using the camera intrinsic parameters and height. Display the object properties.

camHeight = data.CameraHeight; sensorParams = monoCamera(intrinsics,camHeight)

sensorParams =

monoCamera with properties:

Intrinsics: [1×1 cameraIntrinsics]

WorldUnits: 'meters'

Height: 1.6600

Pitch: 0

Yaw: 0

Roll: 0

SensorLocation: [0 0]

Configure the vehicle detector for use with the monocular camera mounted on the ego vehicle by using the configureDetectorMonoCamera function. Specify the objectSize as [1.5 2.5] to limit the width of detected objects to a typical range for vehicle widths.

objectSize = [1.5 2.5]; configuredDetector = configureDetectorMonoCamera(vehicleDetector,sensorParams,objectSize)

configuredDetector =

yolov4ObjectDetectorMonoCamera with properties:

Camera: [1×1 monoCamera]

WorldObjectSize: [1.5000 2.5000]

Network: [1×1 dlnetwork]

AnchorBoxes: {3×1 cell}

ClassNames: {'vehicle'}

InputSize: [608 608 3]

ModelName: 'csp-darknet53-coco'

To specify the position of the rotational center of a vehicle, this example extracts the midpoint of the vehicle rear axle by detecting rear view of that vehicle. Detect the rear views of the vehicles from the camera images.

% Create a placeholder for detections. vehicleDetections = []; % Get backside bounding boxes, scores, and labels. for i = 1:size(monocamData,1) image = imread(monocamData.filePath{i}); [bboxes,scores,labels] = detect(configuredDetector,image,Threshold=0.6); vehicleDetections(i,1).Bboxes = bboxes; vehicleDetections(i,1).Scores = scores; vehicleDetections(i,1).Labels = labels; end % Create detection results table. vehicleDetections = struct2table(vehicleDetections);

vehicleDetections is an M-by-3 table containing the detected bounding boxes for the vehicles, scores, and labels in each image. M is the number of images.

Generate Tracks from Detections

You must assign detections to distinct tracks to determine the entry and exit times of vehicles. If your detections contain noise, you can smooth the noisy detections by using a global nearest neighbor (GNN) tracker. To track vehicle detections in this example, you initialize the GNN tracker using a constant velocity Kalman filter.

Define a GNN tracker using the trackerGNN (Sensor Fusion and Tracking Toolbox) object, by specifying these properties.

ConfirmationThreshold— Specified as[5 5], to confirm the track if the vehicle is detected for at least 5 out of the past 5 detections.DeletionThreshlod— Specified as[5 5], to remove a track if the track is missing from the 5 most recent consecutive detections.AssignmentThreshold— Specified as120, this sets the normalized maximum distance at which to associate a detection to a track.

vehicleTrackerGNN = trackerGNN(FilterInitializationFcn=@helperInitBboxFilter, ... AssignmentThreshold=120, ... DeletionThreshold=[5 5], ... ConfirmationThreshold=[5 5]);

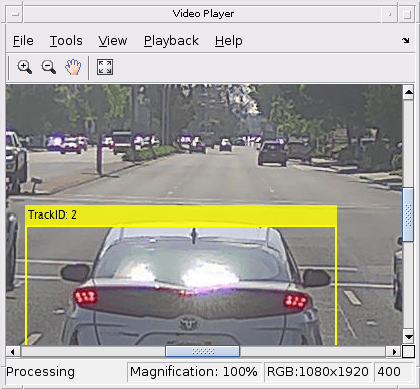

Create a video player object to visualize the tracked vehicles.

videoPlayer = vision.VideoPlayer;

Process vehicle detections for each frame, and assign tracks if a detection meets the assignment criteria. Extract the rear-axle information of each tracked vehicle for scenario generation.

% Initialize empty actorTracklist object to store the generated track list data. tracklist = actorTracklist; % Get the list of all backside bounding boxes for each frame from the detector output. backsideBboxes = vehicleDetections.Bboxes; % Initialize the current step for tracker. currentStep = 0; % Get the total number of frames. numFrames = size(monocamData,1); for i = 1:numFrames % Get the timestamp. timestamp = monocamData.timeStamp(i); % Update step counter. currentStep = currentStep + 1; % Read the current frame. frame = imread(monocamData.filePath{i}); % Get the backside bounding boxes for the current frame. bboxes = backsideBboxes{i}; % Pack detections as measurements for the tracker. measurements = helperPackAsObjectDetections(bboxes,currentStep); % Return the updated tracks for the current time step. confirmedTracks = vehicleTrackerGNN(measurements,currentStep); % Remove invalid tracks. imageSize = sensorParams.Intrinsics.ImageSize; confirmedTracks = helperRemoveInvalidTracks(confirmedTracks,imageSize); % Extract the backside bounding boxes from the confirmed tracks. vehicleBboxes = helperExtractBboxes(confirmedTracks); % Estimate the position of vehicles in the ego frame from the backside % bounding boxes, and create the track list. % Set the length of the vehicle in meters to offset the estimated x position to the % vehicle center. vehicleLength = 4.7; if ~isempty(vehicleBboxes) for j = 1:size(confirmedTracks,1) % Get the trackid of confirmed tracks. trackID = string(confirmedTracks(j).TrackID); bbox = vehicleBboxes(j, :); % Convert to vehicle coordinates using monoCamera object. xyPos = imageToVehicle(sensorParams,[bbox(1)+bbox(3)/2,bbox(2)+bbox(4)]); % Offset the x position of the vehicle to the vehicle center. xyPos(:,1) = xyPos(:,1)+vehicleLength/2; vehiclePosition = {[xyPos,0]}; % Set the class id to 1 for 'vehicle' class as the detector % can detect only cars. classID = 1; % Add the data to the actorTracklist object. tracklist.addData(timestamp,trackID,classID,vehiclePosition); end end % Insert tracking annotations. annotatedFrame = helperInsertTrackAnnotations(frame,confirmedTracks); % Display the annotated frame. videoPlayer(annotatedFrame) pause(0.1) end

Display properties of tracklist.

tracklist

tracklist =

actorTracklist with properties:

TimeStamp: [396×1 double]

TrackIDs: {396×1 cell}

ClassIDs: {396×1 cell}

Position: {396×1 cell}

Dimension: []

Orientation: []

Velocity: []

Speed: []

StartTime: 1.5576e+09

EndTime: 1.5576e+09

NumSamples: 396

UniqueTrackIDs: [24×1 string]

You can use the actorprops function to extract actor properties from the vehicle track lists for generating a driving scenario. For more information, see the Generate Scenario from Actor Track Data and GPS Data example. For more information on how to generate scenarios for RoadRunner Scenario from vehicle track lists, see the Generate RoadRunner Scenario from Recorded Sensor Data example.

Helper Functions

helperInitBboxFilter — Initialize 4-D constant velocity Kalman filter.

function filter = helperInitBboxFilter(Detection) % Step 1: Define the motion model and state. % Use a constant velocity model for a bounding box on the image. % The state is [x; vx; y; vy; w; wv; h; hv] % The state transition matrix is: % [1 dt 0 0 0 0 0 0; % 0 1 0 0 0 0 0 0; % 0 0 1 dt 0 0 0 0; % 0 0 0 1 0 0 0 0; % 0 0 0 0 1 dt 0 0; % 0 0 0 0 0 1 0 0; % 0 0 0 0 0 0 1 dt; % 0 0 0 0 0 0 0 1] % Assume dt = 1. This implementation does not consider a time-variant % transition model for a linear Kalman filter. dt = 1; cvel =[1 dt; 0 1]; A = blkdiag(cvel,cvel,cvel,cvel); % Step 2: Define the process noise. % The process noise represents the parts of the process that the model % does not take into account. For example, in a constant velocity model, % the acceleration is ignored. G1d = [dt^2/2; dt]; Q1d = G1d*G1d'; Q = blkdiag(Q1d,Q1d,Q1d,Q1d); % Step 3: Define the measurement model. % Only the position ([x; y; w; h]) is measured. % The measurement model is H = [1 0 0 0 0 0 0 0; ... 0 0 1 0 0 0 0 0; ... 0 0 0 0 1 0 0 0; ... 0 0 0 0 0 0 1 0]; % Step 4: Map the sensor measurements to an initial state vector. % Because there is no measurement of the velocity, the v components are % initialized to 0: state = [Detection.Measurement(1); ... 0; ... Detection.Measurement(2); ... 0; ... Detection.Measurement(3); ... 0; ... Detection.Measurement(4); ... 0]; % Step 5: Map the sensor measurement noise to a state covariance. % For the parts of the state that the sensor measured directly, use the % corresponding measurement noise components. For the parts that the % sensor does not measure, assume a large initial state covariance. That way, % future detections can be assigned to the track. L = 50; % Large value stateCov = diag([Detection.MeasurementNoise(1,1), ... L, ... Detection.MeasurementNoise(2,2), ... L, ... Detection.MeasurementNoise(3,3), ... L, ... Detection.MeasurementNoise(4,4), ... L]); % Step 6: Create the correct filter. filter = trackingKF(... StateTransitionModel=A, ... MeasurementModel=H, ... State=state, ... StateCovariance=stateCov, ... MeasurementNoise=Detection.MeasurementNoise, ... ProcessNoise=Q); end

References

[1] Hesai and Scale. PandaSet. Accessed September 18, 2025. https://pandaset.org/. The PandaSet data set is provided under the CC-BY-4.0 license.

See Also

Functions

Topics

- Overview of Scenario Generation from Recorded Sensor Data

- Smooth GPS Waypoints for Ego Localization

- Ego Vehicle Localization Using GPS and IMU Fusion for Scenario Generation

- Extract Lane Information from Recorded Camera Data for Scene Generation

- Generate High Definition Scene from Lane Detections and OpenStreetMap

- Extract Vehicle Track List from Recorded Lidar Data for Scenario Generation

- Generate Scenario from Actor Track Data and GPS Data