Extract Vehicle Track List from Recorded Lidar Data for Scenario Generation

This example shows how to extract the vehicle track-list information required for generating a scenarios from raw lidar data.

Track lists are crucial for interpreting the positions and trajectories of the vehicles in a scenario. You can use track lists to extract dynamic actor properties.

In this example, you:

Detect the vehicles from recorded lidar data using a pretrained

pointPillarsObjectDetector(Lidar Toolbox) object.Generate a vehicle track list from the detected vehicles using a joint probabilistic data association (JPDA) tracker.

Format the vehicle track list for use in the scenario generation workflow.

You can use the extracted vehicle track list to generate a scenario from recorded sensor data. For more information about creating scenarios from vehicle track lists, see the Generate Scenario from Actor Track Data and GPS Data example. For more information on generating scenarios for RoadRunner Scenario, see the Generate RoadRunner Scenario from Recorded Sensor Data example.

Load Lidar Data

This example requires the Scenario Builder for Automated Driving Toolbox™ support package. Check if the support package is installed and, if it is not installed, install it using the Get and Manage Add-Ons.

checkIfScenarioBuilderIsInstalled

Download a ZIP file containing a subset of sensor data from the PandaSet data set and then unzip the file. This data set contains five sequences of lidar and camera data along with their sensor information. The size of the downloaded data set is 1.29 GB.

tmpFolder = tempdir; dataFileName = "PandasetData090to094.zip"; url = "https://ssd.mathworks.com/supportfiles/driving/data/" + dataFileName; filePath = fullfile(tmpFolder,dataFileName); if ~isfile(filePath) websave(filePath,url) end dataFolder = fullfile(tmpFolder,"PandasetData090to094"); mkdir(dataFolder) unzip(filePath,dataFolder)

Get the lidar point cloud data, lidar timestamps, camera images, and camera timestamps by using the helperLoadDataForTracklist helper function. This function returns a table containing data for all the sequences in the dataFolder.

sensorData = helperLoadDataForTracklist(dataFolder);

sensorData is a table with these columns:

lidarTimestamps- Time, in seconds, at which the lidar point cloud data was collected.ptCldPath- Path of the lidar point cloud data in PCD format.lidarPosition - Position of the lidar sensor in world coordinates. Units are in meters.

lidarHeading- Heading of the lidar sensor specified as a quaternion, in world coordinates.frontCameraImagesPath- Path of the front camera images.cameraTimestamps- Time, in seconds, at which the camera data was collected.GPS- Coordinates of the ego vehicle, specified as a structure. The structure contains these fields: latitude, longitude, and altitude.

Display the first five entries of sensorData.

sensorData(1:5,:)

ans=5×7 table

lidarTimestamps ptCldPath lidarPosition lidarHeading frontCameraImagesPath cameraTimestamps GPS

_______________ _________________________________________________________________ _____________ ____________ _______________________________________________________________________________ ________________ __________

1.5576e+09 "/tmp/PandasetData090to094/PandasetData090to094/090/lidar/00.pcd" 1×1 struct 1×1 struct "/tmp/PandasetData090to094/PandasetData090to094/090/camera/front_camera/00.jpg" 1.5576e+09 1×1 struct

1.5576e+09 "/tmp/PandasetData090to094/PandasetData090to094/090/lidar/01.pcd" 1×1 struct 1×1 struct "/tmp/PandasetData090to094/PandasetData090to094/090/camera/front_camera/01.jpg" 1.5576e+09 1×1 struct

1.5576e+09 "/tmp/PandasetData090to094/PandasetData090to094/090/lidar/02.pcd" 1×1 struct 1×1 struct "/tmp/PandasetData090to094/PandasetData090to094/090/camera/front_camera/02.jpg" 1.5576e+09 1×1 struct

1.5576e+09 "/tmp/PandasetData090to094/PandasetData090to094/090/lidar/03.pcd" 1×1 struct 1×1 struct "/tmp/PandasetData090to094/PandasetData090to094/090/camera/front_camera/03.jpg" 1.5576e+09 1×1 struct

1.5576e+09 "/tmp/PandasetData090to094/PandasetData090to094/090/lidar/04.pcd" 1×1 struct 1×1 struct "/tmp/PandasetData090to094/PandasetData090to094/090/camera/front_camera/04.jpg" 1.5576e+09 1×1 struct

Create a combine object containing the lidar point clouds, lidar poses, lidar timestamps, camera images and camera timestamps by using the helperCreateCombinedDatastore helper function. The helper function also creates the lidar poses as a rigidtform3d object required to transform the point clouds from the lidar sensor coordinates to world coordinates by using the lidarPosition and lidarHeading information in sensorData.

combinedDs = helperCreateCombinedDatastore(sensorData);

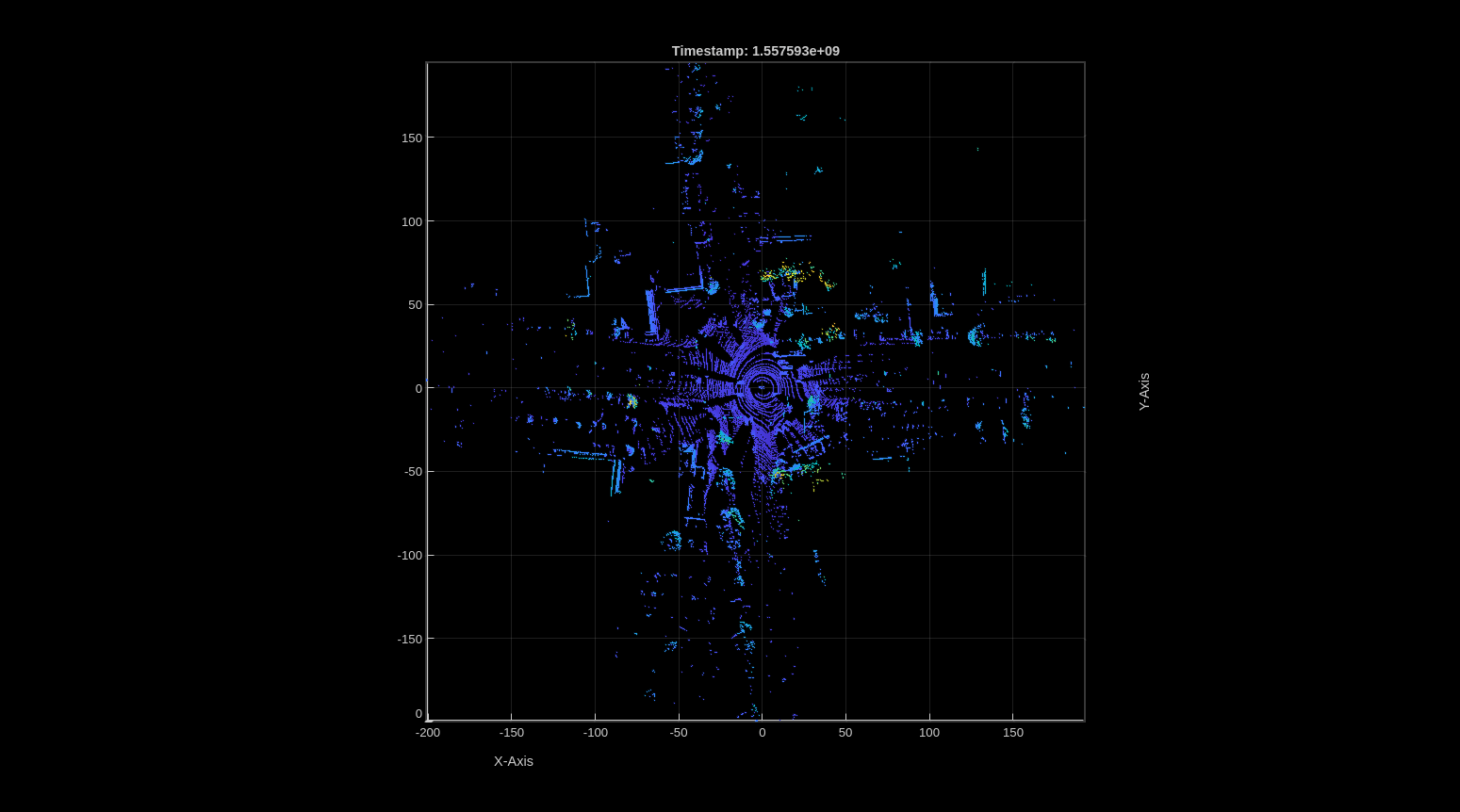

Read and display the first point cloud in the dataset.

cdsData = read(combinedDs);

ptCld = cdsData{1};

ax = pcshow(ptCld);

view(ax,2)

title(sprintf("Timestamp: %d",cdsData{3}))

xlabel("X-Axis")

ylabel("Y-Axis")

Display the image corresponding to the point cloud ptCld.

I = cdsData{4};

imshow(I)

Detect Vehicles from Lidar Data

In this example, you use a pretrained pointPillarsObjectDetector (Lidar Toolbox) deep neural network model to detect vehicles from lidar data. Download a ZIP file containing the pretrained model, and then unzip it. The size of the downloaded model is 9 MB.

tmpFolder = tempdir; modelFilename = "LidarVehicleDetectorPointPillars.zip"; modelUrl = "https://ssd.mathworks.com/supportfiles/driving/data/" + modelFilename; filePath = fullfile(dataFolder,modelFilename); if ~isfile(filePath) websave(filePath,modelUrl) end unzip(filePath,dataFolder) modelFolder = fullfile(dataFolder,"LidarVehicleDetectorPointPillars"); model = load(fullfile(modelFolder,"lidarVehicleDetectorPointPillars.mat")); lidarVehicleDetector = model.detector;

The downloaded pretrained pointPillarsObjectDetector (Lidar Toolbox) model requires the point cloud data in sensor coordinate system used in Automated Driving Toolbox™. For more information, see Coordinate Systems in Automated Driving Toolbox.

While, the lidar point clouds in the dataset are in world coordinate system, which you must transform into the lidar sensor frame of PandaSet using the lidar pose information. Then transform the point clouds from the lidar sensor frame to the sensor coordinate system in Automated Driving toolbox.

According to the sensor coordinate system in Automated Driving Toolbox, the positive x-axis must point forward from the ego vehicle, and the positive y-axis must point to the left of the ego vehicle for each point cloud. However, for the PandaSet data set, in the lidar sensor frame, the ego vehicle faces toward the positive y-axis and the left of the ego vehicle is along the negative x-axis. Thus, you must apply a rotation of –90 degrees around the positive z-axis.

% Create a 3-D rigid geometric transformation object to apply a rotation of % -90 degrees along positive z-axis rotationInLidarFrame = [0 0 -90]; translationInLidarFrame=[0 0 0]; tformRotationInLidarFrame = rigidtform3d(rotationInLidarFrame,translationInLidarFrame); % Transform the point clouds from the world coordinate system to the sensor coordinate system in the Automated Driving Toolbox. reset(combinedDs) ptCldInADT = pointCloud.empty(0,height(sensorData)); for i=1:height(sensorData) cdsData = read(combinedDs); ptCld = cdsData{1}; tformSensorToWorld = cdsData{2}; % Transform the point clouds from world coordinates to the lidar sensor frame in PandaSet dataset. ptCld = pctransform(ptCld,invert(tformSensorToWorld)); % Transform the point clouds from the lidar sensor frame in PandaSet dataset to the sensor coordinate system in the Automated % Driving Toolbox. ptCldInADT(i) = pctransform(ptCld,tformRotationInLidarFrame); end ptCldInADT = ptCldInADT';

Display the first point cloud.

ax = pcshow(ptCldInADT(1)); view(ax,2) title(sprintf("Timestamp: %d",cdsData{3})) xlabel("X-Axis") ylabel("Y-Axis")

Specify these parameters for the lidar vehicle detection model.

Threshold — Confidence score threshold for boundary detection. The model ignores detections with a confidence score less than the threshold value. If you observe false detections, try increasing this value.

xLimits — Range of coordinates along the X-axis.

yLimits — Range of coordinates along the Y-axis.

params.threshold = 0.4; params.xLimits = [0 60]; params.yLimits = [-12 12];

Detect vehicles for each point cloud in ptCldInADT by using the helperDetectVehicles helper function. This helper function uses the downloaded pretrained pointPillarsObjectDetector (Lidar Toolbox) deep neural network model lidarVehicleDetector to detect vehicles from lidar data. Note that, depending on your hardware configuration, this function can take a significant amount of time to run.

vehicleDetections = helperDetectVehicles(lidarVehicleDetector,ptCldInADT,params);

Starting parallel pool (parpool) using the 'Processes' profile ... Connected to parallel pool with 6 workers.

vehicleDetections is an M-by-3 table containing the detected vehicle bounding boxes for each point cloud, their detection scores, and their labels. M is the total number of point clouds.

Select a point cloud, and extract the detected vehicle bounding boxes for the corresponding point cloud.

idx = 1;

ptCloud = ptCldInADT(idx);

bboxes = vehicleDetections.Bboxes{idx};Overlay the vehicle detections on the point cloud, and display the overlaid point cloud.

posViewCrop = find(ptCloud.Location(:,:,1) < params.xLimits(2) ... & ptCloud.Location(:,:,1) > params.xLimits(1) ... & ptCloud.Location(:,:,2) < params.yLimits(2) ... & ptCloud.Location(:,:,2) > params.yLimits(1)); ptCloud = select(ptCloud,posViewCrop,OutputSize="full"); ax = pcshow(ptCloud.Location); showShape(cuboid=bboxes,Parent=ax,Opacity=0.1,Color="green",LineWidth=1)

Generate Tracks from Detections

You must assign detections to distinct tracks to determine the entry and exit times of vehicles. If your detections contain noise, you can smooth the noisy detections using a JPDA tracker. To track vehicle detections in this example, you initialize the JPDA tracker using an interacting multiple model (IMM) filter with constant velocity and constant turn motion models.

Define a JPDA tracker using a trackerJPDA (Sensor Fusion and Tracking Toolbox) System object™ with these properties:

ConfirmationThreshold— Specified as[4 7], to confirm the track if the lane is detected for at least 4 out of the past 7 detections.DeletionThreshlod— Specified as[3 3], to remove a track if the track is missing from the 3 most recent consecutive detections.AssignmentThreshold— Specified as[50 200], this sets the normalized maximum distance to associate a detection to a track.

vehicleTracker = trackerJPDA(FilterInitializationFcn=@helperMultiClassInitIMMFilter,... TrackLogic="History", ... AssignmentThreshold=[50 200], ... ClutterDensity=1e-12, ... ConfirmationThreshold=[4 7], ... DeletionThreshold=[3 3], ... InitializationThreshold=0.1, ... HitMissThreshold=0.2);

Specify the measurement noise measNoise. The measurement noise describes the uncertainty in the measurements.

measNoise = blkdiag(0.25*eye(3),25,eye(3));

Create an object to display the tracks overlaid on the point clouds, and the respective camera frames, by using the helperLidarVisualizer class.

displayObj = helperLidarVisualizer; initializeDisplay(displayObj)

Process the vehicle detections for each frame, and assign tracks if the detection meets the assignment criteria.

% Initialize empty actorTracklist object to store the track data. tracklist = actorTracklist; % Get the list of all bounding boxes for each frame from the detector output. Bboxes = vehicleDetections.Bboxes; reset(combinedDs) for i = 1:height(sensorData) % Get the current frame from ptCldInADT. ptCloud = ptCldInADT(i); % Get the bounding boxes for current frame. bboxes = Bboxes{i}; % Get the current timestamp and front view camera image from the combinedDs. cdsData = read(combinedDs); curTimeStamp = cdsData{3}; frontViewImage = cdsData{4}; % Convert the bounding boxes to object detections. measurements = helperAssembleDetections(bboxes,measNoise,curTimeStamp); % Return the updated tracks for the current timestamp. [confirmedTracks] = vehicleTracker(measurements,curTimeStamp); % Extract the bounding boxes and TrackID from the confirmed tracks. vehicleBboxes = helperExtractBboxes(confirmedTracks); if ~isempty(vehicleBboxes) for j = 1:numel(confirmedTracks) % Get the trackid of confirmed tracks. trackid = string(confirmedTracks(j).TrackID); % Set the class id to 1 for 'vehicle' class. classid = 1; % Get the position of vehicle. vehiclePosition = {[vehicleBboxes(j,1:3)]}; % Add the data to the actorTracklist object. tracklist.addData(curTimeStamp, trackid, classid, vehiclePosition); end end % Update the display with the current point cloud. ptCloudUnOrg = removeInvalidPoints(ptCloud); updatePointCloud(displayObj,ptCloudUnOrg) % Update the display with the current front-view image. updateImage(displayObj,frontViewImage) % Update the display, if the tracks are not empty. if ~isempty(confirmedTracks) updateTracks(displayObj,confirmedTracks) end drawnow("limitrate") end

Display properties of tracklist.

tracklist

tracklist =

actorTracklist with properties:

TimeStamp: [397×1 double]

TrackIDs: {397×1 cell}

ClassIDs: {397×1 cell}

Position: {397×1 cell}

Dimension: []

Orientation: []

Velocity: []

Speed: []

StartTime: 1.5576e+09

EndTime: 1.5576e+09

NumSamples: 397

UniqueTrackIDs: [47×1 string]

You can use the actorprops function to extract actor properties from tracklist, for use in generating a driving scenario. For more information on how to generate a scenario from tracklist, see the Generate Scenario from Actor Track Data and GPS Data example. For information on how to generate a scenario for RoadRunner Scenario, see the Generate RoadRunner Scenario from Recorded Sensor Data example.

Helper Functions

helperCreateCombinedDatastore— Create a combine object from sensor data, containing the lidar, lidar poses, lidar timestamps, camera images, and camera timestamps.

function combinedDs = helperCreateCombinedDatastore(sensorData) % Create a datastore to store the lidar data. pcFds = fileDatastore(sensorData.ptCldPath,ReadFcn=@pcread); % Create an arrayDatastore to store the 3-D rigid transformations. lidarPoses = rigidtform3d.empty; for i = 1:size(sensorData,1) rot = quat2rotm(quaternion([sensorData.lidarHeading(i).w,sensorData.lidarHeading(i).x,sensorData.lidarHeading(i).y,sensorData.lidarHeading(i).z])); tran = [sensorData.lidarPosition(i).x,sensorData.lidarPosition(i).y,sensorData.lidarPosition(i).z]; pose = rigidtform3d(rot,tran); lidarPoses(i,1) = pose; end posesAds = arrayDatastore(lidarPoses); % Create an arrayDatastore to store the lidar timestamps. lidarTimestampsAds = arrayDatastore(sensorData.lidarTimestamps); % Create an imageDatastore to store the front camera images. imds = imageDatastore(sensorData.frontCameraImagesPath); % Create an arrayDatastore to store the camera timestamps. cameraTimestampsAds = arrayDatastore(sensorData.cameraTimestamps); % Combine all the datastores into a combinedDatastore. combinedDs = combine(pcFds,posesAds,lidarTimestampsAds,imds,cameraTimestampsAds); end

helperExtractBboxes — Extract the vehicle bounding boxes from the tracks.

function bboxes = helperExtractBboxes(tracks) positionSelector = [1 0 0 0 0 0 0 0 0 0 0; 0 0 1 0 0 0 0 0 0 0 0; 0 0 0 0 0 1 0 0 0 0 0]; yawSelector = [0 0 0 0 0 0 0 1 0 0 0]; dimensionSelector = [0 0 0 0 0 0 0 0 0 1 0; 0 0 0 0 0 0 0 0 1 0 0; 0 0 0 0 0 0 0 0 0 0 1]; % Retrieve bounding boxes from the tracks. positions = getTrackPositions(tracks,positionSelector); yaws = getTrackPositions(tracks,yawSelector); dimensions = getTrackPositions(tracks,dimensionSelector); bboxes = [positions dimensions zeros(size(positions,1),2) yaws]; end

helperAssembleDetections — Convert the detected vehicle bounding boxes to object detections, for use in the tracker.

function mydetections = helperAssembleDetections(bboxes,measNoise,timestamp) % Assemble bounding boxes as a cell array of objectDetection objects. mydetections = cell(size(bboxes,1),1); for i = 1:size(bboxes,1) % This example assumes that all vehicles are cars. classid = 1; lidarModel = [bboxes(i,1:3) bboxes(i,end) bboxes(i,4:6)]; mydetections{i} = objectDetection(timestamp, ... lidarModel',MeasurementNoise=measNoise, ... ObjectAttributes=struct("ClassID",classid)); end end

References

[1] Hesai and Scale. PandaSet. Accessed September 18, 2025. https://pandaset.org/. The PandaSet data set is provided under the CC-BY-4.0 license.

See Also

Functions

Objects

pointPillarsObjectDetector(Lidar Toolbox) |trackerJPDA(Sensor Fusion and Tracking Toolbox)

Topics

- Overview of Scenario Generation from Recorded Sensor Data

- Generate Scenario from Actor Track Data and GPS Data

- Generate High Definition Scene from Lane Detections and OpenStreetMap

- Extract Lane Information from Recorded Camera Data for Scene Generation

- Generate RoadRunner Scene from Recorded Lidar Data

- Smooth GPS Waypoints for Ego Localization

- Ego Vehicle Localization Using GPS and IMU Fusion for Scenario Generation