vSLAM

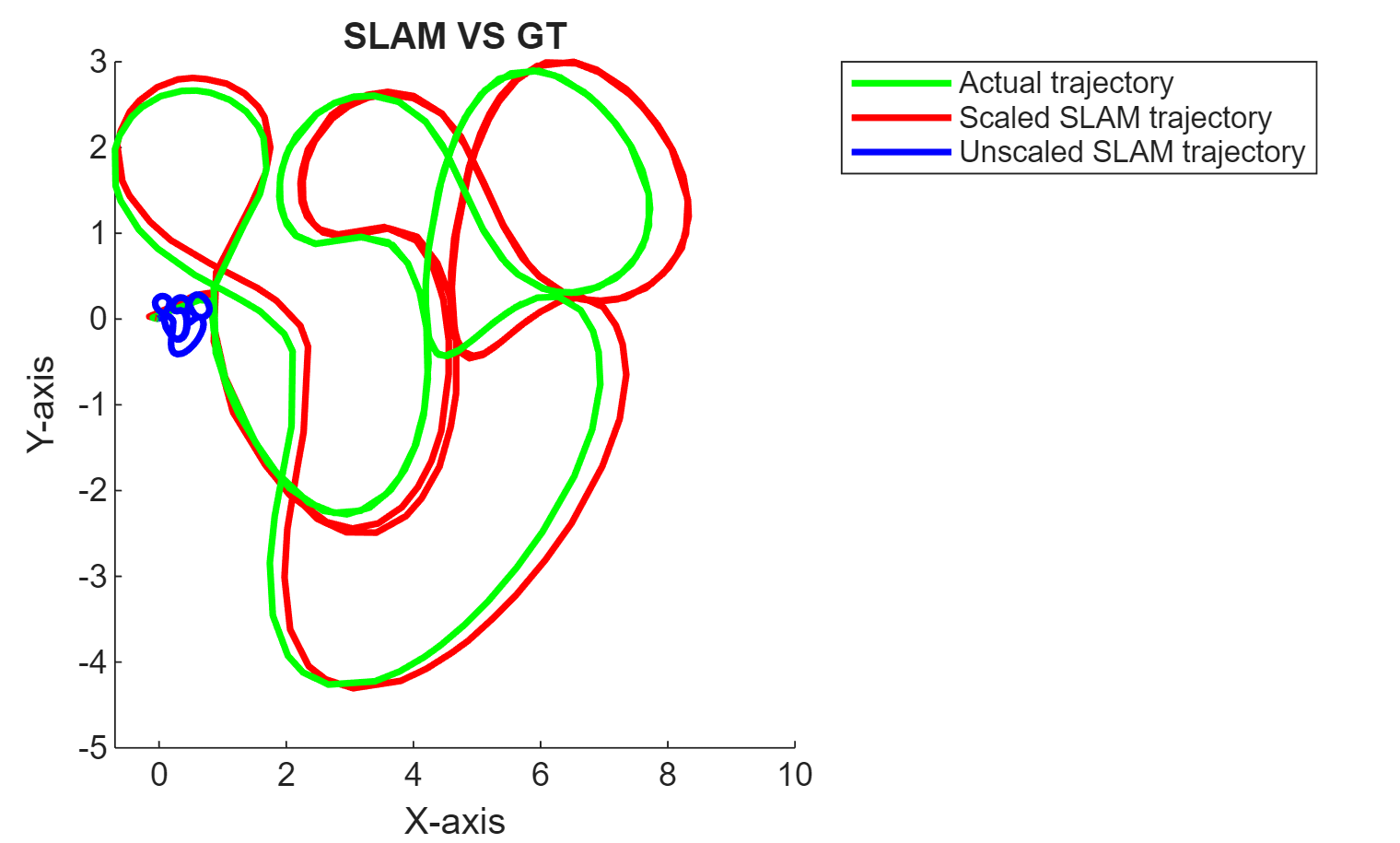

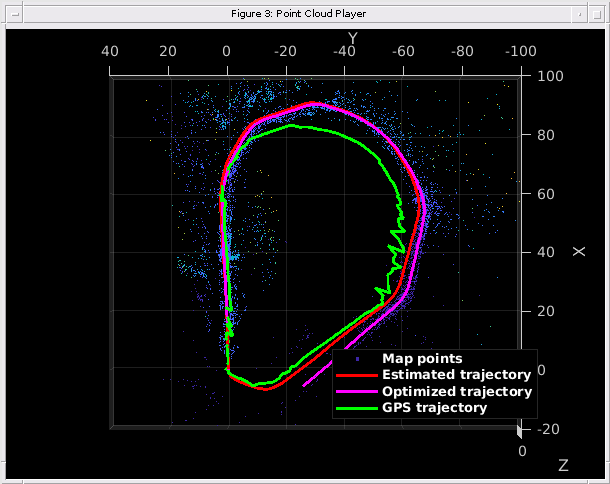

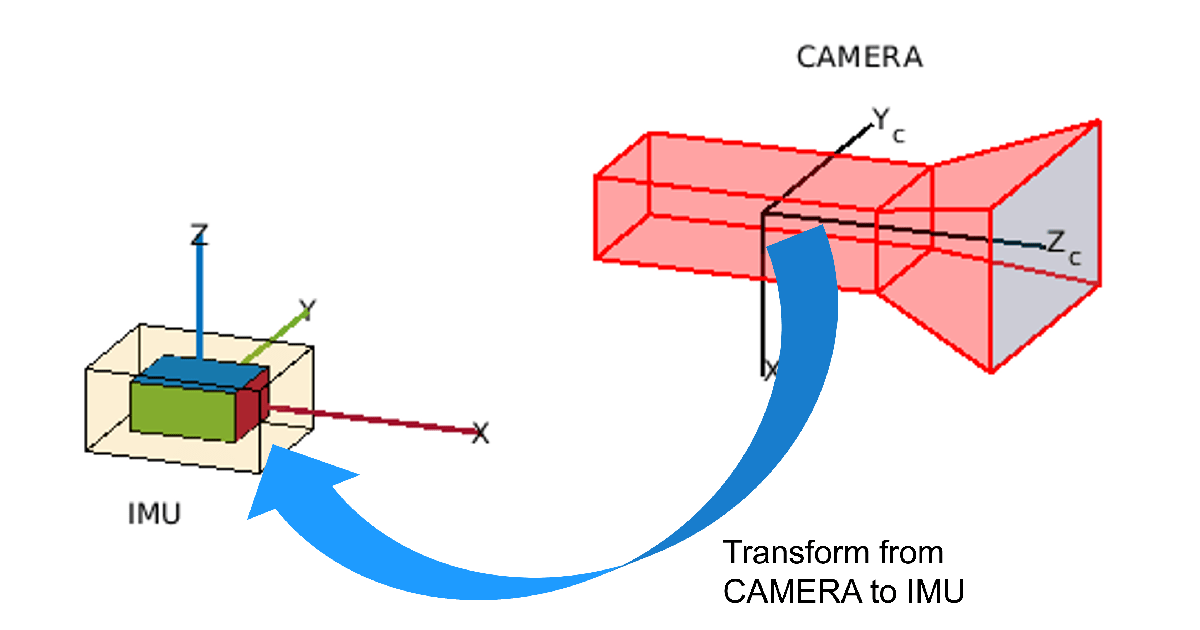

Visual simultaneous localization and mapping (vSLAM) refers to the process of calculating the position and orientation of a camera with respect to its surroundings while simultaneously mapping the environment. The process uses only visual inputs from the camera. Applications for visual SLAM include augmented reality, robotics, and autonomous driving. Visual-inertial SLAM (viSLAM) is the process of fusing visual inputs from a camera with motion data (acceleration and angular velocity) from an inertial measurement unit (IMU) to improve the SLAM results. For more details, see Implement Visual SLAM in MATLAB.
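As a rough illustration of the process described above, the following sketch runs monocular vSLAM over a folder of images. It assumes Computer Vision Toolbox R2023b or later, where the `monovslam` object is available. The folder path and the camera intrinsics values are hypothetical placeholders, not values from this page; substitute your own image sequence and calibration.

```matlab
% Minimal monocular vSLAM sketch (assumes Computer Vision Toolbox R2023b+).
% The folder path and intrinsics below are hypothetical placeholders.
imageFolder    = "path/to/image/sequence";  % assumed folder of ordered frames
focalLength    = [535.4 539.2];             % [fx fy] in pixels (assumed)
principalPoint = [320.1 247.6];             % [cx cy] in pixels (assumed)
imageSize      = [480 640];                 % [rows cols] in pixels (assumed)

imds       = imageDatastore(imageFolder);
intrinsics = cameraIntrinsics(focalLength, principalPoint, imageSize);

% Create the vSLAM object; it estimates camera poses and builds the map.
vslam = monovslam(intrinsics);

% Feed frames one at a time and refresh the plot when a key frame is added.
for i = 1:numel(imds.Files)
    I = readimage(imds, i);
    addFrame(vslam, I);
    if hasNewKeyFrame(vslam)
        plot(vslam);
    end
end

% Wait until all queued frames have been processed.
while ~isDone(vslam)
    if hasNewKeyFrame(vslam)
        plot(vslam);
    end
end

% Retrieve the results: 3-D map points and key frame camera poses.
xyzPoints = mapPoints(vslam);
camPoses  = poses(vslam);
```

Here `camPoses` holds the estimated camera positions and orientations (localization) and `xyzPoints` holds the reconstructed 3-D points (mapping). Because this sketch uses a single camera with no IMU, the map and trajectory are recovered only up to an unknown scale.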

Functions

Topics

- Implement Visual SLAM in MATLAB

Understand the visual simultaneous localization and mapping (vSLAM) workflow and how to implement it using MATLAB.

- Choose SLAM Workflow Based on Sensor Data

Choose the right simultaneous localization and mapping (SLAM) workflow and find topics, examples, and supported features.

- Develop Visual SLAM Algorithm Using Unreal Engine Simulation (Automated Driving Toolbox)

Develop a visual simultaneous localization and mapping (SLAM) algorithm using image data from the Unreal Engine® simulation environment.