Custom Training Using Automatic Differentiation

Train deep learning networks using custom training loops

If the trainingOptions function does not provide the training options that you need for your task, or you have a loss function that the trainnet function does not support, then you can define a custom training loop. For models that cannot be specified as networks of layers, you can define the model as a function. To learn more, see Define Custom Training Loops, Loss Functions, and Networks.
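As a rough illustration of what such a loop can look like, the following sketch trains a small dlnetwork on synthetic data using dlfeval, dlgradient, and adamupdate. The data, layer sizes, learning rate, iteration count, and the helper name modelLoss are assumptions chosen for illustration and do not come from the linked topics.

```matlab
% Minimal custom training loop sketch (illustrative assumptions throughout).
rng(0)

% Synthetic two-class data: 2 features, 200 observations (assumed).
numObservations = 200;
XTrain = randn(2,numObservations,"single");
labels = categorical(double(XTrain(1,:) + XTrain(2,:) > 0),[0 1]);
TTrain = single(onehotencode(labels,1));   % 2-by-200 one-hot targets

% Small fully connected network (layer sizes are arbitrary).
layers = [
    featureInputLayer(2)
    fullyConnectedLayer(16)
    reluLayer
    fullyConnectedLayer(2)
    softmaxLayer];
net = dlnetwork(layers);

% Format the data as channel-by-batch dlarray objects.
X = dlarray(XTrain,"CB");
T = dlarray(TTrain,"CB");

% Adam solver state and hyperparameters (assumed values).
averageGrad = [];
averageSqGrad = [];
learnRate = 0.01;
numIterations = 100;

for iteration = 1:numIterations
    % Evaluate the loss and gradients with automatic differentiation.
    [loss,gradients] = dlfeval(@modelLoss,net,X,T);

    % Update the learnable parameters using the Adam solver.
    [net,averageGrad,averageSqGrad] = adamupdate(net,gradients, ...
        averageGrad,averageSqGrad,iteration,learnRate);
end

% Model loss function: forward pass, cross-entropy loss, and gradients.
function [loss,gradients] = modelLoss(net,X,T)
    Y = forward(net,X);
    loss = crossentropy(Y,T);
    gradients = dlgradient(loss,net.Learnables);
end
```

For simplicity, this sketch passes the full data set through the network on every iteration. A practical loop would typically iterate over mini-batches, for example with a minibatchqueue object, and monitor the loss as training proceeds.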

Topics

Custom Training Loops

- Train Deep Learning Model in MATLAB

Learn how to train deep learning models in MATLAB®.

- Define Custom Training Loops, Loss Functions, and Networks

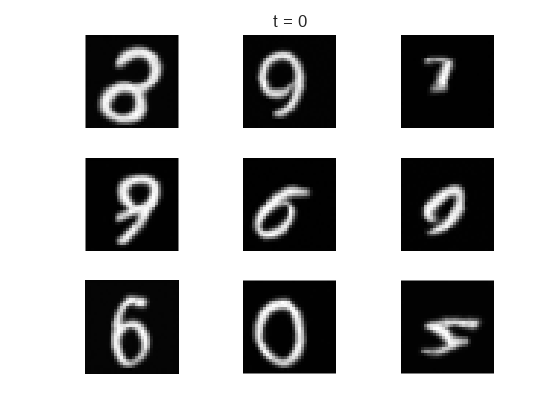

Learn how to define and customize deep learning training loops, loss functions, and models.

- Train Network Using Custom Training Loop

This example shows how to train a network that classifies handwritten digits with a custom learning rate schedule.

- Train Sequence Classification Network Using Custom Training Loop

This example shows how to train a network that classifies sequences with a custom learning rate schedule.

- Specify Training Options in Custom Training Loop

Learn how to specify common training options in a custom training loop.

- Define Model Loss Function for Custom Training Loop

Learn how to define a model loss function for a custom training loop.

- Update Batch Normalization Statistics in Custom Training Loop

This example shows how to update the network state in a custom training loop.

- Make Predictions Using dlnetwork Object

This example shows how to make predictions using a dlnetwork object by looping over mini-batches.

- Monitor Custom Training Loop Progress

Track and plot custom training loop progress.

- Compare Custom Solvers Using Custom Training Loop

This example shows how to train a deep learning network with different custom solvers and compare their accuracies.

- Multiple-Input and Multiple-Output Networks

Learn how to define and train deep learning networks with multiple inputs or multiple outputs.

- Train Network with Multiple Outputs

This example shows how to train a deep learning network with multiple outputs that predict both labels and angles of rotations of handwritten digits.

- Train Network in Parallel with Custom Training Loop

This example shows how to set up a custom training loop to train a network in parallel.

- Run Custom Training Loops on a GPU and in Parallel

Speed up custom training loops by running on a GPU, in parallel using multiple GPUs, or on a cluster.

- Detect Issues During Deep Neural Network Training

This example shows how to automatically detect issues while training a deep neural network.

- Speed Up Deep Neural Network Training

Learn how to accelerate deep neural network training.

Automatic Differentiation

- Automatic Differentiation Background

Learn how automatic differentiation works.

- Deep Learning Data Formats

Learn about deep learning data formats.

- List of Functions with dlarray Support

View the list of functions that support dlarray objects.

- Use Automatic Differentiation In Deep Learning Toolbox

How to use automatic differentiation in deep learning.

Generative Adversarial Networks

- Train Generative Adversarial Network (GAN)

This example shows how to train a generative adversarial network to generate images.

- Train Conditional Generative Adversarial Network (CGAN)

This example shows how to train a conditional generative adversarial network to generate images.

- Train Wasserstein GAN with Gradient Penalty (WGAN-GP)

This example shows how to train a Wasserstein generative adversarial network with a gradient penalty (WGAN-GP) to generate images.

Graph Neural Networks

- Multivariate Time Series Anomaly Detection Using Graph Neural Network

This example shows how to detect anomalies in multivariate time series data using a graph neural network (GNN).

- Node Classification Using Graph Convolutional Network

This example shows how to classify nodes in a graph using a graph convolutional network (GCN).

- Multilabel Graph Classification Using Graph Attention Networks

This example shows how to classify graphs that have multiple independent labels using graph attention networks (GATs).

Deep Learning Function Acceleration

- Deep Learning Function Acceleration for Custom Training Loops

Accelerate model functions and model loss functions for custom training loops by caching and reusing traces.

- Accelerate Custom Training Loop Functions

This example shows how to accelerate deep learning custom training loop and prediction functions.

- Check Accelerated Deep Learning Function Outputs

This example shows how to check that the outputs of accelerated functions match the outputs of the underlying function.

- Evaluate Performance of Accelerated Deep Learning Function

This example shows how to evaluate the performance gains of using an accelerated function.