Build Deep Neural Networks

Create new deep networks for tasks such as classification, regression, and forecasting by defining the network architecture from scratch. Build networks programmatically in MATLAB or interactively using the Deep Network Designer app.
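The sketch below shows one way to define an architecture from scratch at the command line. It assumes a feature classification task with 4 input features and 3 classes, and uses a hidden layer of 16 units. These sizes are illustrative and not taken from any particular data set. The code stacks built-in layers into a layer array and assembles them into a `dlnetwork` object, which initializes the learnable parameters.

```matlab
% Illustrative sizes (assumptions, not from a real data set)
numFeatures = 4;
numClasses = 3;

% Layer array: each row is one built-in layer, connected in order
layers = [
    featureInputLayer(numFeatures)
    fullyConnectedLayer(16)
    reluLayer
    fullyConnectedLayer(numClasses)
    softmaxLayer];

% Assemble the layers into a network and initialize its learnable parameters
net = dlnetwork(layers);

% Forward pass on a random mini-batch of 8 observations ("CB" = channel, batch)
X = dlarray(rand(numFeatures, 8, 'single'), "CB");
Y = predict(net, X);   % Y is numClasses-by-8; each column is a softmax output
```

To train the network, you would pass it to a training function such as `trainnet` (introduced in R2023b) together with your data and a loss such as `"crossentropy"`.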

For most tasks, you can use built-in layers. For a list of built-in layers, see List of Deep Learning Layers. If no built-in layer provides the operation your task requires, you can define your own custom layer, including layers with learnable parameters and state parameters. After you define a custom layer, you can check that it is valid, that it is GPU compatible, and that it outputs correctly defined gradients.

For models that cannot be specified as networks of layers, you can define the model as a function. To learn more, see Define Custom Training Loops, Loss Functions, and Networks.
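The sketch below shows what defining a model as a function can look like. It assumes a one-layer regression model with 4 input features and 1 response. The sizes, the learning rate, and the function names `model` and `modelLoss` are all hypothetical. The learnable parameters live in a struct of `dlarray` objects, and the forward pass is an ordinary function. `dlfeval` and `dlgradient` compute the gradients for a single gradient-descent step.

```matlab
% Hypothetical sizes, for illustration only
rng(0);
numFeatures = 4;
numResponses = 1;

% Learnable parameters stored in a struct of dlarray objects
parameters.W = dlarray(0.1 * randn(numResponses, numFeatures));
parameters.b = dlarray(zeros(numResponses, 1));

% Random mini-batch of 16 observations in "CB" (channel, batch) format
X = dlarray(rand(numFeatures, 16), "CB");
T = dlarray(rand(numResponses, 16), "CB");

% dlfeval evaluates modelLoss with automatic differentiation enabled
[loss, gradients] = dlfeval(@modelLoss, parameters, X, T);

% One plain gradient-descent update (learning rate is an assumption)
learnRate = 0.01;
parameters.W = parameters.W - learnRate * gradients.W;
parameters.b = parameters.b - learnRate * gradients.b;

function Y = model(parameters, X)
    % Forward pass defined as a function: a single fully connected operation
    Y = fullyconnect(X, parameters.W, parameters.b);
end

function [loss, gradients] = modelLoss(parameters, X, T)
    % Loss and gradients with respect to every field of the parameters struct
    Y = model(parameters, X);
    loss = mse(Y, T);
    gradients = dlgradient(loss, parameters);
end
```

Because `dlgradient` accepts a struct, the gradients come back with the same field names as `parameters`. This keeps the update step one line per parameter.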

Apps

| App | Description |
| --- | --- |
| Deep Network Designer | Design and visualize deep learning networks |

Topics

Built-In Layers

- Train Network with Numeric Features

This example shows how to create and train a simple neural network that classifies numeric feature data.

- Sequence Classification Using Deep Learning

This example shows how to classify sequence data using a long short-term memory (LSTM) network.

- Sequence-to-Sequence Classification Using Deep Learning

This example shows how to classify each time step of sequence data using a long short-term memory (LSTM) network.

- Sequence-to-Sequence Regression Using Deep Learning

This example shows how to predict the remaining useful life (RUL) of engines by using deep learning.

- Sequence-to-One Regression Using Deep Learning

This example shows how to predict the frequency of a waveform using a long short-term memory (LSTM) neural network.

- Long Short-Term Memory Neural Networks

Learn about long short-term memory (LSTM) neural networks.

- Example Deep Learning Networks Architectures

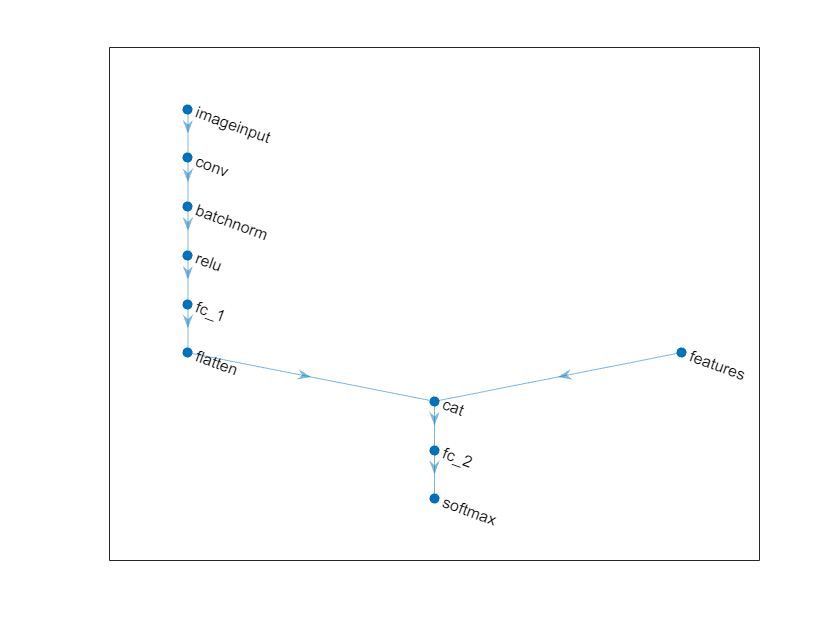

This example shows how to define simple deep learning neural networks for classification and regression tasks.

- Multiple-Input and Multiple-Output Networks

Learn how to define and train deep learning networks with multiple inputs or multiple outputs.

- List of Deep Learning Layers

Discover all the deep learning layers in MATLAB.

- Build Networks with Deep Network Designer

Interactively build and edit deep learning networks in Deep Network Designer.

- Deep Learning in MATLAB

Discover deep learning capabilities in MATLAB using convolutional neural networks for classification and regression, including pretrained networks and transfer learning, and training on GPUs, CPUs, clusters, and clouds.

- Deep Learning Tips and Tricks

Learn how to improve the accuracy of deep learning networks.

- Data Sets for Deep Learning

Discover data sets for various deep learning tasks.

Custom Layers

- Define Custom Deep Learning Layers

Learn how to define custom deep learning layers.

- Check Custom Layer Validity

Learn how to check the validity of custom deep learning layers.