Get Started with Instance Segmentation Using Deep Learning

Instance segmentation is a computer vision technique that enables you to identify each instance of an object category as a unique entity and delineate its boundaries at the pixel level. For a given image, instance segmentation not only recognizes and classifies objects, but also provides a precise pixel-wise mask for each individual object. This enables you to differentiate between multiple objects of the same class, such as distinguishing two cars parked side by side.

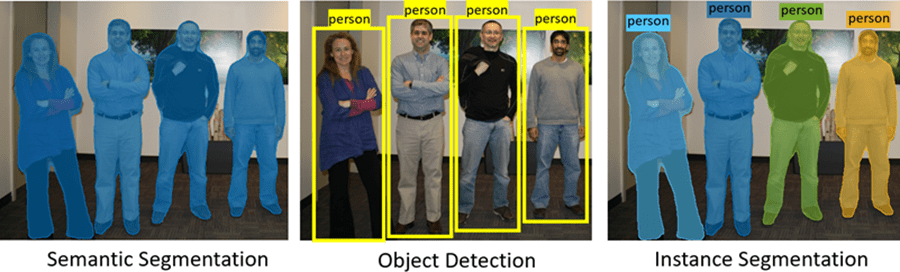

Use instance segmentation to implement the strengths of object detection and semantic segmentation while overcoming some of their limitations:

Object detection combines image classification and object localization. While object detection is effective at recognizing objects, it does not provide a detailed, pixel-level understanding, making it challenging to distinguish objects in close proximity to each other.

Semantic segmentation assigns a class label to each pixel in an image, effectively dividing the image into segments based on the semantic meaning of the objects. Although semantic segmentation provides pixel-level classification, it does not differentiate between instances of the same class, particularly when objects are touching or overlapping.

Instance segmentation predicts pixel-by-pixel segmentation masks of each object, treating individual objects as distinct entities, regardless of the class of the objects. It overcomes the limitations of object detection and semantic segmentation by offering precise object delineation and individual instance identification. However, it can be more computationally demanding than object detection or semantic segmentation, leading to longer inference and training times.

Applications for instance segmentation include autonomous driving, medical imaging, industrial inspection (such as agriculture, retail, and manufacturing), scientific analysis (such as the classification of terrain visible in satellite imagery or structures visible under a microscope), and robotics.

Computer Vision Toolbox™ provides these instance segmentation techniques for segmenting objects in an image:

SOLOv2 — Segmenting Objects by Locations version 2 (SOLOv2) is a single-stage instance segmentation model that directly predicts instance center and size, making it suitable for real-time applications.

Mask R-CNN — Mask R-CNN is a two-stage instance segmentation model that can predict instance masks and achieve good performance across various applications.

You can perform inference on a test image with default network options using a pretrained instance segmentation model, or train an instance segmentation network on a custom data set.

Additionally, the Pose Mask R-CNN model extends the capabilities of Mask R-CNN by combining instance segmentation with 6-DoF (six degrees-of-freedom) pose estimation. This technique is useful for tasks that require detailed pose information within the segmented instances, such as robotics bin-picking tasks. To learn more, see the Perform 6-DoF Pose Estimation for Bin Picking Using Deep Learning example.

Perform Inference Using a Pretrained Instance Segmentation Network

Follow these steps to perform instance segmentation on a test image using a pretrained instance segmentation model.

1. Download a pretrained model. The pretrained models

are Computer Vision Toolbox support packages that you can download and install using either the

visionSupportPackages function or the

Add-On Explorer. For more information about installing add-ons, see Get

and Manage Add-Ons.

The table describes the pretrained models for instance segmentation, lists their corresponding support packages, and indicates which Computer Vision Toolbox instance segmentation model to use with them. Because SOLOv2 predicts instance center and size directly, allowing for a single-stage end-to-end training process, you can use it to achieve faster inference times compared to Mask R-CNN.

| Instance Segmentation Model | Available Pretrained Models | Get Started Topic |

|---|---|---|

solov2 (Computer Vision

Toolbox Model for SOLOv2 Instance

Segmentation) |

| Get Started with SOLOv2 for Instance Segmentation |

maskrcnn (Computer Vision

Toolbox Model for Mask R-CNN Instance

Segmentation) | resnet50-coco — A pretrained Mask R-CNN deep

learning network, trained on the COCO data set, with a ResNet-50

network as the feature extractor. | Getting Started with Mask R-CNN for Instance Segmentation |

2. After you download a pretrained instance

segmentation model, configure the pretrained network using the associated object, and

load the test image into the workspace. For example, configure a pretrained SOLOv2

network using solov2

object.

model = solov2("resnet50-coco"); I = imread("kobi.png");

3. Segment objects in a test image using the

segmentObjects object function. The function returns the segmentation

masks and the confidence scores and labels associated with

them.

[masks,labels,scores] = segmentObjects(model,I);

3. Visualize the instance segmentation results using

the insertObjectMask function.

maskedImage = insertObjectMask(I,masks); imshow(maskedImage)

Train an Instance Segmentation Network

To perform transfer learning on a custom data set, you must prepare a labeled ground truth data set with annotations for each object instance, configure an instance segmentation network, and train the network.

1. Create the ground truth data

for training. To create the ground truth, define and annotate each object

instance within the training images. The format of your ground truth datastore must

match the trainingData input argument format of the training

function you use for training.

You can use the Image Labeler

app to interactively label images with polygon ROI labels. To learn more drawing polygon

ROI labels for instance segmentation networks, see Label Objects Using Polygons. The Image Labeler

app returns the ground truth labeled data as a groundTruth object. For more information, see Get Started with the Image Labeler. Convert polygon

coordinates to binary masks using the poly2mask function.

2.

Choose an instance segmentation model based on the requirements of

your use case. The two models available through the Computer Vision Toolbox support packages are SOLOv2 (solov2) and

Mask R-CNN (maskrcnn).

This table displays the training capabilities and data requirements of SOLOv2 and Mask R-CNN, including the training process, efficiency, annotation requirements, model complexity, computational cost, and training data size. While SOLOv2 is generally faster and more straightforward to train, and provides greater accuracy with a lower memory footprint than Mask R-CNN, which model fits your use case depends on your task and data set requirements [1].

| Training Aspect | SOLOv2 | Mask R-CNN |

|---|---|---|

| Training Efficiency | Generally faster training process | Longer training time due to two stages |

| Model Complexity | Simpler model architecture | More complex architecture |

| Ground Truth Data Annotation | Requires instance center and size | Requires instance masks for annotation |

| Training Data Size | Typically requires smaller training data | May require larger training data |

| Computational Cost | Generally lower computational cost | Higher computational cost |

3. Train the pretrained network using the corresponding training function.

To complete the training steps, use the network training and inference functionality for your selected model in the table below.

| Configure Network Architecture | Train Network | Perform Inference | Training Example |

|---|---|---|---|

solov2 | trainSOLOV2 | segmentObjects | Perform Instance Segmentation Using SOLOv2 |

maskrcnn | trainMaskRCNN | segmentObjects | Perform Instance Segmentation Using Mask R-CNN |

Evaluate Instance Segmentation Results

Evaluate the quality of your instance segmentation results against the ground truth by

using the evaluateInstanceSegmentation function. Ensure that your ground truth

datastore is set up so that using the read function on the datastore returns a cell array with at least two

elements in the format {masks labels}.

To calculate the prediction metrics, specify a datastore of the masks, labels, and

scores from your instance segmentation and your ground truth datastore as input to the

evaluateInstanceSegmentation function. The function calculates metrics

such as the confusion matrix and average precision, and stores the metrics in an instanceSegmentationMetrics object.

References

[1] Wang, Xinlong, Rufeng Zhang, Tao Kong, Lei Li, and Chunhua Shen. “SOLOv2: Dynamic and Fast Instance Segmentation.” ArXiv, October 23, 2020. https://doi.org/10.48550/arXiv.2003.10152.

See Also

Apps

Functions

solov2|trainSOLOV2|segmentObjects|evaluateInstanceSegmentation|maskrcnn|trainMaskRCNN|evaluateInstanceSegmentation|instanceSegmentationMetrics|posemaskrcnn