Tracking and Motion Estimation

Motion estimation and tracking are key activities in many computer vision applications, including activity recognition, traffic monitoring, automotive safety, and surveillance.

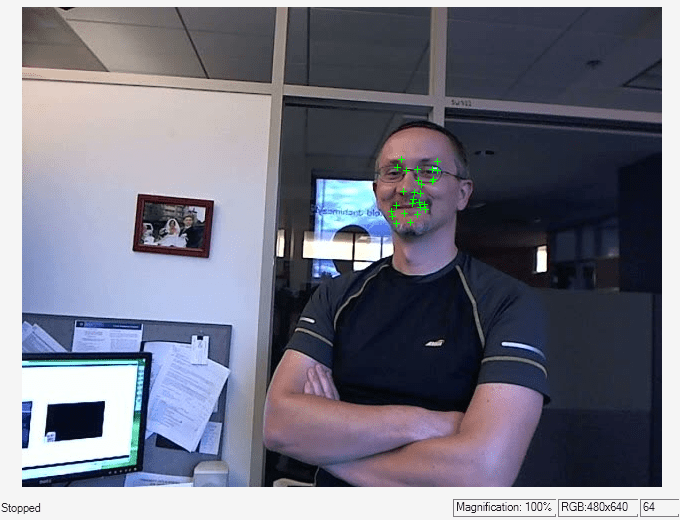

Computer Vision Toolbox™ provides video tracking algorithms, such as continuously adaptive mean shift (CAMShift) and Kanade-Lucas-Tomasi (KLT). You can use these algorithms to track a single object or as building blocks in a more complex tracking system. The toolbox also provides a framework for multiple object tracking that includes a Kalman filter for predicting each object's location and the Hungarian algorithm for assigning object detections to tracks.
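To make the assignment step concrete, here is a minimal pure-Python sketch of the Hungarian (Kuhn-Munkres) algorithm used to match predicted track positions to new detections. It is not the toolbox's implementation. The helper names `hungarian` and `assign_detections_to_tracks`, the Euclidean-distance cost, and the sample coordinates are illustrative assumptions. The sketch also assumes there are at least as many detections as tracks and omits the gating or cost of non-assignment that a practical tracker would use to leave poor matches unassigned.

```python
import math


def hungarian(cost):
    """Minimum-cost assignment via the Hungarian (Kuhn-Munkres) algorithm.

    cost is an n x m matrix (list of lists) with n <= m.
    Returns a list where entry i is the column assigned to row i.
    """
    n, m = len(cost), len(cost[0])
    if n > m:
        raise ValueError("need at least as many columns as rows; transpose the matrix")
    inf = float("inf")
    u = [0.0] * (n + 1)   # row potentials (1-based)
    v = [0.0] * (m + 1)   # column potentials (1-based)
    p = [0] * (m + 1)     # p[j] = row matched to column j; p[0] holds the row being added
    way = [0] * (m + 1)   # back-pointers along the current augmenting path
    for i in range(1, n + 1):
        p[0] = i
        j0 = 0
        minv = [inf] * (m + 1)
        used = [False] * (m + 1)
        while True:
            used[j0] = True
            i0, delta, j1 = p[j0], inf, 0
            for j in range(1, m + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]   # reduced cost
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            # Shift potentials so at least one new zero reduced-cost edge appears.
            for j in range(m + 1):
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:   # reached a free column: augmenting path found
                break
        # Flip the matching along the augmenting path.
        while True:
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
            if j0 == 0:
                break
    assignment = [-1] * n
    for j in range(1, m + 1):
        if p[j]:
            assignment[p[j] - 1] = j - 1
    return assignment


def assign_detections_to_tracks(predicted, detections):
    """Hypothetical helper: cost = Euclidean distance from each predicted track to each detection."""
    cost = [[math.dist(t, d) for d in detections] for t in predicted]
    return hungarian(cost)


tracks = [(10.0, 10.0), (50.0, 50.0)]                    # predicted track positions (x, y)
detections = [(51.0, 49.0), (11.0, 9.0), (90.0, 5.0)]    # detections in the new frame
matches = assign_detections_to_tracks(tracks, detections)
print(matches)  # [1, 0]: track 0 -> detection 1, track 1 -> detection 0
```

Detection 2 is left unmatched here; a tracker built along these lines could treat it as a candidate new track.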

Motion estimation is the process of determining the movement of blocks between adjacent video frames. The toolbox includes motion estimation algorithms such as optical flow, block matching, and template matching. These algorithms produce motion vectors, which can describe the motion of the whole image, of blocks, of arbitrary patches, or of individual pixels. For block and template matching, the evaluation metrics for finding the best match include mean square error (MSE), mean absolute difference (MAD), maximum absolute difference (MaxAD), sum of absolute differences (SAD), and sum of squared differences (SSD).
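Block matching and its match metrics can be illustrated with a small exhaustive-search sketch in pure Python. Frames are plain lists of lists of grayscale values. The function names (`block_metrics`, `get_block`, `match_block`, `frame_with_square`), the search radius, and the synthetic frames are hypothetical, and this is not the toolbox's block matcher. In this sketch MAD is taken as the mean of the absolute pixel differences.

```python
def block_metrics(a, b):
    """Compute the five match metrics for two equal-size blocks a and b."""
    diffs = [pa - pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb)]
    n = len(diffs)
    sad = sum(abs(d) for d in diffs)          # sum of absolute differences
    ssd = sum(d * d for d in diffs)           # sum of squared differences
    return {
        "SAD": sad,
        "SSD": ssd,
        "MAD": sad / n,                       # mean absolute difference
        "MSE": ssd / n,                       # mean square error
        "MaxAD": max(abs(d) for d in diffs),  # maximum absolute difference
    }


def get_block(img, top, left, size):
    """Extract a size x size block whose top-left corner is (top, left)."""
    return [row[left:left + size] for row in img[top:top + size]]


def match_block(prev, curr, top, left, size, search, metric="SAD"):
    """Exhaustively search curr for the block at (top, left) in prev.

    Returns the motion vector (dy, dx) minimizing the chosen metric over all
    displacements within +/- search pixels, plus the best score.
    """
    ref = get_block(prev, top, left, size)
    h, w = len(curr), len(curr[0])
    best, best_vec = None, (0, 0)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            y, x = top + dy, left + dx
            if y < 0 or x < 0 or y + size > h or x + size > w:
                continue  # candidate block would fall outside the frame
            score = block_metrics(ref, get_block(curr, y, x, size))[metric]
            if best is None or score < best:
                best, best_vec = score, (dy, dx)
    return best_vec, best


def frame_with_square(h, w, top, left, size=2, value=9):
    """Synthetic frame: dark background with one bright square."""
    return [[value if top <= r < top + size and left <= c < left + size else 0
             for c in range(w)] for r in range(h)]


prev = frame_with_square(8, 8, top=2, left=2)
curr = frame_with_square(8, 8, top=3, left=4)
vec, score = match_block(prev, curr, top=2, left=2, size=2, search=3)
print(vec, score)  # (1, 2) 0
```

The zero SAD at (1, 2) means the square moved one row down and two columns right. Exhaustive search evaluates every displacement in the window, so its cost grows with the square of the search radius; faster search patterns trade some accuracy for speed.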

Topics

- Multiple Object Tracking

  Locate a moving object or multiple objects over time in a video stream.