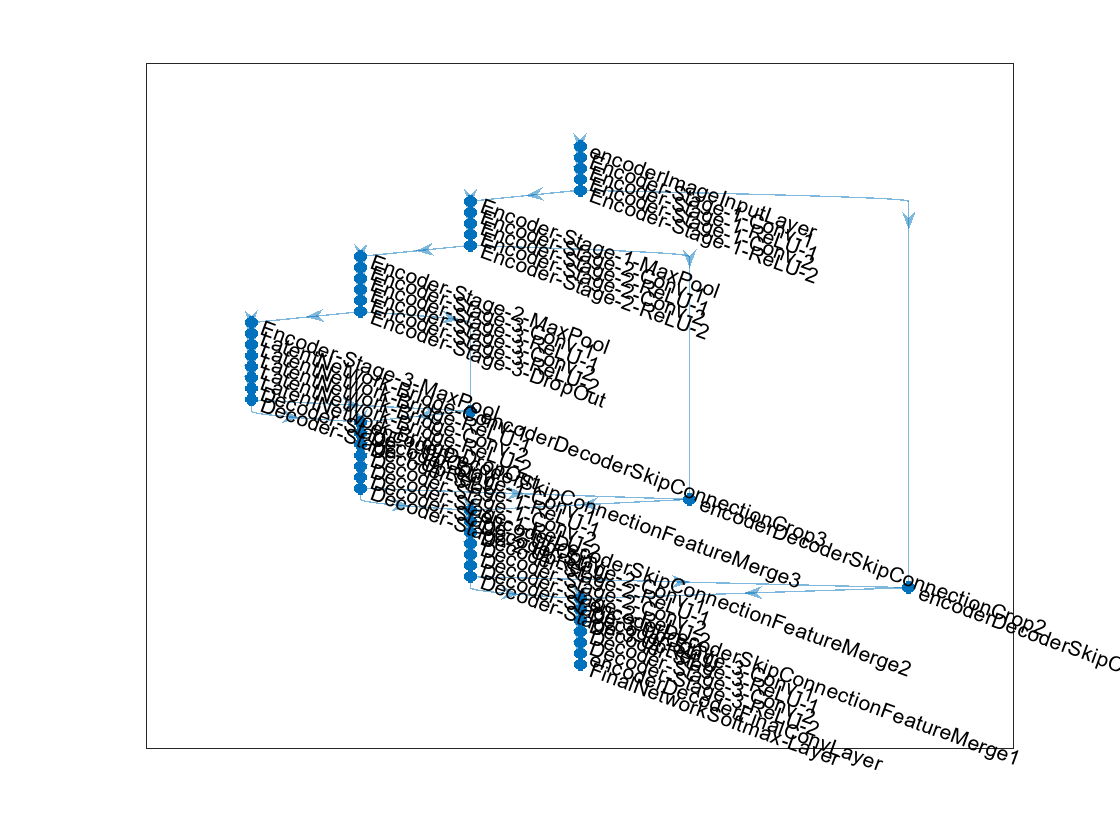

unet

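Syntax

unetNetwork = unet(imageSize,numClasses)
[unetNetwork,outputSize] = unet(imageSize,numClasses)
___ = unet(imageSize,numClasses,Name=Value)

Description

unetNetwork = unet(imageSize,numClasses)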

Use unet to create the U-Net network architecture. You must train the network using the Deep Learning Toolbox™ function trainnet (Deep Learning Toolbox).

[unetNetwork,outputSize] = unet(imageSize,numClasses) also returns the size of the output from the U-Net network.

___ = unet(imageSize,numClasses,Name=Value) specifies options using one or more name-value arguments. For example, unet(imageSize,numClasses,NumFirstEncoderFilters=64) specifies the number of output channels as 64 for the first encoder stage.
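As a rough illustration of the three syntaxes, the following sketch creates U-Net networks for a small two-class problem. The image size, the number of classes, and the variable names are assumptions chosen for illustration and do not come from this page. The code assumes that Computer Vision Toolbox and Deep Learning Toolbox are installed.

```matlab
% Illustrative values (assumptions): 128-by-128 RGB input, two classes.
imageSize = [128 128 3];
numClasses = 2;

% Basic syntax: create an untrained U-Net network.
net = unet(imageSize,numClasses);

% Second syntax: also return the size of the network output.
[net2,outputSize] = unet(imageSize,numClasses);

% Name-value syntax: 64 output channels in the first encoder stage.
net64 = unet(imageSize,numClasses,NumFirstEncoderFilters=64);

disp(class(net))     % type of the returned network object
disp(outputSize)     % size of the network output
```

The returned networks are untrained, so they still need to be trained with trainnet before use.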

Examples

Input Arguments

Name-Value Arguments

Output Arguments

More About

Tips

Use 'same' padding in convolution layers to maintain the same data size from input to output and enable the use of a broad set of input image sizes.

Use patch-based approaches for seamless segmentation of large images. You can extract image patches by using the randomPatchExtractionDatastore function.

Use 'valid' padding to prevent border artifacts while you use patch-based approaches for segmentation.

You can use the network created using the unet function for GPU code generation after training with trainnet (Deep Learning Toolbox). For details and examples, see Generate Code and Deploy Deep Neural Networks (Deep Learning Toolbox).
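The effect of the two padding styles on the output size can be checked with a short sketch like the one below. It assumes the ConvolutionPadding name-value argument of unet, which is not shown on this page, and uses illustrative image sizes: 512-by-512 for 'same' padding and 572-by-572 (the input size used in the original U-Net paper) for 'valid' padding. With the default network settings assumed here, not every image size is accepted for a given padding style, so these sizes were picked to satisfy the size constraints of each style.

```matlab
% Illustrative values (assumptions): grayscale input, two classes.
numClasses = 2;

% 'same' padding: the spatial output size matches the input size.
[~,sizeSame] = unet([512 512 1],numClasses,ConvolutionPadding="same");

% 'valid' padding: no zero padding, so each convolution trims the borders
% and the output is spatially smaller than the input.
[~,sizeValid] = unet([572 572 1],numClasses,ConvolutionPadding="valid");

disp(sizeSame)
disp(sizeValid)
```

Because 'valid' padding shrinks the output, a patch-based workflow typically needs overlapping input patches so that the predicted regions tile the full image.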

References

[1] Ronneberger, O., P. Fischer, and T. Brox. "U-Net: Convolutional Networks for Biomedical Image Segmentation." Medical Image Computing and Computer-Assisted Intervention (MICCAI). Vol. 9351, 2015, pp. 234–241.

[2] He, K., X. Zhang, S. Ren, and J. Sun. "Delving Deep Into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification." Proceedings of the IEEE International Conference on Computer Vision. 2015, pp. 1026–1034.

Extended Capabilities

Version History

Introduced in R2024a

See Also

Objects

dlnetwork (Deep Learning Toolbox)

Functions

trainnet (Deep Learning Toolbox) | deeplabv3plus | unet3d | pretrainedEncoderNetwork | semanticseg | evaluateSemanticSegmentation