Custom Training Loops

Customize deep learning training loops and loss functions for image networks

If the trainingOptions function does not provide the training options that you need for your task, or you have a loss function that the trainnet function does not support, then you can define a custom training loop. For models that cannot be specified as networks of layers, you can define the model as a function. To learn more, see Define Custom Training Loops, Loss Functions, and Networks.
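As a rough illustration of what such a loop can look like, the sketch below trains a small dlnetwork with a hand-written learning rate schedule. It assumes Deep Learning Toolbox is installed; the toy data, layer sizes, hyperparameters, and the helper name modelLoss are all hypothetical choices made for this example, not values taken from the linked topics.

```matlab
% Toy two-class data (hypothetical): 2 features, 200 observations.
rng(0)
X = randn(2,200,"single");
isPositive = X(1,:) + X(2,:) > 0;
T = single([~isPositive; isPositive]);    % one-hot targets, classes-by-observations

% Small network; the layer sizes are arbitrary choices for this sketch.
layers = [
    featureInputLayer(2)
    fullyConnectedLayer(16)
    reluLayer
    fullyConnectedLayer(2)
    softmaxLayer];
net = dlnetwork(layers);

% Label the dimensions: "C" (channel) by "B" (batch).
X = dlarray(X,"CB");
T = dlarray(T,"CB");

numEpochs = 50;
initialLearnRate = 0.05;   % assumed value
decay = 0.01;              % assumed value
velocity = [];
lossHistory = zeros(1,numEpochs);

for epoch = 1:numEpochs
    % Custom time-based decay schedule for the learning rate.
    learnRate = initialLearnRate/(1 + decay*(epoch-1));

    % Evaluate the loss and gradients with automatic differentiation.
    [loss,gradients] = dlfeval(@modelLoss,net,X,T);

    % Update the learnable parameters using SGD with momentum.
    [net,velocity] = sgdmupdate(net,gradients,velocity,learnRate);

    lossHistory(epoch) = extractdata(loss);
end

function [loss,gradients] = modelLoss(net,X,T)
    % Forward pass, cross-entropy loss, and gradients of the loss
    % with respect to the learnable parameters.
    Y = forward(net,X);
    loss = crossentropy(Y,T);
    gradients = dlgradient(loss,net.Learnables);
end
```

This sketch uses the whole data set as a single batch to stay short. A realistic loop would typically iterate over mini-batches, for example with minibatchqueue, and could swap sgdmupdate for another update function such as adamupdate.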

Topics

Custom Training Loops

- Train Deep Learning Model in MATLAB

Learn how to training deep learning models in MATLAB®. - Define Custom Training Loops, Loss Functions, and Networks

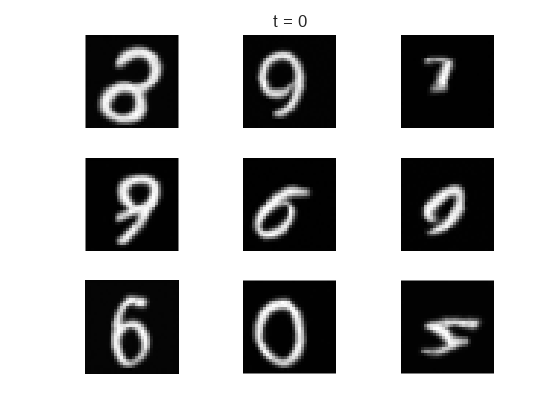

Learn how to define and customize deep learning training loops, loss functions, and models. - Train Network Using Custom Training Loop

This example shows how to train a network that classifies handwritten digits with a custom learning rate schedule. - Monitor Custom Training Loop Progress

Track and plot custom training loop progress. - Train Network with Multiple Outputs

This example shows how to train a deep learning network with multiple outputs that predict both labels and angles of rotations of handwritten digits. - Classify Videos Using Deep Learning with Custom Training Loop

This example shows how to create a network for video classification by combining a pretrained image classification model and a sequence classification network. - Train Image Classification Network Robust to Adversarial Examples

This example shows how to train a neural network that is robust to adversarial examples using fast gradient sign method (FGSM) adversarial training. - Speed Up Deep Neural Network Training

Learn how to accelerate deep neural network training.

Automatic Differentiation

- List of Functions with dlarray Support

View the list of functions that support dlarray objects.

- Automatic Differentiation Background

Learn how automatic differentiation works.

- Use Automatic Differentiation In Deep Learning Toolbox

How to use automatic differentiation in deep learning.
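For orientation, the following minimal sketch shows the basic automatic differentiation pattern that these topics cover: the function to differentiate is evaluated through dlfeval, and dlgradient is called inside it. The function name sumOfSquares and the input values are hypothetical, chosen only for this example.

```matlab
% Wrap the input in a dlarray so that operations on it are traced.
x = dlarray([1 2 3]);

% dlgradient must be called inside a function evaluated by dlfeval.
[y,grad] = dlfeval(@sumOfSquares,x);

gradValues = extractdata(grad);   % [2 4 6], since d/dx sum(x.^2) = 2*x

function [y,grad] = sumOfSquares(x)
    % Scalar function of x and its gradient with respect to x.
    y = sum(x.^2,"all");
    grad = dlgradient(y,x);
end
```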