Localize Industrial Defects Using PatchCore Anomaly Detector

This example shows how to detect and localize defects on printed circuit board (PCB) images using a PatchCore anomaly detection network.

The PatchCore model [1] uses features extracted from convolutional neural networks (CNNs) to distinguish normal and anomalous images based on the distribution of the extracted features in feature space. The patch representation defines a mapping from the original image space to the feature space. The PatchCore model generates per-pixel and per-image anomaly scores, which you can visualize as an anomaly heatmap. The anomaly heatmap shows the anomaly score of each pixel, where higher scores indicate that the pixel is more likely to be anomalous. This provides a visual localization of defects.
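The following minimal sketch makes the scoring idea concrete. It follows the description in [1] and is not the implementation inside patchCoreAnomalyDetector.

- **Inputs:** random vectors stand in for CNN patch embeddings, and all variable names are hypothetical.
- **Patch scores:** each test patch is scored by its Euclidean distance to the nearest feature in a memory bank of normal patches.
- **Score map:** the patch scores form a coarse score map.
- **Image score:** the image-level score is the largest patch score.
- **Omitted:** the reweighting, upsampling, and smoothing steps of the paper.

```matlab
% Minimal sketch of PatchCore-style scoring, following [1].
% Assumption: random vectors stand in for CNN patch embeddings.
rng(0)                                    % reproducible random data
numFeatures = 8;                          % feature dimension (hypothetical)
memoryBank = randn(500,numFeatures);      % embeddings of normal patches
testPatches = randn(64,numFeatures);      % patches of one test image (8-by-8 grid)
testPatches(10,:) = testPatches(10,:) + 6;    % make one patch clearly anomalous

% Squared Euclidean distance between every test patch and every bank entry
d2 = sum(testPatches.^2,2) + sum(memoryBank.^2,2)' - 2*(testPatches*memoryBank');
patchScores = sqrt(max(min(d2,[],2),0));  % distance to the nearest normal patch

% Coarse per-patch score map on the patch grid (upsampling omitted)
scoreGrid = reshape(patchScores,8,8);

% Image-level anomaly score: the largest patch score
[imageScore,worstPatch] = max(patchScores);
```

In this sketch, worstPatch identifies the shifted patch, because no normal feature lies near it.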

This example uses these practical advantages of the PatchCore method:

PatchCore is a one-class learning technique. You train the model using only normal (non-defective) images. Training does not require images with anomalies, which, depending on the application and industrial setting, can be rare, expensive, or unsafe to obtain.

PatchCore uses memory bank subsampling. It first stores the patch-level feature embeddings of the normal training images in a memory bank. It then uses greedy coreset subsampling to keep only a representative subset of them. Inference compares each test patch against the memory bank, so a smaller memory bank reduces memory usage and the computational cost of inference.

PatchCore can operate in low-shot training regimes. This is an advantage for real-world visual inspection applications, where access to training data consisting of normal images is limited. Subsampling the memory bank down to as little as 1% of the patch representations is sufficient for good performance and competitive inference times [1].
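The memory bank subsampling in [1] is a greedy coreset selection. Starting from one feature, it repeatedly adds the feature that is farthest from everything selected so far.

The following minimal sketch applies that idea to random stand-in features with a 1% ratio. It is an illustration under these assumptions, not the toolbox implementation. It also skips the random projection that [1] uses to speed up the distance computations.

```matlab
% Minimal sketch of greedy coreset subsampling, following [1].
% Assumptions: random stand-in features, no random projection step.
rng(1)
features = randn(2000,16);                % patch embeddings from normal images
ratio = 0.01;                             % keep 1% of the patches
numKeep = max(1,round(ratio*size(features,1)));

selected = zeros(numKeep,1);
selected(1) = 1;                          % start from an arbitrary patch
% Distance from every patch to its nearest selected patch so far
minDist = sqrt(sum((features - features(1,:)).^2,2));
for k = 2:numKeep
    [~,next] = max(minDist);              % patch farthest from the current coreset
    selected(k) = next;
    dNew = sqrt(sum((features - features(next,:)).^2,2));
    minDist = min(minDist,dNew);          % update nearest-coreset distances
end
memoryBank = features(selected,:);        % compressed memory bank
coverageRadius = max(minDist);            % worst-case distance to the kept patches
```

Here, coverageRadius reports how far the worst-covered original feature is from its nearest kept feature. Each greedy step reduces or preserves this value.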

In this example, you evaluate the classification decisions of the model by inspecting correctly classified normal and anomalous images, as well as false positive and false negative images. In industrial anomaly localization applications such as this one, understanding why a trained network misclassifies certain images as anomalies is crucial.

Download Pretrained PatchCore Detector

By default, this example downloads a pretrained version of the PatchCore anomaly detector using the helper function downloadTrainedNetwork. The function is attached to this example as a supporting file. You can use the pretrained network to run the entire example without waiting for training to complete.

trainedPCBDefectDetectorNet_url = "https://ssd.mathworks.com/supportfiles/"+ ...
    "vision/data/trainedVisAPCBDefectDetectorPatchCore.zip";
downloadTrainedNetwork(trainedPCBDefectDetectorNet_url,pwd);
load("trainedVisAPCBDefectDetectorPatchCore.mat");

Download VisA Data Set

Load the Visual Anomaly (VisA) data set consisting of 10,821 high-resolution color images (9,621 normal and 1,200 anomalous samples) covering 12 different object subsets in 3 domains [2]. Four of the subsets correspond to four different types of PCBs, containing transistors, capacitors, chips, and other components. The anomalous images in the test set contain surface defects such as scratches, dents, color spots or cracks, as well as structural defects such as misplaced or missing parts.

This example uses one of the four PCB data subsets. This data subset contains train and test folders, which include the normal training images, and the normal and anomalous test images, respectively.

Specify dataDir as the location of the data set. Download the data set using the downloadVisAData helper function. This function, which is attached to the example as a supporting file, downloads a ZIP file and extracts the data.

dataDir = fullfile(tempdir,"VisA");

downloadVisAData(dataDir)

Localize Defects in Image

Read a sample anomalous image with the "bad" label from the data set.

sampleImage = imread(fullfile(dataDir,"VisA", ...
    "pcb4","test","bad","000.JPG"));
sampleImage = imresize(sampleImage,[442 NaN]);   % 442 rows, aspect ratio preserved

Visualize the localization of defects by displaying the original PCB image with the overlaid predicted per-pixel anomaly score map. Use the anomalyMap function to generate the anomaly score heatmap for the sample image.

anomalyHeatMap = anomalyMap(detector,sampleImage);

heatMapImage = anomalyMapOverlay(sampleImage,anomalyHeatMap);

montage({sampleImage, heatMapImage})

title("Heatmap of Anomalous Image")

Prepare Data for Training

Create imageDatastore objects that hold the training and test sets, from the train and test folders of the downloaded VisA data set.

dsTrain = imageDatastore(fullfile(dataDir,"VisA","pcb4","train"), ...
    IncludeSubfolders=true,LabelSource="foldernames");
summary(dsTrain.Labels)

good 904

dsTest = imageDatastore(fullfile(dataDir,"VisA","pcb4","test"), ...
    IncludeSubfolders=true,LabelSource="foldernames");
summary(dsTest.Labels)

bad 100

good 101

Display images of a normal PCB and an anomalous PCB from the test data set.

badImage = find(dsTest.Labels=="bad",1);
badImage = read(subset(dsTest,badImage));
normalImage = find(dsTest.Labels=="good",1);
normalImage = read(subset(dsTest,normalImage));
montage({normalImage,badImage})
title("Test PCB Images Without (Left) and With (Right) Defects")

Partition Data into Calibration and Test Sets

Use a calibration set to determine the threshold for the classifier. Using separate calibration and test sets avoids information leaking from the test set into the design of the classifier. The classifier labels images with anomaly scores above the threshold as anomalous.

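To establish a suitable threshold for the classifier, allocate 50% of the images of each label in the original test set to the calibration set dsCal. Because splitEachLabel splits each label separately, the calibration set has approximately equal numbers of normal and anomalous images. The remaining images form the new test set dsTest.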

[dsCal,dsTest] = splitEachLabel(dsTest,0.5,"randomized");

Resize Images

Define an anonymous function, resizeFcn, that rescales the input images by a factor of 0.4. The VisA data set images are fairly large, so decreasing the input resolution improves training and inference speed. The possible cost is missing very small defects.

size(preview(dsTrain))   % display the size of an original training image
resizeFcn = @(x) imresize(x,0.4);
dsTrain = transform(dsTrain,resizeFcn);
dsCal = transform(dsCal,resizeFcn);
dsTest = transform(dsTest,resizeFcn);

Downsample the Training Data

Use a randomly selected 20% of the normal training images for training. Downsampling the training data has two benefits: it takes advantage of PatchCore's performance in low-shot training regimes, and it decreases peak memory usage during training.

idx = splitlabels(dsTrain.UnderlyingDatastores{1}.Labels,0.2,"randomized");

dsTrainFinal = subset(dsTrain,idx{1});

Define PatchCore Anomaly Detector Network Architecture

Set up the PatchCore detector [1] to extract mid-level features from a CNN backbone. During training, PatchCore adds these features to a memory bank, and subsamples them to compress the memory bank of feature embeddings. The backbone of PatchCore in this example is the ResNet-18 network [3], a CNN that has 18 layers and is pretrained on ImageNet [4].
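In [1], each patch feature is made locally aware before it enters the memory bank. This is done by aggregating the mid-level features over a small spatial neighborhood.

The following minimal sketch approximates that step with a 3-by-3 moving average. It runs on a random array that stands in for a ResNet-18 feature map. The array size and neighborhood size are assumptions, and patchCoreAnomalyDetector may aggregate features differently.

```matlab
% Minimal sketch of locally aware patch features, following [1]:
% average each spatial feature vector over its 3-by-3 neighborhood.
% Assumption: a random array stands in for a mid-level feature map.
rng(2)
featureMap = randn(28,28,128);            % height x width x channels (hypothetical)
kernel = ones(3)/9;                       % 3-by-3 averaging window
patchFeatures = zeros(size(featureMap));
for c = 1:size(featureMap,3)
    % 'same' keeps the spatial size; border locations include zero padding
    patchFeatures(:,:,c) = conv2(featureMap(:,:,c),kernel,'same');
end
% One feature vector per spatial location, ready for the memory bank
embeddings = reshape(patchFeatures,[],size(featureMap,3));
```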

Create a PatchCore anomaly detector network by using the patchCoreAnomalyDetector function with the ResNet-18 backbone.

patchcore = patchCoreAnomalyDetector(Backbone="resnet18");

Train Detector

To train the detector, set the doTraining variable to true. Train the detector by using the trainPatchCoreAnomalyDetector function with the training data and the untrained patchcore network as inputs. Set the CompressionRatio name-value argument to 0.1. With this setting, the memory bank keeps only a small fraction of the original patch features, and the model still performs satisfactorily.

Train on one or more GPUs, if they are available. Using a GPU requires a Parallel Computing Toolbox™ license and a CUDA®-enabled NVIDIA® GPU. For more information, see GPU Computing Requirements (Parallel Computing Toolbox).

doTraining = false;
if doTraining
    detector = trainPatchCoreAnomalyDetector(dsTrainFinal,patchcore,CompressionRatio=0.1);
    % Save the trained detector with a time stamp in the temporary folder
    modelDateTime = string(datetime("now",Format="yyyy-MM-dd-HH-mm-ss"));
    save(fullfile(tempdir,"trainedVisAPCBDefectDetectorPatchCore_"+modelDateTime+".mat"), ...
        "detector");
end

Set Anomaly Threshold

An important stage of semi-supervised anomaly detection is choosing an anomaly score threshold that separates normal images from anomalous images. The anomaly detector classifies images based on whether their scores are above or below this threshold value. This example selects the threshold using the calibration data set, defined in the Partition Data into Calibration and Test Sets step, which contains both normal and anomalous images.

Obtain the mean anomaly score and ground truth label for each image in the calibration set.

scores = predict(detector,dsCal);

labels = dsCal.UnderlyingDatastores{1}.Labels ~= "good";

Plot a histogram of the mean anomaly scores for the normal and anomalous classes. The model-predicted anomaly scores separate the two distributions well.

numBins = 20;
[~,edges] = histcounts(scores,numBins);   % shared bin edges for both classes
figure
hold on
hNormal = histogram(scores(labels==0),edges);
hAnomaly = histogram(scores(labels==1),edges);
hold off
legend([hNormal,hAnomaly],"Normal","Anomaly")
xlabel("Mean Anomaly Score")
ylabel("Counts")

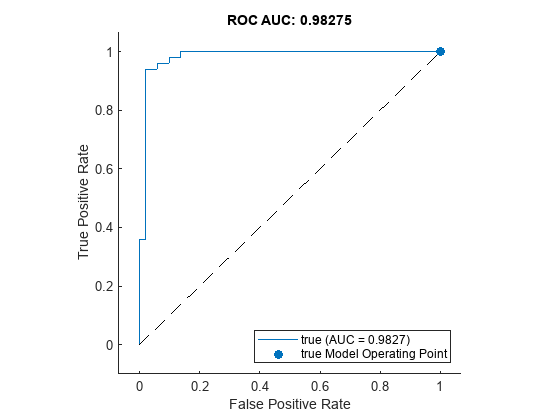

Calculate the optimal anomaly threshold by using the anomalyThreshold function. Specify the input arguments as follows:

- Specify the first two input arguments as the ground truth labels, labels, and the predicted anomaly scores, scores, for the calibration data set.
- Specify the third input argument as true, because true positive anomaly images have a labels value of true.
- Specify the fourth input argument as "MaxF1Score" to choose the threshold that maximizes the F1 score on the calibration set.

The anomalyThreshold function returns the optimal threshold value as a scalar. It also returns the receiver operating characteristic (ROC) curve for the detector as an rocmetrics (Deep Learning Toolbox) object.

[thresh,roc] = anomalyThreshold(labels,scores,true,"MaxF1Score");

Set the Threshold property of the anomaly detector to the optimal value.

detector.Threshold = thresh;
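The following minimal sketch shows the idea behind the "MaxF1Score" choice on toy data: evaluate the F1 score for each candidate threshold and keep the best one.

The scores, the labels, and the use of the observed scores as candidates are assumptions for illustration. anomalyThreshold may evaluate a different set of candidate thresholds. Consistent with this example, an image counts as anomalous when its score is above the threshold.

```matlab
% Minimal sketch of choosing the threshold that maximizes the F1 score.
% Assumptions: toy data; candidates are the observed scores.
scores = [0.2 0.3 0.35 0.4 0.6 0.7 0.8 0.9];    % toy anomaly scores
labels = logical([0 0 0 1 0 1 1 1]);            % true = anomalous
candidates = unique(scores);
f1 = zeros(size(candidates));
for k = 1:numel(candidates)
    predicted = scores > candidates(k);         % anomalous if score is above threshold
    tp = sum(predicted & labels);
    fp = sum(predicted & ~labels);
    fn = sum(~predicted & labels);
    f1(k) = 2*tp / (2*tp + fp + fn);
end
[bestF1,best] = max(f1);
thresh = candidates(best);                      % 0.35 for this toy data
```

For this toy data, a threshold of 0.35 flags all four anomalies and one normal image, which gives an F1 score of 8/9.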

Plot the ROC curve by using the plot (Deep Learning Toolbox) object function of rocmetrics. The ROC curve illustrates the performance of the classifier for a range of possible threshold values. Each point on the ROC curve represents the false positive rate (x-coordinate) and true positive rate (y-coordinate) when the calibration set images are classified using a different threshold value. The solid blue line represents the ROC curve. The area under the ROC curve (AUC) metric indicates classifier performance, and the maximum ROC AUC corresponding to a perfect classifier is 1.0.

plot(roc)

title("ROC AUC: "+ roc.AUC)
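To show what each ROC point and the AUC mean, this minimal sketch sweeps a threshold over hypothetical toy scores. For each threshold, it records one (false positive rate, true positive rate) pair. It then integrates the curve with trapz. In the example itself, rocmetrics computes the curve from the real calibration scores.

```matlab
% Minimal sketch of an ROC curve and its AUC on toy data.
scores = [0.2 0.3 0.35 0.4 0.6 0.7 0.8 0.9];    % toy anomaly scores
labels = logical([0 0 0 1 0 1 1 1]);            % true = anomalous
% Thresholds from "flag nothing" (inf) down to "flag everything"
thresholds = [inf sort(unique(scores(:)),'descend')'];
tpr = zeros(size(thresholds));
fpr = zeros(size(thresholds));
for k = 1:numel(thresholds)
    predicted = scores >= thresholds(k);        % anomalous at or above threshold
    tpr(k) = sum(predicted & labels) / sum(labels);
    fpr(k) = sum(predicted & ~labels) / sum(~labels);
end
auc = trapz(fpr,tpr);                           % area under the ROC curve
```

Here, auc is 0.9375. This equals the fraction of (anomalous, normal) pairs in which the anomalous image has the higher score: 15 of 16 pairs.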

Evaluate Classification Model

Classify each image in the test set as either normal or anomalous.

testSetOutputLabels = classify(detector,dsTest);
testSetOutputLabels = testSetOutputLabels';   % transpose to match the orientation of the ground truth labels

Get the ground truth labels of each test image.

testSetTargetLabels = dsTest.UnderlyingDatastores{1}.Labels;

Evaluate the anomaly detector by using the evaluateAnomalyDetection function. The function calculates several metrics that evaluate the accuracy, precision, sensitivity, and specificity of the detector on the test data set.

metrics = evaluateAnomalyDetection(testSetOutputLabels,testSetTargetLabels,"bad");

Evaluating anomaly detection results
------------------------------------
* Finalizing... Done.
* Data set metrics:

    GlobalAccuracy    MeanAccuracy    Precision    Recall    Specificity    F1Score    FalsePositiveRate    FalseNegativeRate
    ______________    ____________    _________    ______    ___________    _______    _________________    _________________

         0.94             0.94         0.90741      0.98         0.9        0.94231           0.1                 0.02
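As a worked check of the table, the following sketch recomputes each metric from confusion-matrix counts. The counts are inferred, not read from the example output.

Assuming 50 anomalous and 50 normal test images, a recall of 0.98 and a specificity of 0.9 imply these counts:

- 49 true positives
- 1 false negative
- 5 false positives
- 45 true negatives

The calibration and test split is randomized, so the counts in your run can differ.

```matlab
% Worked check of the reported metrics from inferred confusion counts.
tp = 49; fn = 1;     % anomalous test images: detected / missed
fp = 5;  tn = 45;    % normal test images: flagged / passed
globalAccuracy    = (tp + tn) / (tp + tn + fp + fn);        % 0.94
recall            = tp / (tp + fn);                         % 0.98
specificity       = tn / (tn + fp);                         % 0.9
meanAccuracy      = (recall + specificity) / 2;             % 0.94
precision         = tp / (tp + fp);                         % 49/54, about 0.90741
f1Score           = 2*precision*recall / (precision + recall);  % about 0.94231
falsePositiveRate = fp / (fp + tn);                         % 0.1
falseNegativeRate = fn / (fn + tp);                         % 0.02
```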

The ConfusionMatrix property of metrics contains the confusion matrix for the test set. Extract the confusion matrix and display a confusion chart. On this test set, the detector reaches 94% accuracy, with a false positive rate of 10% and a false negative rate of 2%.

M = metrics.ConfusionMatrix{:,:};

confusionchart(M,["Normal","Anomaly"])

acc = sum(diag(M)) / sum(M,"all");

title("Accuracy: "+acc)

Explain Classification Decisions

You can use the anomaly heatmap that the anomaly detector predicts to explain why the detector classifies an image as normal or anomalous. This approach is useful for identifying patterns in false negatives and false positives. You can use these patterns to identify strategies for improving the training data or the detector performance.

Calculate Anomaly Heatmap Display Range

Calculate a display range that reflects the range of anomaly scores observed across the entire calibration set, including normal and anomalous images. Using the same display range across images makes them easier to compare than scaling each image to its own minimum and maximum. The upper limit of the range is 70% of the maximum observed score, so pixels with higher scores display at the top color of the colormap. Use this display range for all heatmaps in this example.

minMapVal = inf;
maxMapVal = -inf;
reset(dsCal)
while hasdata(dsCal)
    img = read(dsCal);
    map = anomalyMap(detector,img);
    % Track the smallest and largest per-pixel scores seen so far
    minMapVal = min(min(map,[],"all"),minMapVal);
    maxMapVal = max(max(map,[],"all"),maxMapVal);
end
displayRange = [minMapVal 0.7*maxMapVal];
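The following minimal sketch shows what a shared display range does to a single overlay. Scores are clipped to the range and scaled to [0,1] before colormapping, so the same score maps to the same color in every image.

The toy image, the score map, the jet colormap, and the equal 50/50 blend are assumptions for illustration. anomalyMapOverlay may use a different colormap and blending.

```matlab
% Minimal sketch of a heatmap overlay with a fixed display range.
% Assumptions: toy image and score map, jet colormap, equal blend.
img = 0.5*ones(64,64,3);                         % gray stand-in image in [0,1]
map = zeros(64,64);
map(20:30,20:30) = 3;                            % toy anomaly score map
displayRange = [0 2];                            % shared range, e.g. from calibration

% Clip to the shared range, then scale to [0,1]; scores above the upper
% limit saturate instead of being rescaled per image
lo = displayRange(1);
hi = displayRange(2);
scaled = (min(max(map,lo),hi) - lo) / (hi - lo);
cmap = jet(256);
rgbMap = ind2rgb(round(scaled*255) + 1,cmap);    % colormap index 1..256
overlay = 0.5*img + 0.5*rgbMap;                  % equal blend
```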

View Heatmap of Anomalous Image

Select an image of a correctly classified anomaly. Display the image with the heatmap overlaid by using the anomalyMapOverlay function.

testSetAnomalyLabels = testSetTargetLabels ~= "good";
idxTruePositive = find(testSetAnomalyLabels & testSetOutputLabels,1);
dsExample = subset(dsTest,idxTruePositive);
img = read(dsExample);
map = anomalyMap(detector,img);
imshow(anomalyMapOverlay(img,map,MapRange=displayRange,Blend="equal"))

View Heatmap of Normal Image

Select and display a correctly classified normal image with the heatmap overlaid.

idxTrueNegative = find(~(testSetAnomalyLabels | testSetOutputLabels),1);
dsExample = subset(dsTest,idxTrueNegative);
img = read(dsExample);
map = anomalyMap(detector,img);
imshow(anomalyMapOverlay(img,map,MapRange=displayRange,Blend="equal"))

View Heatmap of False Positive Image

False positives are images without PCB defect anomalies that the network classifies as anomalous. Use the anomaly heatmap from the PatchCore model [1] to gain insight into these misclassifications.

Select and display a false positive image with the heatmap overlaid. In this test image, high anomaly scores are localized to areas with uneven lighting. To reduce such false positives, consider these options:

- Adjust the image contrast during preprocessing.
- Increase the number of images used for training.
- Choose a different threshold at the calibration step.

idxFalsePositive = find(~(testSetAnomalyLabels) & testSetOutputLabels);
if ~isempty(idxFalsePositive)
    dsExample = subset(dsTest,idxFalsePositive);
    img = read(dsExample);   % read the first false positive image
    map = anomalyMap(detector,img);
    figure
    imshow(anomalyMapOverlay(img,map,MapRange=displayRange,Blend="equal"));
end

View Heatmap of False Negative Image

False negatives are images with PCB defect anomalies that the network classifies as normal. Use the anomaly heatmap from the PatchCore model [1] to gain insight into these misclassifications.

Find and display a false negative image with the heatmap overlaid. To decrease false negative results, consider adjusting the anomaly threshold or the CompressionRatio value used to train the detector.

idxFalseNegative = find(testSetAnomalyLabels & (~testSetOutputLabels));
if ~isempty(idxFalseNegative)
    dsExample = subset(dsTest,idxFalseNegative);
    img = read(dsExample);   % read the first false negative image
    map = anomalyMap(detector,img);
    figure
    imshow(anomalyMapOverlay(img,map,MapRange=displayRange,Blend="equal"))
end

References

[1] Roth, Karsten, Latha Pemula, Joaquin Zepeda, Bernhard Schölkopf, Thomas Brox, and Peter Gehler. "Towards Total Recall in Industrial Anomaly Detection." arXiv, May 5, 2022. https://arxiv.org/abs/2106.08265.

[2] Zou, Yang, Jongheon Jeong, Latha Pemula, Dongqing Zhang, and Onkar Dabeer. "SPot-the-Difference Self-supervised Pre-training for Anomaly Detection and Segmentation." In Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXX, 392–408. Cham: Springer Nature Switzerland, 2022. https://arxiv.org/pdf/2207.14315v1.

[3] He, Kaiming, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. "Deep Residual Learning for Image Recognition." In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 770–778. Las Vegas, NV, USA: IEEE, 2016. https://doi.org/10.1109/CVPR.2016.90.

[4] "ImageNet." Accessed July 3, 2023. https://www.image-net.org.

See Also

patchCoreAnomalyDetector | trainPatchCoreAnomalyDetector | anomalyMap | viewAnomalyDetectionResults | anomalyMapOverlay | anomalyThreshold | evaluateAnomalyDetection | splitAnomalyData | rocmetrics (Deep Learning Toolbox) | confusionchart (Deep Learning Toolbox)