Semantic Segmentation of Orthophotos

After converting the central perspective images captured by your UAV into orthophotos by following the Obtain Orthophotos from Central Perspective Images example, perform semantic segmentation of your orthophotos.

This example shows how to perform semantic segmentation of orthophoto images using a DeepLab v3+ network [1].

Background

Semantic segmentation involves labeling each pixel in an image with a class. You can use segmented aerial orthophotos to create a map or to see how the infrastructure of a city changes over time.

Deep learning-based semantic segmentation can yield a precise measurement of building cover from high-resolution aerial photographs. For other applications of semantic segmentation, see Semantic Segmentation (Computer Vision Toolbox).
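As a rough sketch of how building cover could be measured from a segmented orthophoto, the fraction of Roof pixels in a label matrix gives the cover ratio, and multiplying the Roof pixel count by the ground area of one pixel gives an area estimate. The label matrix and the metersPerPixel value below are made-up illustrations, not data from this example.

```matlab
% Hypothetical 4-by-4 ortholabel: 1 marks Roof pixels, 0 marks NonRoof pixels.
ortholabelNum = [0 0 1 1;
                 0 0 1 1;
                 0 0 0 0;
                 1 1 0 0];

% Assumed ground sampling distance in meters per pixel; replace with your own value.
metersPerPixel = 0.5;

% Count Roof pixels, then convert the count to a fraction and an area.
numRoofPixels = nnz(ortholabelNum == 1);
roofFraction = numRoofPixels/numel(ortholabelNum);
roofArea = numRoofPixels*metersPerPixel^2;   % square meters

fprintf("Roof cover: %.1f%% (%.2f m^2)\n",roofFraction*100,roofArea);
```

The area estimate is only meaningful for orthophotos, where every pixel covers the same ground area.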

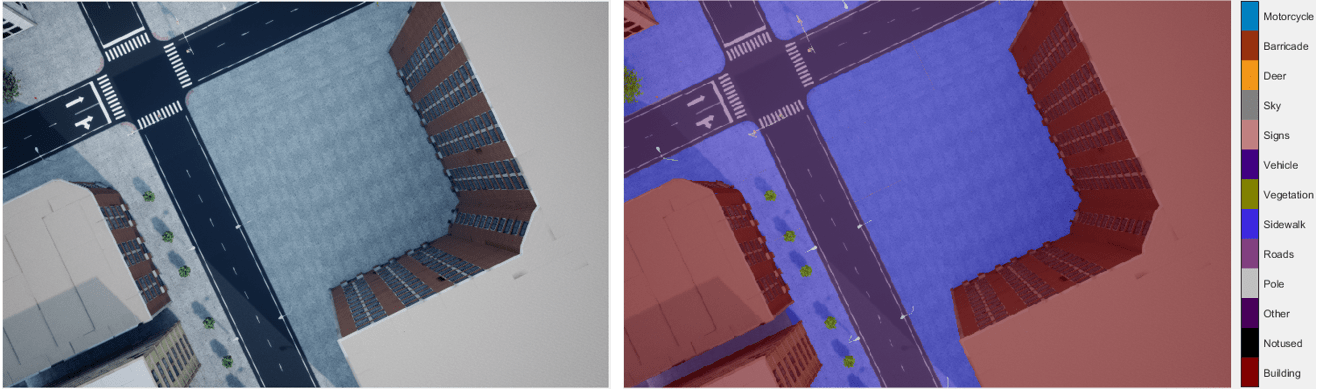

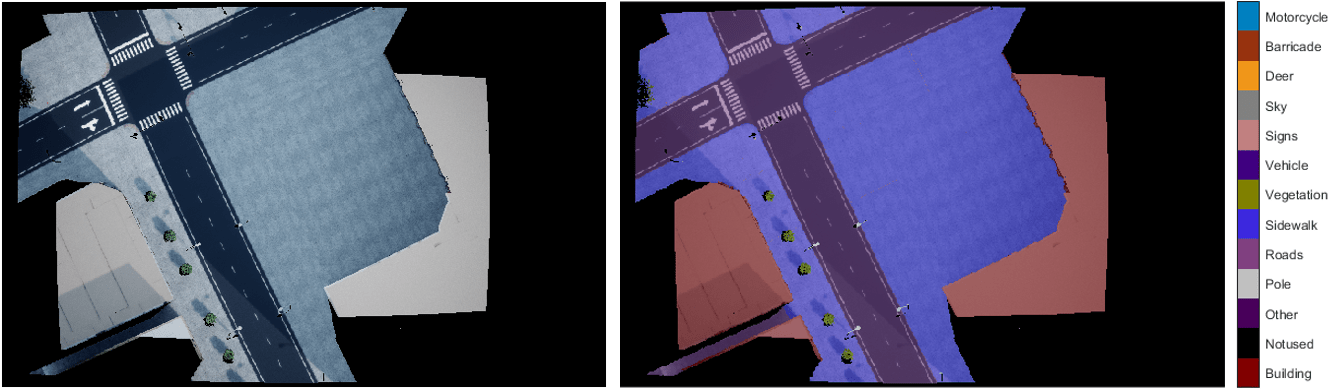

This example uses a DeepLab v3+ network [1], a convolutional neural network (CNN) designed for semantic image segmentation. Use the provided pretrained network to segment the orthophotos. If you prefer, you can train a network on your own data set by using the procedure in the Semantic Segmentation Using Deep Learning (Computer Vision Toolbox) example. This figure shows the type of synthetic data that you can use to train such networks, before and after obtaining an orthophoto.

Setup

This example uses a pretrained DeepLab v3+ network with a ResNet-18 backbone. ResNet-18 is an efficient network that is well suited for applications with limited processing resources. You can use other pretrained networks, such as MobileNet v2 or ResNet-50, depending on your application requirements. For more details, see Pretrained Deep Neural Networks (Deep Learning Toolbox).

The pretrained network has been trained on a data set of Unreal Engine® US City Block scene data. The Building class in the Unreal Engine simulation data corresponds to the Roof class. All other class labels in the Unreal Engine simulation have been marked as NonRoof.

As a result, the network has been trained for binary semantic segmentation using the Roof and NonRoof labels.
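The relabeling described above can be illustrated with a small categorical array. This is only a sketch: the Road and Tree class names are hypothetical stand-ins for the other simulation classes, and the actual preprocessing used to train the pretrained network is not shown in this example.

```matlab
% Hypothetical multiclass labels for a 2-by-3 image patch.
sceneLabels = categorical(["Building" "Road" "Tree";
                           "Building" "Building" "Road"]);

% Pixels labeled Building become Roof; every other class becomes NonRoof.
isRoof = sceneLabels == "Building";
binaryLabels = categorical(isRoof,[false true],["NonRoof" "Roof"]);

disp(binaryLabels)
```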

If you have not run the Obtain Orthophotos from Central Perspective Images example, you can download that data for this example by setting ranPrevEx to false. Otherwise, set ranPrevEx to true.

ranPrevEx = "false";

If ranPrevEx is false, download the required data.

if strcmp(ranPrevEx,"false")
    preprocessedDataComponent = "uav/data/R2023b";
    preprocessedDataFilename = "orthophotoData.zip";
    disp("Downloading previously processed orthophoto data (50 MB)...");
    orthoDataFolder = exampleHelperDownloadData(preprocessedDataComponent,preprocessedDataFilename);
end

Downloading previously processed orthophoto data (50 MB)...

Download the pretrained network.

pretrainedNetworkComponent = "uav/data";
pretrainedNetworkFilename = "deeplabv3plusResnet18Unreal.zip";
disp("Downloading pretrained network (60 MB) ...");

Downloading pretrained network (60 MB) ...

pretrainedNetworkFolder = exampleHelperDownloadData(pretrainedNetworkComponent,pretrainedNetworkFilename);

A CUDA-capable NVIDIA™ GPU is highly recommended for running this example. Use of a GPU requires Parallel Computing Toolbox™. For more information about the supported parallel computing capabilities, see Parallel Computing Toolbox.
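If you are unsure whether a supported GPU is available, a check such as the following can help. This is a minimal sketch: the executionEnvironment variable name is an illustration and is not used elsewhere in this example. By default, semanticseg selects the hardware automatically, so an explicit check is mainly useful for logging or for forcing CPU execution.

```matlab
% canUseGPU returns true only when a supported GPU and
% Parallel Computing Toolbox are available.
if canUseGPU
    executionEnvironment = "gpu";
else
    executionEnvironment = "cpu";
end
fprintf("Running inference on: %s\n",executionEnvironment);

% The chosen value could then be passed to semanticseg, for example:
% ortholabel = semanticseg(orthophoto,net,ExecutionEnvironment=executionEnvironment);
```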

Load Orthophotos and Pretrained Network

Use an imageDatastore object to load and store the orthophotos from a previous Unreal Engine simulated UAV flight. The imageDatastore object enables you to efficiently load a large collection of images into the workspace.

If you ran the previous Obtain Orthophotos from Central Perspective Images example, select the path to the orthophotos folder that you generated from that example and load the orthophotos. If you have not run the previous example, open the orthophotos folder included in the zip file that you downloaded earlier in this example.

if strcmp(ranPrevEx,"true")
    orthophotoFolderPath = uigetdir(".","Select orthophotos folder to be read");
    imds = imageDatastore(orthophotoFolderPath);
else
    orthophotoFolderPath = fullfile(orthoDataFolder,"orthophotos");
    imds = imageDatastore(orthophotoFolderPath);
end
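Before continuing, it can be worth confirming that the datastore actually found images in the selected folder. The following self-contained sketch shows one way to do that on placeholder data; the temporary folder, the file names, and the demoImds variable are hypothetical and stand in for your orthophotos folder and imds.

```matlab
% Write two small placeholder images to a temporary folder (hypothetical data).
demoFolder = tempname;
mkdir(demoFolder);
imwrite(uint8(zeros(8,8,3)),fullfile(demoFolder,"frame_1.png"));
imwrite(uint8(255*ones(8,8,3)),fullfile(demoFolder,"frame_2.png"));

% Create the datastore and confirm that it found the images.
demoImds = imageDatastore(demoFolder);
numFound = numel(demoImds.Files);
assert(numFound > 0,"No images found in %s",demoFolder);

% Read one image and inspect its size.
firstImage = readimage(demoImds,1);
disp(size(firstImage))
```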

Load the pretrainedNetwork MAT file, which contains the pretrained network. You use this network, stored in the variable net, to obtain the ortholabels.

pretrainedNetwork = fullfile(pretrainedNetworkFolder,"pretrainedNetwork.mat");

load(pretrainedNetwork);

Sort the files in the image datastore by their index. This ensures that the images are read in the order of their UAV-flight acquisition time, enabling you to visualize the frames in the correct order.

imds.Files = exampleHelperSortFilepathsByIndex(imds.Files);
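The implementation of exampleHelperSortFilepathsByIndex is not shown here. As an illustration of why the sort matters, a plain alphabetical ordering places frame_10 before frame_2. The sketch below sorts numerically instead, assuming (hypothetically) that each file name ends with its frame index followed by the extension.

```matlab
% Hypothetical file paths in alphabetical (not acquisition) order.
files = {'orthophotos/frame_1.png';
         'orthophotos/frame_10.png';
         'orthophotos/frame_2.png'};

% Extract the numeric index that precedes the file extension.
tokens = regexp(files,'(\d+)\.\w+$','tokens','once');
frameIndex = cellfun(@(t) str2double(t{1}),tokens);

% Reorder the paths by ascending frame index.
[~,order] = sort(frameIndex);
sortedFiles = files(order);

disp(sortedFiles)
```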

Initialize the colormap, class names, and class indices.

cmap = [60,40,222;...
        128,0,0]./255;
classNames = ["NonRoof","Roof"];
classIndexes = [0 1];
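To see how the class indices relate to the colormap rows, you can convert a small index image to RGB. This sketch uses a made-up 2-by-2 label image. With a uint8 index image, ind2rgb maps index 0 to the first colormap row (NonRoof) and index 1 to the second row (Roof).

```matlab
% Colormap from this example: row 1 is NonRoof, row 2 is Roof.
cmap = [60,40,222;
        128,0,0]./255;

% Hypothetical label image using class indices 0 (NonRoof) and 1 (Roof).
labelIdx = uint8([0 1;
                  1 1]);

% For uint8 input, index 0 selects the first colormap row.
rgb = ind2rgb(labelIdx,cmap);

nonRoofColor = squeeze(rgb(1,1,:))';
roofColor = squeeze(rgb(1,2,:))';
disp(nonRoofColor*255)
disp(roofColor*255)
```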

Classify One Orthophoto

Display orthophoto frame 20 prior to classification to verify that the orthophotos have been read properly.

numSamples = size(imds.Files,1);

sampleIndex = 20;

orthophoto = readimage(imds,sampleIndex);

imshow(orthophoto);

title(sprintf("Orthophoto Frame Number [%d/%d]",sampleIndex,numSamples));

![Figure contains an axes object. The axes object with title Orthophoto Frame Number [20/95] contains an object of type image.](../../examples/uav/win64/SemanticSegmentationOfOrthophotosExample_03.png)

To verify the effectiveness of the pretrained network, run it on orthophoto frame 20 to obtain the ortholabels for the orthophoto.

ortholabel = semanticseg(orthophoto, net);

Then, display the predicted ortholabels using a colormap.

labelsOverlaid = labeloverlay(orthophoto,ortholabel,Colormap=cmap,Transparency=0.4);

imshow(labelsOverlaid)

exampleHelperPixelLabelColorbar(cmap, classNames);

title(sprintf("Ortholabels for Frame Number [%d/%d]",sampleIndex,numSamples));

![Figure contains an axes object. The axes object with title Ortholabels for Frame Number [20/95] contains an object of type image.](../../examples/uav/win64/SemanticSegmentationOfOrthophotosExample_04.png)

Visually confirm that the Roof regions correspond to roofs in the orthophoto. If there is a significant discrepancy in classification, the pretrained network is not a good fit for your data. To train a network, refer to the Semantic Segmentation Using Deep Learning (Computer Vision Toolbox) example. Once you have a network that returns accurate labels for your sample image, you can use it to classify your related orthophotos.
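If you have a manually labeled mask for a sample frame, you could complement the visual check with a numeric score such as intersection over union (IoU) for the Roof class. This example does not provide ground truth labels, so both masks below are made-up illustrations.

```matlab
% Hypothetical Roof masks: network prediction and manual ground truth.
predictedRoof = logical([0 1 1;
                         0 1 1;
                         0 0 0]);
groundTruthRoof = logical([0 1 1;
                           0 1 0;
                           0 0 0]);

% IoU = pixels labeled Roof in both masks / pixels labeled Roof in either mask.
intersectionCount = nnz(predictedRoof & groundTruthRoof);
unionCount = nnz(predictedRoof | groundTruthRoof);
roofIoU = intersectionCount/unionCount;

fprintf("Roof IoU: %.2f\n",roofIoU);
```

A value close to 1 indicates good agreement between the predicted and reference masks. What counts as acceptable depends on your application.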

Classify All Orthophotos and Save Predicted Ortholabels

Use the same procedure as for the sample image to classify all orthophotos using the pretrained network, and save the output ortholabels.

If an ortholabels folder does not already exist in the current working directory, create one.

ortholabelFolderName = "ortholabels";

exampleHelperMakeFolder(ortholabelFolderName);

Create a cell array to store the paths to the saved ortholabels. Then obtain the ortholabels for each orthophoto, and save them to the ortholabels folder.

% Variable to store paths to saved ortholabels
pathToOrtholabels = cell(1,numSamples);

% Show progress bar
f = waitbar(0,"Please wait while ortholabel images are being written ...");

% Obtain ortholabel for each orthophoto
for idx=1:numSamples

    % Get current orthophoto
    orthophoto = readimage(imds,idx);

    % Compute ortholabel for current frame as categorical matrix
    ortholabelCat = semanticseg(orthophoto, net);

    % Convert ortholabel from categorical matrix to numeric matrix
    ortholabelNum = exampleHelperCategoricalToClassIndex(ortholabelCat,classNames,classIndexes);

    % Save ortholabel as image file
    pathToOrtholabels{idx} = fullfile(ortholabelFolderName,"frame_"+string(idx)+".png");
    imwrite(ortholabelNum,pathToOrtholabels{idx});

    % Update progress bar
    progress = idx/numSamples;
    waitbar(progress,f,sprintf("Obtaining and saving ortholabel for frame [%d/%d] - %.2f%%",idx,numSamples,progress*100));
end

% Close progress bar
close(f);

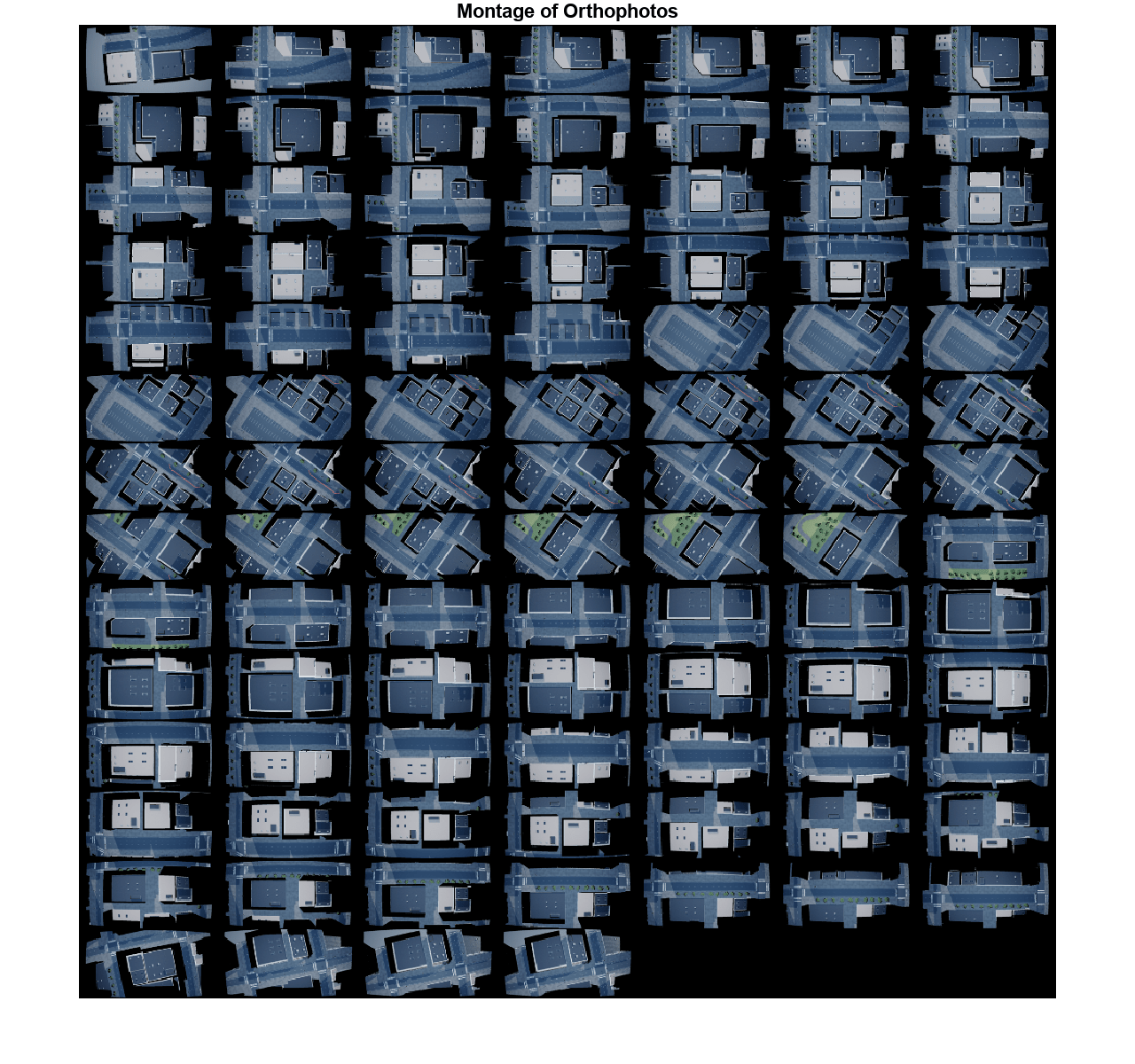
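The implementation of exampleHelperCategoricalToClassIndex is not shown in this example. As a rough sketch of what such a conversion could look like, the following code maps each category name to its class index and stores the result as a uint8 matrix suitable for imwrite. The small label matrix is made up for illustration.

```matlab
classNames = ["NonRoof","Roof"];
classIndexes = [0 1];

% Hypothetical 2-by-2 categorical ortholabel.
labelCat = categorical(["Roof" "NonRoof";
                        "NonRoof" "Roof"],classNames);

% Assign each pixel the numeric index of its class.
labelNum = zeros(size(labelCat),"uint8");
for k = 1:numel(classNames)
    labelNum(labelCat == classNames(k)) = classIndexes(k);
end

disp(labelNum)
```

Storing the indices as uint8 keeps the saved PNG compatible with a 0-based indexed image, which is how the colormap is applied when the montage is created.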

Show a montage of all the orthophotos.

figure;

orthophotoMontage = double(montage(imds).CData);

title("Montage of Orthophotos");

Create a montage of all the predicted ortholabels in a hidden figure, and store its image data for the overlay.

figure("Visible","off");
ortholabelMontage = montage(pathToOrtholabels,cmap).CData;

Create and display a montage of the ortholabels overlaid onto the orthophotos.

Set the transparency for the overlay and create the montage.

transparency = 0.4;
orthosOverlaid = transparency*orthophotoMontage/255 + (1-transparency)*ortholabelMontage;
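The blend is a per-pixel weighted average of the two montages. Because the weights sum to 1 and both inputs are scaled to the range [0, 1], the result also stays in that range. Note that in this formula the orthophoto is weighted by transparency and the label colors by 1-transparency. The sketch below applies the same formula to small made-up arrays; the photo and labelColor variables are hypothetical stand-ins for the montage data.

```matlab
transparency = 0.4;

% Hypothetical white orthophoto patch with values in the 0-255 range.
photo = 255*ones(2,2,3);

% Hypothetical label colors in the 0-1 range: the Roof color [128 0 0]/255.
labelColor = zeros(2,2,3);
labelColor(:,:,1) = 128/255;

% Same weighted average as used for the montages.
blended = transparency*photo/255 + (1-transparency)*labelColor;

disp(squeeze(blended(1,1,:))')
```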

Display the montage of the orthophotos with the ortholabels overlay.

figure;

imshow(orthosOverlaid);

title("Montage of Predicted Ortholabels Overlaying Orthophotos");

Conclusion

This example showed you how to perform semantic segmentation of aerial images.

In the final step of the Map and Classify Urban Environment Using UAV Camera and Deep Learning workflow, Create Orthomosaic from Orthophotos, you will stitch these orthophotos and their ortholabels to create a semantically segmented map of the city.

References

[1] Chen, Liang-Chieh, Yukun Zhu, George Papandreou, Florian Schroff, and Hartwig Adam. “Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation,” 2018. https://doi.org/10.48550/ARXIV.1802.02611.