Train Regression Model Using Hyperparameter Optimization in Regression Learner App

This example shows how to tune hyperparameters of a regression ensemble by using hyperparameter optimization in the Regression Learner app. Compare the test set performance of the trained optimizable ensemble to that of the best-performing preset ensemble model.

In the MATLAB® Command Window, load the `carbig` data set, and create a table containing most of the variables.

```matlab
load carbig
cartable = table(Acceleration,Cylinders,Displacement, ...
    Horsepower,Model_Year,Weight,Origin,MPG);
```

Open Regression Learner. Click the Apps tab, and then click the arrow at the right of the Apps section to open the apps gallery. In the Machine Learning and Deep Learning group, click Regression Learner.

On the Learn tab, in the File section, select New Session > From Workspace.

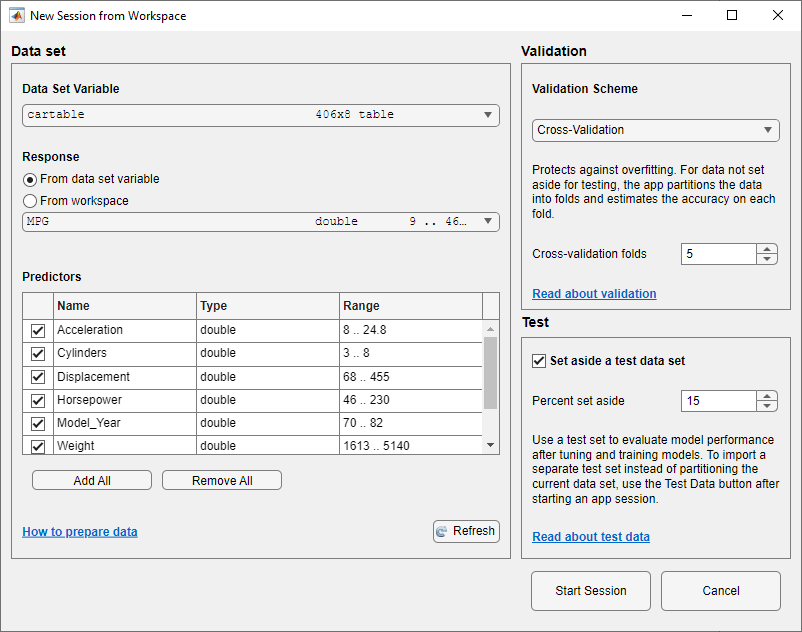

In the New Session from Workspace dialog box, select `cartable` from the Data Set Variable list. The app selects the response and predictor variables. The default response variable is `MPG`. The default validation option is 5-fold cross-validation, to protect against overfitting.

In the Test section, click the check box to set aside a test data set. Specify to use `15` percent of the imported data as a test set.

To accept the options and continue, click Start Session.
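For readers who want a command-line analogue of these session options, the following is a minimal sketch that reserves 15 percent of the rows for testing and sets up 5-fold cross-validation on the rest. The variable names (`holdout`, `trainTbl`, `testTbl`, `folds`) and the fixed random seed are assumptions for illustration; the app's internal partitioning is not necessarily identical.

```matlab
load carbig
cartable = table(Acceleration,Cylinders,Displacement, ...
    Horsepower,Model_Year,Weight,Origin,MPG);

rng('default')  % assumed seed, only so that the split is repeatable

% Reserve roughly 15 percent of the observations as a test set.
holdout = cvpartition(height(cartable),'HoldOut',0.15);
trainTbl = cartable(training(holdout),:);
testTbl  = cartable(test(holdout),:);

% Define 5-fold cross-validation on the remaining observations.
folds = cvpartition(height(trainTbl),'KFold',5);
```

The test rows play no part in training or validation, which is what later allows them to serve as an independent check.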

Train all preset ensemble models. On the Learn tab, in the Models section, click the arrow to open the gallery. In the Ensembles of Trees group, click All Ensembles. In the Train section, click Train All and select Train All. The app trains one of each ensemble model type, as well as the default fine tree model, and displays the models in the Models pane.

Note

If you have Parallel Computing Toolbox™, then the Use Parallel button is selected by default. After you click Train All and select Train All or Train Selected, the app opens a parallel pool of workers. During this time, you cannot interact with the software. After the pool opens, you can continue to interact with the app while models train in parallel.

If you do not have Parallel Computing Toolbox, then the Use Background Training check box in the Train All menu is selected by default. After you select an option to train models, the app opens a background pool. After the pool opens, you can continue to interact with the app while models train in the background.

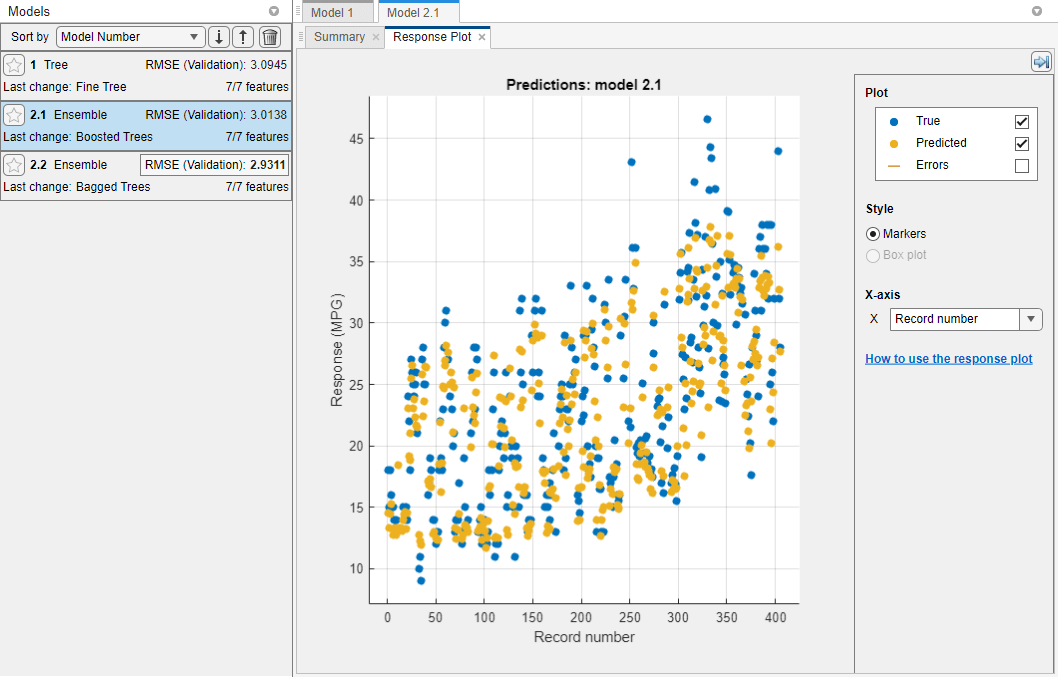

The app displays a response plot for the first ensemble model (model 2.1). Blue points are true values, and yellow points are predicted values. The Models pane on the left shows the validation RMSE for each model.

Note

Validation introduces some randomness into the results. Your model validation results can vary from the results shown in this example.
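As a rough command-line counterpart to training the preset ensembles, the sketch below fits one boosted and one bagged tree ensemble with `fitrensemble` and estimates a 5-fold validation RMSE for each. The specific settings (30 learners, minimum leaf size 8, learning rate 0.1) are assumed values meant to resemble the presets, not confirmed app defaults. Converting `Origin` to categorical and dropping rows with a missing response are precautions added here.

```matlab
load carbig
Origin = categorical(cellstr(Origin));  % precaution: use a categorical predictor
cartable = table(Acceleration,Cylinders,Displacement, ...
    Horsepower,Model_Year,Weight,Origin,MPG);
cartable = rmmissing(cartable,'DataVariables','MPG');  % drop rows with no response

rng('default')  % assumed seed; results still depend on the random folds
holdout = cvpartition(height(cartable),'HoldOut',0.15);
trainTbl = cartable(training(holdout),:);

% Assumed settings that approximate the preset ensembles.
t = templateTree('MinLeafSize',8);
boosted = fitrensemble(trainTbl,'MPG','Method','LSBoost', ...
    'NumLearningCycles',30,'LearnRate',0.1,'Learners',t);
bagged = fitrensemble(trainTbl,'MPG','Method','Bag', ...
    'NumLearningCycles',30,'Learners',t);

% 5-fold cross-validation; kfoldLoss returns MSE, so take the square root.
rmseBoosted = sqrt(kfoldLoss(crossval(boosted,'KFold',5)));
rmseBagged  = sqrt(kfoldLoss(crossval(bagged,'KFold',5)));
```

Because the folds are drawn at random, the two RMSE values change with the seed, which mirrors the note above about validation results varying.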

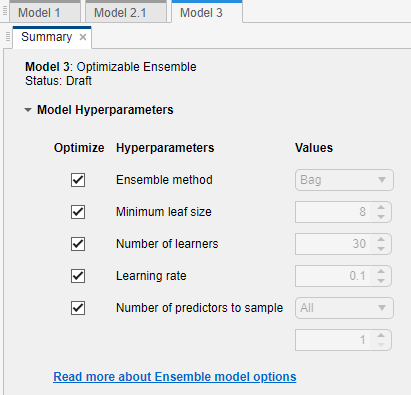

Select an optimizable ensemble model to train. On the Learn tab, in the Models section, click the arrow to open the gallery. In the Ensembles of Trees group, click Optimizable Ensemble.

Select the model hyperparameters to optimize. In the Summary tab, you can select Optimize check boxes for the hyperparameters that you want to optimize. By default, all the check boxes are selected. For this example, accept the default selections.

Train the optimizable model. In the Train section of the Learn tab, click Train All and select Train Selected.

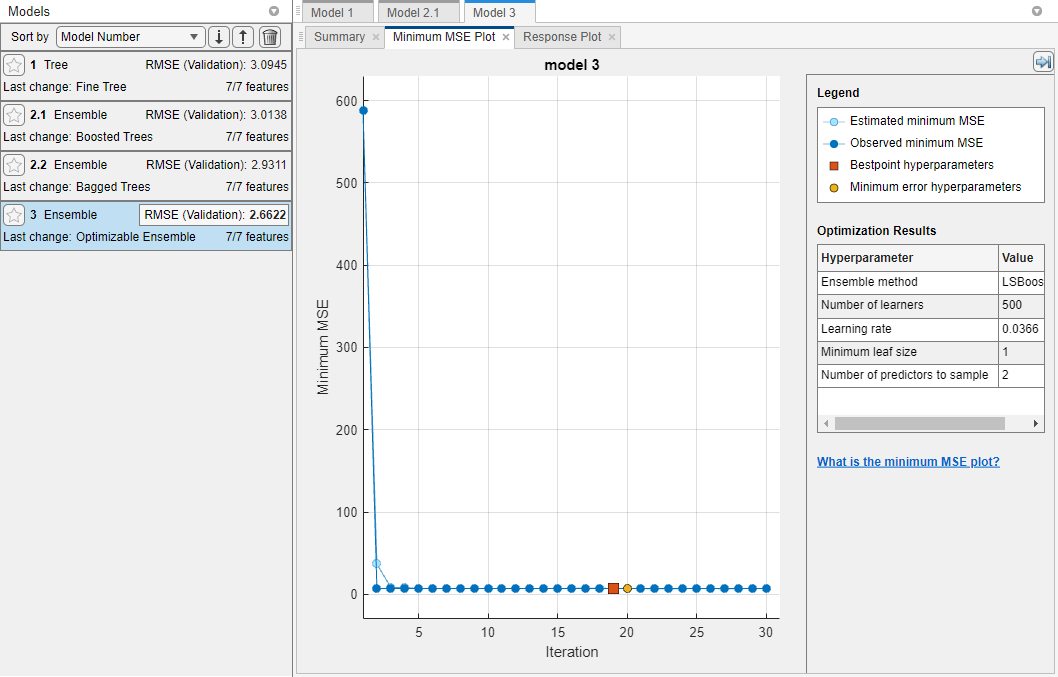

The app displays a Minimum MSE Plot as it runs the optimization process. At each iteration, the app tries a different combination of hyperparameter values and updates the plot with the minimum validation mean squared error (MSE) observed up to that iteration, indicated in dark blue. When the app completes the optimization process, it selects the set of optimized hyperparameters, indicated by a red square. For more information, see Minimum MSE Plot.
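The "minimum observed up to that iteration" curve is a running minimum over the per-iteration validation MSE values. The sketch below illustrates the idea with made-up numbers; the values in `observedMSE` are hypothetical and do not come from this example.

```matlab
% Hypothetical validation MSE observed at six successive iterations.
observedMSE = [12.4 10.9 11.7 9.8 10.2 9.1];

% Running minimum: the lowest MSE seen so far at each iteration.
minSoFar = cummin(observedMSE);

% Iteration at which the lowest observed MSE occurred.
[bestMSE,bestIteration] = min(observedMSE);
```

An iteration that does worse than an earlier one (such as the third value here) leaves the curve flat, so the plotted minimum never increases.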

The app lists the optimized hyperparameters in both the Optimization Results section to the right of the plot and the Optimizable Ensemble Model Hyperparameters section of the model Summary tab.

Note

In general, the optimization results are not reproducible.
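Outside the app, a comparable search can be sketched with the `OptimizeHyperparameters` argument of `fitrensemble`. The example below is an approximation under stated assumptions: the `'auto'` hyperparameter set, the fixed seed, the `expected-improvement-plus` acquisition function, and the categorical conversion of `Origin` are choices made here, and the search space may not match the check boxes in the app.

```matlab
load carbig
Origin = categorical(cellstr(Origin));  % precaution: use a categorical predictor
cartable = table(Acceleration,Cylinders,Displacement, ...
    Horsepower,Model_Year,Weight,Origin,MPG);
cartable = rmmissing(cartable,'DataVariables','MPG');  % drop rows with no response

rng('default')  % assumed seed, to make this run more repeatable
holdout = cvpartition(height(cartable),'HoldOut',0.15);
trainTbl = cartable(training(holdout),:);

% Bayesian optimization with 30 evaluations and 5-fold cross-validation.
opts = struct('MaxObjectiveEvaluations',30,'Kfold',5, ...
    'AcquisitionFunctionName','expected-improvement-plus', ...
    'ShowPlots',false,'Verbose',0);
optMdl = fitrensemble(trainTbl,'MPG', ...
    'OptimizeHyperparameters','auto', ...
    'HyperparameterOptimizationOptions',opts);

% Hyperparameter values at the lowest observed objective.
results = optMdl.HyperparameterOptimizationResults;
bestParams = results.XAtMinObjective;
```

Increasing `MaxObjectiveEvaluations` (for example, to 60) lets the search run longer at the cost of more training time.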

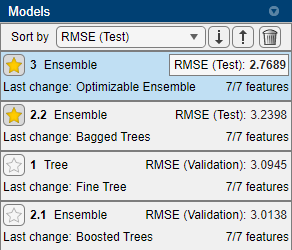

Compare the trained preset ensemble models to the trained optimizable model. In the Models pane, the app highlights the lowest RMSE (Validation) (validation root mean squared error) by outlining it in a box. In this example, the trained optimizable ensemble outperforms the two preset models.

A trained optimizable model does not always have a lower RMSE than the trained preset models. If a trained optimizable model does not perform well, you can try to get better results by running the optimization for longer. On the Learn tab, in the Options section, click Optimizer. In the dialog box, increase the Iterations value. For example, you can double-click the default value of `30` and enter a value of `60`. Then click Save and Apply. The options will be applied to future optimizable models created using the Models gallery.

Because hyperparameter tuning often leads to overfitted models, check the performance of the optimizable ensemble model on a test set and compare it to the performance of the best preset ensemble model. Use the data you reserved for testing when you imported data into the app.

First, in the Models pane, click the star icons next to the Bagged Trees model and the Optimizable Ensemble model.

For each model, select the model in the Models pane. In the Test section of the Test tab, click Test Selected. The app computes the test set performance of the model trained on the rest of the data, namely the training and validation data.

Sort the models based on the test set RMSE. In the Models pane, open the Sort by list and select `RMSE (Test)`.

In this example, the trained optimizable ensemble still outperforms the trained preset model on the test set data. More importantly, the test set RMSE is comparable to the validation RMSE for the optimizable model.
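The comparison between validation and test RMSE can also be sketched at the command line. The example below uses a single bagged ensemble with assumed settings (30 learners, minimum leaf size 8) as a stand-in for any trained model. It computes the 5-fold validation RMSE on the training rows and the RMSE on the held-out test rows.

```matlab
load carbig
Origin = categorical(cellstr(Origin));  % precaution: use a categorical predictor
cartable = table(Acceleration,Cylinders,Displacement, ...
    Horsepower,Model_Year,Weight,Origin,MPG);
cartable = rmmissing(cartable,'DataVariables','MPG');  % drop rows with no response

rng('default')  % assumed seed, only so that the split is repeatable
holdout = cvpartition(height(cartable),'HoldOut',0.15);
trainTbl = cartable(training(holdout),:);
testTbl  = cartable(test(holdout),:);

% Stand-in model with assumed settings.
mdl = fitrensemble(trainTbl,'MPG','Method','Bag', ...
    'NumLearningCycles',30,'Learners',templateTree('MinLeafSize',8));

% Validation RMSE from 5-fold cross-validation on the training rows.
rmseValidation = sqrt(kfoldLoss(crossval(mdl,'KFold',5)));

% Test RMSE on rows that were never used for training or validation.
rmseTest = sqrt(loss(mdl,testTbl,'MPG'));
```

A test RMSE far above the validation RMSE would suggest that the model was overfitted to the training and validation data.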

See Also

Topics

- Hyperparameter Optimization in Regression Learner App

- Train Regression Models in Regression Learner App

- Select Data for Regression or Open Saved App Session

- Choose Regression Model Options

- Visualize and Assess Model Performance in Regression Learner

- Export Regression Model to Predict New Data

- Bayesian Optimization Workflow