Simulink Based Intelligent Bin Picking Using Universal Robots UR5e for PVC Fittings

This example shows you how to implement semi-structured intelligent bin picking of four different shapes of standard PVC fittings. This example uses a Universal Robots UR5e to perform the bin picking task, enabling successful detection and classification of objects.Then, it uses the suction gripper connected to the cobot to sort the picked up items into four different bins.

This example is packaged as a Simulink® project and similar to the featured example Pick Standard PVC Fittings of Different Shapes Using Semi-Structured Intelligent Bin Picking for UR5e.

Toolbox Dependency (Optional)

Computer Vision Toolbox™ Model for YOLO v4 Object Detection support package is required if you want to train a detector model.

Additional Resources

YOLO Object Detector MAT files and Dataset: This example uses a pretrained YOLOV4 object detector for identifying the PVC objects. Download the detector MAT-file from this link.

Object detector and data set for UR5e Hardware

Note: This script below demonstrates how to train a YOLOv4 object detector for PVC Fittings objects.

open("trainYoloV4ForPVCObject.mlx");

Introduction to Bin Picking Application

This example focuses on semi-structured bin picking. This diagram shows the classification of bin picking operations based on complexity.

At a high level, the bin picking task can be divided into two major modules:

Vision processing / Perception module

Motion or Trajectory planning module

Vision Processing or Perception Module

The workflow in this module involves these steps:

Object detection and classification using RGB data, involving object segmentation based on RGB and depth (RGBD) data using deep learning.

Object pose estimation using 3D point cloud data, which is utilized to estimate the pose of the identified object for motion planning.

The perception step involves these two sub-steps:

Training and validation: Train the RGB-based object detection and classification network (YOLO v4) and its validation against the test dataset.

Online object detection: Detect objects using real-time raw RGBD data using a pre-trained YOLO v4 network, and estimate object pose using Principle Component Analysis (PCA) and Iterative Closest Point (ICP) algorithm.

Motion Planning Module

The workflow in this module involves these steps:

Smart motion planning - selects the part to be picked for motion planning from the detected parts

Goal execution - executes trajectory planning for pick and place operation using UR5e cobot

The motion planning algorithm in this example is designed using the manipulatorCHOMP planner. This algorithm optimizes trajectories for smoothness and collision avoidance by minimizing a cost function consisting of a smoothness cost and a collision cost.

Hardware Requirements

Universal Robots UR5e

Robotiq Epick Suction Gripper or Robotiq 2F-85 Two Finger Gripper

Interface for Universal Robots UR5e

This example uses functionality from Robotics System Toolbox™ Support Package for Universal Robots UR Series Manipulators for trajectory and joint control of the Universal Robots UR5e hardware. This support package provides the universalrobot class, which you can use to control the ROS interface. For more information, see universalrobot documentation.

Interface for Robotiq Gripper

In most real-world cobot applications, operations such as picking and placing, dispensing, and so on, require attaching and using an external end-effector tool customized to the specific application. The ROS drivers from the Universal Robots offer a hand-back control ROS service, which you can use to gain control back from the external control program node. In this example, a helper function is created using this ROS service that you can use in the UR program node execution to run gripper operations along with motion planning.

Interface for Intel RealSense D415

This example uses Intel RealSense D415 camera depth module for perception. The MATLAB® connection is established over the ROS using the IntelRealSense ROS driver.

The data set that this example uses for training the YOLOv4 deep learning network has been created using this sensor.

For more information on how to install the necessary ROS drivers for the connection.

Dimensions of Bin and PVC Fittings

This example uses PVC fittings in four shapes, shown in this diagram, to demonstrate the intelligent bin picking workflow.

The example also uses a rectangular bin.

The initializeParametersForBinPickingSimulationSL.m file contains the dimensions of the cuboid shapes and the bin.

Set Up Rigid Body Tree Environment for Motion Planning

In this example, RigidBodyTree environment is created for motion planning algorithm for manipulatorCHOMP.

This flow chart shows the complete workflow that uses the Live Scripts available in Perception, Motion Planning, and Integration folders of the MATLAB project.

Gripper Types

This example supports the following grippers:

Robotiq Epick Suction Gripper

Robotiq 2F-85 Two-Finger Gripper

Known Limitations

If the objects are very close and attached to the bin wall, the perception algorithm might fail to compute the pose, causing an error in the Simulink model.

In certain edge cases, an object might come into contact with the top surface of the bin wall during place trajectory execution. This might happen due to modeling errors in the collision environment for the rigid body tree.

Open the Simulink Project for Intelligent Bin Picking

To get started, open the example live script and access the supporting files by either clicking Open Live Script in the documentation or using the openExample command.

openExample('urseries/SimulinkIntelligentBinPickUR5ePVCExample');

Open the Simulink™ project file.

prj = openProject('SimulinkBinPickPVCUR5e/IntelligentBinPickingExampleWithSimulink.prj');

Open Simulink Model for Intelligent Bin Picking

Open the IntelligentBinPicking Simulink model by running this command in the command prompt.

open_system('IntelligentBinPicking')

Run Intelligent Bin Picking Simulink Model for UR5e Cobot Hardware

Step1: Make sure that the Robot is powered on and running. Create a Polyscope program on teach pendant. Make sure that the teach pedent is in remote control mode.

Create a program, to execute motion and suction gripper commands in a synchronized manner.

If you want to use the Robotiq 2F-85 Two Finger Gripper, create a program as shown here.

Step 2: Click the Initialize Parameters button to set up parameters in the base workspace.

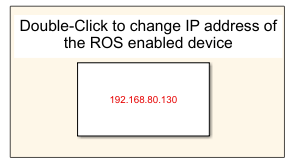

Step 3: You can manually change the IP address of the ROS-enabled machine. Along with the IP address, update other details of the ROS-enabled device as well.

Step 4: Manually update the IP address of the Robot

Step 5: Provide the path to the object detector. Browse and select the path to the downloaded Yolo Object detector MAT file. (Yolo Object detector download link)

Step 6: Ensure that the camera and robot hardware is connected to the host machine. Click the Setup for Hardware block to initiate a connection to the camera and robot hardware.

Click the button to open a dialog box in which you can select the gripper type.

If you want to use Robotiq Epick Suction Gripper, click Vacuum. If you want to use Robotiq 2F-85 Two-Finger Gripper, click Two Finger.

Step 7: Start Simulation.

Run Model with Faster Motion Planning

You can reduce the time taken by the motion planning algorithm by creating a MEX function. Generating a MEX function using C/C++ code generation helps to reduce the computation time and hence reduces the pick and place cycle time.

For more information on how to create a MEX function for the manipulatorRRT algorithm-based planner, see the Generate Code for Manipulator Motion Planning in Perceived Environment example.

For more information on generating MEX function to accelerate your MATLAB program execution, see the Accelerate MATLAB Algorithm by Generating MEX Function example.

Step 1: Create MEX for the exampleHelperCHOMPMotionPlanner function.

Step 2: Select the Enable MEX option in MotionPlannerCHOMPSO block in the Motion Planner subsystem.