Create Egocentric Occupancy Map from Driving Scenario Designer

This example shows how to create an egocentric occupancy map from the Driving Scenario Designer app. It uses obstacle information from a vision detection generator to update the egocentric occupancy map.

This example:

Gets obstacle information and road geometry using a vision detection generator.

Creates an egocentric occupancy map using a binary occupancy map.

Updates the egocentric occupancy map using lane boundaries and obstacle information.

Introduction

Automated driving systems use multiple on-board sensors on the ego vehicle, such as radar, cameras, and lidar, to perceive the surrounding environment. It is important to collate information from these heterogeneous sensors into a common temporal frame of reference, which is usually done using an egocentric occupancy map. This map contains information about the surrounding environment, such as road geometry, free space, and obstacles, and planning algorithms use it for navigation. By periodically updating the information in the egocentric occupancy map, the ego vehicle can respond to dynamic changes in the environment.

This example shows how to use lane and obstacle information obtained from a scenario to create and update an egocentric occupancy map.

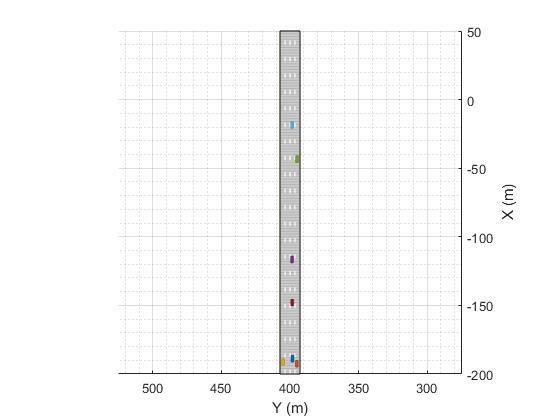

This example uses a straight road scenario designed using the Driving Scenario Designer (DSD) app. For more details, see Driving Scenario Designer (Automated Driving Toolbox). You can also create a driving scenario programmatically. For more details, see Create Driving Scenario Programmatically (Automated Driving Toolbox).

Get Lane Boundaries and Obstacle Information from Driving Scenario

The scenario used in this example is a straight road with four lanes. This scenario has one ego vehicle and six target vehicles, each following its own predefined path. Create the scenario using the helper function exampleHelperCreateStraightRoadScenario.

The scenario used in this example is shown in the following figure.

```matlab
[scenario, egoVehicle] = exampleHelperCreateStraightRoadScenario;
```

This example uses a vision detection generator that simulates a camera sensor mounted at the front of the ego vehicle in the driving scenario. The generator is configured to detect both lane boundaries and obstacles.

For more details, see visionDetectionGenerator (Automated Driving Toolbox).

The vision detection generator is configured to generate detections at 0.1-second intervals (10 Hz), which is consistent with the update rate of typical automotive vision sensors.
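The relationship between the sensor update interval and the scenario time step can be checked with a little arithmetic. According to the visionDetectionGenerator documentation, as we understand it, UpdateInterval must be an integer multiple of the scenario's simulation step, and calls between updates return no new detections. The plain MATLAB sketch below assumes a hypothetical scenario sample time of 0.01 s; the actual sample time is set inside exampleHelperCreateStraightRoadScenario and is not shown in this example.

```matlab
% Hypothetical scenario sample time (assumption, not taken from the helper)
sampleTime = 0.01;        % seconds
updateInterval = 0.1;     % seconds, same as 'UpdateInterval' on the generator

% Number of scenario steps between sensor updates; must be a whole number
stepsPerUpdate = round(updateInterval / sampleTime);
assert(abs(stepsPerUpdate * sampleTime - updateInterval) < 1e-12, ...
    'UpdateInterval should be an integer multiple of the sample time');

% Over the first second of simulation, keep the steps that produce detections
stepIdx = 0:round(1 / sampleTime);
simTimes = stepIdx * sampleTime;
detectionTimes = simTimes(mod(stepIdx, stepsPerUpdate) == 0);

updateRateHz = 1 / updateInterval;
fprintf('%d sensor updates between t = 0 s and t = 1 s (%.0f Hz)\n', ...
    numel(detectionTimes), updateRateHz);
```

With these assumed values, only every tenth scenario step yields new detections.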

Create the actor profiles for the scenario using actorProfiles. The profiles contain the physical and radar profiles of all driving scenario actors, including the ego vehicle. Pass these profiles, along with the other configuration parameters, to the vision detection generator.

```matlab
profiles = actorProfiles(scenario);

% Configure vision detection generator to detect lanes and obstacles
sensor = visionDetectionGenerator('SensorIndex', 1, ...
    'SensorLocation', [1.9 0], ...
    'DetectionProbability', 1, ...
    'MinObjectImageSize', [5 5], ...
    'FalsePositivesPerImage', 0, ...
    'DetectorOutput', 'Lanes and objects', ...
    'Intrinsics', cameraIntrinsics([700 1814],[320 240],[480 640]), ...
    'ActorProfiles', profiles, ...
    'UpdateInterval', 0.1);
```
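The cameraIntrinsics arguments above are the focal length [fx fy] in pixels, the principal point [cx cy] in pixels, and the image size [rows cols]. Under an ideal pinhole model with the principal point at the image center, these values imply the camera's field of view. The plain MATLAB sketch below computes it as a back-of-the-envelope check of the configuration; it does not query the generator object.

```matlab
% Camera parameters copied from the cameraIntrinsics call above
focalLength    = [700 1814];   % [fx fy] in pixels
principalPoint = [320 240];    % [cx cy] in pixels
imageSize      = [480 640];    % [rows cols]

% The symmetric FOV formula below assumes a centered principal point
assert(isequal(principalPoint, imageSize([2 1]) / 2));

% Pinhole model: FOV = 2 * atan(image extent / (2 * focal length))
hfovDeg = 2 * atand(imageSize(2) / (2 * focalLength(1)));   % uses width, fx
vfovDeg = 2 * atand(imageSize(1) / (2 * focalLength(2)));   % uses height, fy
fprintf('Horizontal FOV: %.2f deg, vertical FOV: %.2f deg\n', hfovDeg, vfovDeg);
```

For these values, the horizontal field of view is about 49.1 degrees and the vertical field of view about 15.1 degrees.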

To get detections, call the sensor object at each simulation step. In this example, the helper function exampleHelperGetObstacleDataFromSensor makes this call.

Create an Egocentric Occupancy Map

This example uses a binaryOccupancyMap object to create an egocentric occupancy map.

Create a square occupancy map with 100 meters per side and a resolution of 2 cells per meter. Initialize all cells to the occupied state.
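The grid size follows from the map dimensions and the resolution: each side has 100 m × 2 cells/m = 200 cells, which is why the initial occupancy matrix passed to setOccupancy is ones(200, 200). The plain MATLAB arithmetic below makes this explicit without requiring the toolbox.

```matlab
mapWidth   = 100;   % meters (first argument of binaryOccupancyMap)
mapHeight  = 100;   % meters (second argument)
resolution = 2;     % cells per meter (third argument)

% Grid size in cells, [rows cols] = [height width] * resolution
gridSize = [mapHeight mapWidth] * resolution;
cellSize = 1 / resolution;   % side length of one cell in meters

% Matrix passed to setOccupancy: 1 (occupied) for every cell
initialOccupancy = ones(gridSize);
fprintf('Grid: %d x %d cells, %g m per cell, %d cells in total\n', ...
    gridSize, cellSize, numel(initialOccupancy));
```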

```matlab
egoMap = binaryOccupancyMap(100, 100, 2);
setOccupancy(egoMap, ones(200, 200));
```

By default, the map origin is at the bottom-left corner. Shift the grid origin of egoMap so that the local origin lies at the center of the occupancy map. This converts the occupancy map to an egocentric occupancy map.
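The effect of the GridOriginInLocal assignment can be worked out by hand. The plain MATLAB sketch below reproduces the arithmetic, assuming the default local limits of [0 100] for this 100 m map and that the local limits equal the grid origin plus [0 100] on each axis.

```matlab
% Default local limits of the 100 m x 100 m map (origin at bottom-left)
xLocalLimits = [0 100];
yLocalLimits = [0 100];
mapSize = [100 100];   % [width height] in meters

% Same expression as the GridOriginInLocal assignment for egoMap
gridOriginInLocal = [-xLocalLimits(2)/2, -yLocalLimits(2)/2];

% The grid now spans from the grid origin to grid origin + map size
newXLocalLimits = gridOriginInLocal(1) + [0 mapSize(1)];
newYLocalLimits = gridOriginInLocal(2) + [0 mapSize(2)];

% The local origin (0, 0), where the ego vehicle sits, is now the map center
mapCenter = [mean(newXLocalLimits), mean(newYLocalLimits)];
fprintf('X limits: [%g %g], Y limits: [%g %g], center: (%g, %g)\n', ...
    newXLocalLimits, newYLocalLimits, mapCenter);
```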

```matlab
egoMap.GridOriginInLocal = [-egoMap.XLocalLimits(2)/2, ...
    -egoMap.YLocalLimits(2)/2];
```

Update the Egocentric Occupancy Map with Obstacle Information

Before updating the egoMap, initialize the visualization window. Obstacles in the egoMap visualization are represented as black (occupied) and free space as white (unoccupied).

```matlab
hAxes = exampleHelperSetupVisualization(scenario);
```

Set up a loop that executes the scenario using advance(scenario). At each step, advance(scenario) moves egoVehicle along its path, and the loop reads the updated pose.

Call move on egoMap with the updated pose so that the map stays centered on the ego vehicle. Before each update, reset all cells of the map to the occupied state.
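To see what moving the map does to its extent, the following plain MATLAB sketch tracks the world-frame limits of the 100 m egocentric map as it follows the ego vehicle. It assumes that move(egoMap, xy) places the map's local origin at xy in the world frame, and it ignores any rounding of the origin to whole cells that the toolbox may apply. The ego positions are hypothetical values, not output of the scenario.

```matlab
% Hypothetical ego (x, y) positions in meters at three scenario steps
egoPositions = [ 0.0  0.0;
                12.5 -1.8;
                25.0 -1.8];
gridOriginInLocal = [-50 -50];   % from the egocentric setup of egoMap
mapSize = [100 100];             % [width height] in meters

numSteps = size(egoPositions, 1);
worldXLimits = zeros(numSteps, 2);
worldYLimits = zeros(numSteps, 2);
for k = 1:numSteps
    % Assumed behavior of move(egoMap, xy): the local origin is placed at xy
    localOriginInWorld = egoPositions(k, :);
    % World extent = local origin + grid origin offset + [0 size]
    worldXLimits(k, :) = localOriginInWorld(1) + gridOriginInLocal(1) + [0 mapSize(1)];
    worldYLimits(k, :) = localOriginInWorld(2) + gridOriginInLocal(2) + [0 mapSize(2)];
    fprintf('Step %d: X [%g %g], Y [%g %g]\n', k, worldXLimits(k, :), worldYLimits(k, :));
end
```

Because move receives only an [x y] position, the grid translates with the ego vehicle but its axes stay aligned with the world frame; the ego yaw is used only for visualization.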

```matlab
% Advance the scenario
while advance(scenario)
    % Ego vehicle position
    egoPose = egoVehicle.Position;
    egoYaw = deg2rad(egoVehicle.Yaw);

    % Move the origin of grid to the face of the ego vehicle.
    move(egoMap, [egoPose(1), egoPose(2)]);

    % Reset the egoMap before updating with obstacle information
    setOccupancy(egoMap, ones(egoMap.GridSize));
```

The lane boundary and obstacle information generated by the vision detection generator is used to find the occupied space that must be updated in egoMap.

This example extracts road boundaries from the lane boundaries and treats the region outside the road boundaries as occupied.
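For a straight road aligned with the x-axis, treating the region outside the road boundaries as occupied reduces to a test on each cell's lateral position. The plain MATLAB sketch below builds such a mask for a 100 m egocentric grid at 2 cells per meter. The road border offsets of ±7.2 m (four hypothetical 3.6 m lanes) are assumptions, and this is a simplified standalone illustration, not the logic inside the example's helper functions or part of the simulation loop.

```matlab
resolution = 2;            % cells per meter
halfSize   = 50;           % meters; the egocentric map spans [-50, 50]
cellSize   = 1 / resolution;

% Cell-center coordinates; row 1 is the top of the grid (largest y)
xCenters = (-halfSize + cellSize/2) : cellSize : (halfSize - cellSize/2);
yCenters = (halfSize - cellSize/2) : -cellSize : (-halfSize + cellSize/2);
[~, Y] = meshgrid(xCenters, yCenters);

% Hypothetical road borders: four 3.6 m lanes centered on the ego vehicle
leftBorderY  =  7.2;
rightBorderY = -7.2;

% Cells whose centers lie outside the road borders are marked occupied
outsideRoad = (Y > leftBorderY) | (Y < rightBorderY);
fprintf('%d of %d grid rows lie on the road\n', ...
    nnz(~outsideRoad(:, 1)), size(outsideRoad, 1));
```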

The exampleHelperGetObstacleDataFromSensor helper function extracts road boundaries and obstacle information from the output of the vision detection generator.

```matlab
    [obstacleInfo, roadBorders, isValidLaneTime] = ...
        exampleHelperGetObstacleDataFromSensor(scenario, egoMap, ...
        egoVehicle, sensor);
```

Depending on the range of the vision detection generator, the detections may fall outside the egoMap bounds. Using the egoMap bounds, the exampleHelperFilterObstacles function extracts the obstacles and free space that lie within the egocentric map.
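The bounds check itself amounts to keeping only the detections whose coordinates lie within the map's world limits. The plain MATLAB sketch below shows one way to do this with a logical mask; the detections and ego position are hypothetical, and exampleHelperFilterObstacles may implement the check differently. It is standalone code, not part of the simulation loop.

```matlab
% Hypothetical obstacle detections (x, y) in world coordinates, meters
obstacleXY = [ 10.0   3.5;
               62.0  -1.8;    % beyond the map's +x edge
              -20.0   0.0;
               30.0  55.0];   % beyond the map's +y edge
egoXY = [5 0];                % hypothetical ego position

% World limits of the 100 m egocentric map centered on the ego vehicle
xLimits = egoXY(1) + [-50 50];
yLimits = egoXY(2) + [-50 50];

% Keep only the detections that fall inside the map bounds
inMap = obstacleXY(:, 1) >= xLimits(1) & obstacleXY(:, 1) <= xLimits(2) & ...
        obstacleXY(:, 2) >= yLimits(1) & obstacleXY(:, 2) <= yLimits(2);
obstaclesInMap = obstacleXY(inMap, :);
disp(obstaclesInMap)
```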

```matlab
    [obstaclePoints, unoccupiedSpace] = exampleHelperFilterObstacles( ...
        egoMap, obstacleInfo, ...
        roadBorders, ...
        isValidLaneTime, egoVehicle);
```

Use the filtered obstacle and free space locations to update the egocentric map. Update the visualization.
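The order of the two setOccupancy calls matters: free space is written first and obstacles second, so a cell that is both part of the free space and contains an obstacle ends up occupied. The plain MATLAB sketch below reproduces this with a logical matrix standing in for binaryOccupancyMap. Its world-to-grid conversion (row 1 at the top edge, floor-based binning) is this sketch's own assumption and may differ from the toolbox's world2grid at cell edges; the points are hypothetical. It is standalone code, not part of the simulation loop.

```matlab
resolution = 2;                    % cells per meter
xLimits = [-50 50];                % world limits, ego vehicle at (0, 0)
yLimits = [-50 50];
gridSize = [100 100] * resolution; % 200-by-200 cells

% Logical stand-in for egoMap after the reset: true = occupied
occupancy = true(gridSize);

% Sketch's own world-to-grid conversion: row 1 is the top edge (max y),
% and points on the bottom or right edge are clamped into the grid
toGrid = @(xy) [min(gridSize(1), floor((yLimits(2) - xy(:, 2)) * resolution) + 1), ...
                min(gridSize(2), floor((xy(:, 1) - xLimits(1)) * resolution) + 1)];

% Hypothetical free space ahead of the ego vehicle and one obstacle on it
freeXY     = [(0:0.5:20)', zeros(41, 1)];
obstacleXY = [10 0];

% 1) Mark free space as unoccupied
freeRC = toGrid(freeXY);
occupancy(sub2ind(gridSize, freeRC(:, 1), freeRC(:, 2))) = false;

% 2) Then mark obstacles as occupied, so they override any free space
obsRC = toGrid(obstacleXY);
occupancy(sub2ind(gridSize, obsRC(:, 1), obsRC(:, 2))) = true;

fprintf('%d free cells; obstacle cell occupied: %d\n', ...
    nnz(~occupancy), occupancy(obsRC(1), obsRC(2)));
```

Reversing the two steps would erase the obstacle at (10, 0), because that cell is also covered by the free-space points.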

```matlab
    % Set the occupancy of free space to 0
    if ~isempty(unoccupiedSpace)
        setOccupancy(egoMap, unoccupiedSpace, 0);
    end

    % Set the occupancy of occupied space to 1
    if ~isempty(obstaclePoints)
        setOccupancy(egoMap, obstaclePoints, 1);
    end

    % Update the visualization
    exampleHelperUpdateVisualization(hAxes, egoVehicle, egoPose, egoYaw, egoMap);
end
```

![Binary Occupancy Grid of the egocentric map (X [meters], Y [meters]) alongside the driving scenario plot (X (m), Y (m))](../../examples/nav/win64/CreateEgoCentricOccupancyMapUsingBinaryOccupancyMapExample_02.png)

![Binary Occupancy Grid of the egocentric map (X [meters], Y [meters]) alongside the driving scenario plot (X (m), Y (m))](../../examples/nav/win64/CreateEgoCentricOccupancyMapUsingBinaryOccupancyMapExample_03.png)