Create Occupancy Grid Using Monocular Camera and Semantic Segmentation

This example shows how to estimate free space around a vehicle and create an occupancy grid using semantic segmentation and deep learning. You then use this occupancy grid to create a vehicle costmap, which can be used to plan a path.

About Free Space Estimation

Free space estimation identifies areas in the environment where the ego vehicle can drive without hitting any obstacles such as pedestrians, curbs, or other vehicles. A vehicle can use a variety of sensors to estimate free space such as radar, lidar, or cameras. This example focuses on estimating free space from an image sensor using semantic segmentation.

In this example, you learn how to:

Use semantic image segmentation to estimate free space.

Create an occupancy grid using the free space estimate.

Visualize the occupancy grid on a bird's-eye plot.

Create a vehicle costmap using the occupancy grid.

Check whether locations in the world are occupied or free.

Download Pretrained Network

This example uses a pretrained semantic segmentation network, which can classify pixels into 11 different classes, including Road, Pedestrian, Car, and Sky. The free space in an image can be estimated by defining image pixels classified as Road as free space. All other classes are defined as non-free space or obstacles.

The complete procedure for training this network is shown in the Semantic Segmentation Using Deep Learning (Computer Vision Toolbox) example. Download the pretrained network.

% Download and load the pretrained network. data = downloadPretrainedNetwork('vision/data','segnetVGG16CamVid'); net = data.net;

Note: Download time of the data depends on your Internet connection. The commands used above block MATLAB® until the download is complete. Alternatively, you can use your web browser to first download the data set to your local disk. In this case, to use the file you downloaded from the web, change the pretrainedFolder variable above to the location of the downloaded file.

Estimate Free Space

Estimate free space by processing the image using downloaded semantic segmentation network. The network returns classifications for each image pixel in the image. The free space is identified as image pixels that have been classified as Road.

The image used in this example is a single frame from an image sequence in the CamVid data set[1]. The procedure shown in this example can be applied to a sequence of frames to estimate free space as a vehicle drives along. However, because a very deep convolutional neural network architecture is used in this example (SegNet with a VGG-16 encoder), it takes about 1 second to process each frame. Therefore, for expediency, process a single frame.

% Read the image. I = imread('seq05vd_snap_shot.jpg'); % Segment the image. [C,scores,allScores] = semanticseg(I,net); % Overlay free space onto the image. B = labeloverlay(I,C,'IncludedLabels',"Road"); % Display free space and image. figure imshow(B)

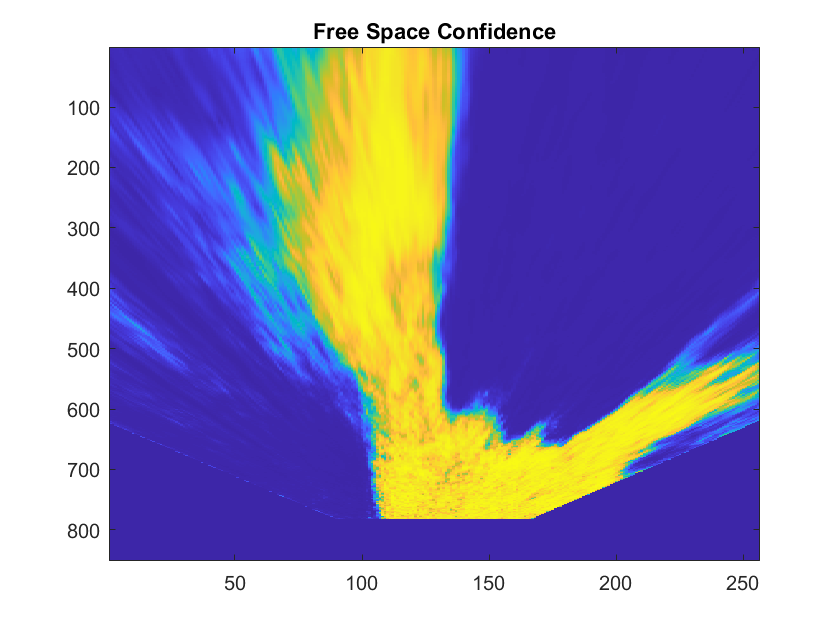

To understand the confidence in the free space estimate, display the output score for the Road class for every pixel. The confidence values can be used to inform downstream algorithms of the estimate's validity. For example, even if the network classifies a pixel as Road, the confidence score may be low enough to disregard that classification for safety reasons.

% Use the network's output score for Road as the free space confidence. roadClassIdx = 4; freeSpaceConfidence = allScores(:,:,roadClassIdx); % Display the free space confidence. figure imagesc(freeSpaceConfidence) title('Free Space Confidence Scores') colorbar

Although the initial segmentation result for Road pixels showed most pixels on the road were classified correctly, visualizing the scores provides richer detail on the classifier's confidence in those classifications. For example, the confidence decreases as you get closer to the boundary of the car.

Create Bird's-Eye-View Image

The free space estimate is generated in the image space. To facilitate generation of an occupancy grid that is useful for navigation, the free space estimate needs to be transformed into the vehicle coordinate system. This can be done by transforming the free space estimate to a bird's-eye-view image.

To create the bird's-eye-view image, first define the camera sensor configuration. The supporting function listed at the end of this example, camvidMonoCameraSensor, returns a monoCamera (Automated Driving Toolbox) object representing the monocular camera used to collect the CamVid[1] data. Configuring the monoCamera (Automated Driving Toolbox) requires the camera intrinsics and extrinsics, which were estimated using data provided in the CamVid data set. To estimate the camera intrinsics, the function used CamVid checkerboard calibration images and the Camera Calibrator (Computer Vision Toolbox) app. Estimates of the camera extrinsics, such as height and pitch, were derived from the extrinsic data estimated by the authors of the CamVid data set.

% Create monoCamera for CamVid data.

sensor = camvidMonoCameraSensor();Given the camera setup, the birdsEyeView (Automated Driving Toolbox) object transforms the original image to the bird's-eye view. This object lets you specify the area that you want transformed using vehicle coordinates. Note that the vehicle coordinate units were established by the monoCamera (Automated Driving Toolbox) object, when the camera mounting height was specified in meters. For example, if the height was specified in millimeters, the rest of the simulation would use millimeters.

% Define bird's-eye-view transformation parameters. distAheadOfSensor = 20; % in meters, as previously specified in monoCamera height input spaceToOneSide = 3; % look 3 meters to the right and left bottomOffset = 0; outView = [bottomOffset, distAheadOfSensor, -spaceToOneSide, spaceToOneSide]; outImageSize = [NaN, 256]; % output image width in pixels; height is chosen automatically to preserve units per pixel ratio birdsEyeConfig = birdsEyeView(sensor,outView,outImageSize);

Generate bird's-eye-view image for the image and free space confidence.

% Resize image and free space estimate to size of CamVid sensor. imageSize = sensor.Intrinsics.ImageSize; I = imresize(I,imageSize); freeSpaceConfidence = imresize(freeSpaceConfidence,imageSize); % Transform image and free space confidence scores into bird's-eye view. imageBEV = transformImage(birdsEyeConfig,I); freeSpaceBEV = transformImage(birdsEyeConfig,freeSpaceConfidence); % Display image frame in bird's-eye view. figure imshow(imageBEV)

Transform the image into a bird's-eye view and generate the free space confidence.

figure

imagesc(freeSpaceBEV)

title('Free Space Confidence')

The areas farther away from the sensor are more blurry, due to having fewer pixels and thus requiring greater amount of interpolation.

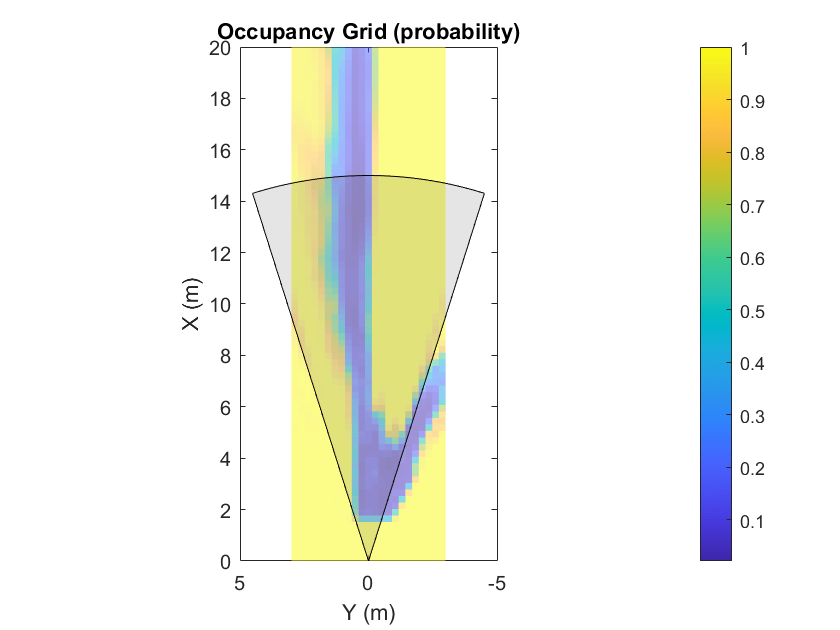

Create Occupancy Grid Based on Free Space Estimation

Occupancy grids are used to represent a vehicle's surroundings as a discrete grid in vehicle coordinates and are used for path planning. Each cell in the occupancy grid has a value representing the probability of the occupancy of that cell. The estimated free space can be used to fill in values of the occupancy grid.

The procedure to fill the occupancy grid using the free space estimate is as follows:

Define the dimensions of the occupancy grid in vehicle coordinates.

Generate a set of (X,Y) points for each grid cell. These points are in the vehicle's coordinate system.

Transform the points from the vehicle coordinate space (X,Y) into the bird's-eye-view image coordinate space (x,y) using the

vehicleToImage(Automated Driving Toolbox) transform.Sample the free space confidence values at (x,y) locations using

griddedInterpolantto interpolate free space confidence values that are not exactly at pixel centers in the image.Fill the occupancy grid cell with the average free space confidence value for all (x,y) points that correspond to that grid cell.

For brevity, the procedure shown above is implemented in the supporting function, createOccupancyGridFromFreeSpaceEstimate, which is listed at the end of this example. Define the dimensions of the occupancy grid in terms of the bird's-eye-view configuration and create the occupancy grid by calling createOccupancyGridFromFreeSpaceEstimate.

% Define dimensions and resolution of the occupancy grid. gridX = distAheadOfSensor; gridY = 2 * spaceToOneSide; cellSize = 0.25; % in meters to match units used by CamVid sensor % Create the occupancy grid from the free space estimate. occupancyGrid = createOccupancyGridFromFreeSpaceEstimate(... freeSpaceBEV, birdsEyeConfig, gridX, gridY, cellSize);

Visualize the occupancy grid using birdsEyePlot (Automated Driving Toolbox). Create a birdsEyePlot (Automated Driving Toolbox) and add the occupancy grid on top using pcolor.

% Create bird's-eye plot. bep = birdsEyePlot('XLimits',[0 distAheadOfSensor],'YLimits', [-5 5]); % Add occupancy grid to bird's-eye plot. hold on [numCellsY,numCellsX] = size(occupancyGrid); X = linspace(0, gridX, numCellsX); Y = linspace(-gridY/2, gridY/2, numCellsY); h = pcolor(X,Y,occupancyGrid); title('Occupancy Grid (probability)') colorbar delete(legend) % Make the occupancy grid visualization transparent and remove grid lines. h.FaceAlpha = 0.5; h.LineStyle = 'none';

The bird's-eye plot can also display data from multiple sensors. For example, add the radar coverage area using coverageAreaPlotter (Automated Driving Toolbox).

% Add coverage area to plot. caPlotter = coverageAreaPlotter(bep, 'DisplayName', 'Coverage Area'); % Update it with a field of view of 35 degrees and a range of 60 meters mountPosition = [0 0]; range = 15; orientation = 0; fieldOfView = 35; plotCoverageArea(caPlotter, mountPosition, range, orientation, fieldOfView); hold off

Displaying data from multiple sensors is useful for diagnosing and debugging decisions made by autonomous vehicles.

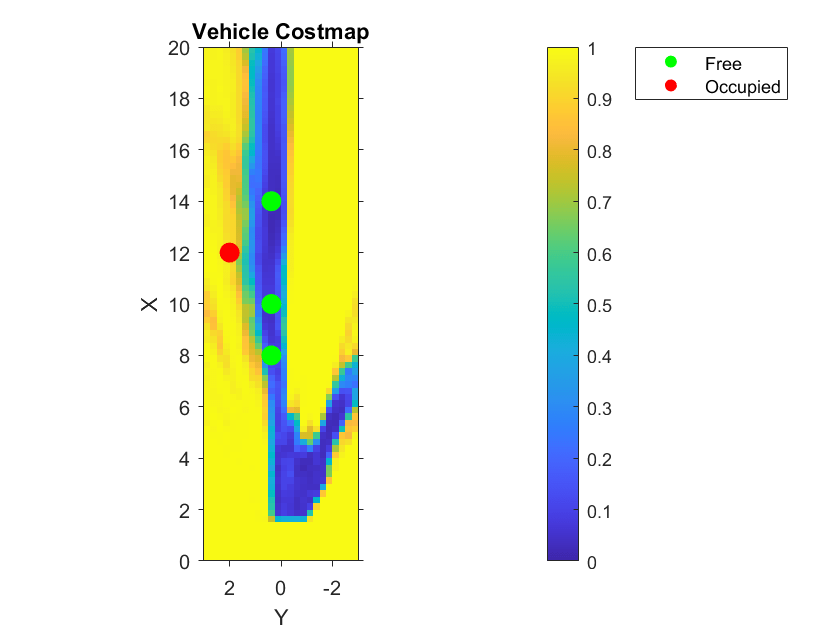

Create Vehicle Costmap Using the Occupancy Grid

The vehicleCostmap (Automated Driving Toolbox) provides functionality to check if locations, in vehicle or world coordinates, are occupied or free. This check is required for any path-planning or decision-making algorithm. Create the vehicleCostmap (Automated Driving Toolbox) using the generated occupancyGrid.

% Create the costmap. costmap = vehicleCostmap(flipud(occupancyGrid), ... 'CellSize',cellSize, ... 'MapLocation',[0,-spaceToOneSide]); costmap.CollisionChecker.InflationRadius = 0; % Display the costmap. figure plot(costmap,'Inflation','off') colormap(parula) colorbar title('Vehicle Costmap') % Orient the costmap so that it lines up with the vehicle coordinate % system, where the X-axis points in front of the ego vehicle and the % Y-axis points to the left. view(gca,-90,90)

To illustrate how to use the vehicleCostmap (Automated Driving Toolbox), create a set of locations in world coordinates. These locations represent a path the vehicle could traverse.

% Create a set of locations in vehicle coordinates.

candidateLocations = [

8 0.375

10 0.375

12 2

14 0.375

];Use checkOccupied (Automated Driving Toolbox) to check whether each location is occupied or free. Based on the results, a potential path might be impossible to follow because it collides with obstacles defined in the costmap.

% Check if locations are occupied. isOccupied = checkOccupied(costmap,candidateLocations); % Partition locations into free and occupied for visualization purposes. occupiedLocations = candidateLocations(isOccupied,:); freeLocations = candidateLocations(~isOccupied,:); % Display free and occupied points on top of costmap. hold on markerSize = 100; scatter(freeLocations(:,1),freeLocations(:,2),markerSize,'g','filled') scatter(occupiedLocations(:,1),occupiedLocations(:,2),markerSize,'r','filled'); legend(["Free" "Occupied"]) hold off

The use of occupancyGrid, vehicleCostmap (Automated Driving Toolbox), and checkOccupied (Automated Driving Toolbox) shown above illustrate the basic operations used by path planners such as pathPlannerRRT (Automated Driving Toolbox). Learn more about path planning in the Automated Parking Valet (Automated Driving Toolbox) example.

References

[1] Brostow, Gabriel J., Julien Fauqueur, and Roberto Cipolla. "Semantic Object Classes in Video: A high-definition ground truth database." Pattern Recognition Letters. Vol. 30, Issue 2, 2009, pp. 88-97.

Supporting Functions

function sensor = camvidMonoCameraSensor() % Return a monoCamera camera configuration based on data from the CamVid % data set[1]. % % The cameraCalibrator app was used to calibrate the camera using the % calibration images provided in CamVid: % % http://web4.cs.ucl.ac.uk/staff/g.brostow/MotionSegRecData/data/CalibrationSeq_and_Files_0010YU.zip % % Calibration pattern grid size is 28 mm. % % Camera pitch is computed from camera pose matrices [R t] stored here: % % http://web4.cs.ucl.ac.uk/staff/g.brostow/MotionSegRecData/data/EgoBoost_trax_matFiles.zip % References % ---------- % [1] Brostow, Gabriel J., Julien Fauqueur, and Roberto Cipolla. "Semantic Object % Classes in Video: A high-definition ground truth database." _Pattern Recognition % Letters_. Vol. 30, Issue 2, 2009, pp. 88-97. calibrationData = load('camera_params_camvid.mat'); % Describe camera configuration. focalLength = calibrationData.cameraParams.FocalLength; principalPoint = calibrationData.cameraParams.PrincipalPoint; imageSize = calibrationData.cameraParams.ImageSize; % Camera height estimated based on camera setup pictured in [1]. height = 0.5; % height in meters from the ground % Camera pitch was computed using camera extrinsics provided in data set. pitch = 0; % pitch of the camera, towards the ground, in degrees camIntrinsics = cameraIntrinsics(focalLength,principalPoint,imageSize); sensor = monoCamera(camIntrinsics,height,'Pitch',pitch); end

function occupancyGrid = createOccupancyGridFromFreeSpaceEstimate(... freeSpaceBEV,birdsEyeConfig,gridX,gridY,cellSize) % Return an occupancy grid that contains the occupancy probability over % a uniform 2-D grid. % Number of cells in occupancy grid. numCellsX = ceil(gridX / cellSize); numCellsY = ceil(gridY / cellSize); % Generate a set of (X,Y) points for each grid cell. These points are in % the vehicle's coordinate system. Start by defining the edges of each grid % cell. % Define the edges of each grid cell in vehicle coordinates. XEdges = linspace(0,gridX,numCellsX); YEdges = linspace(-gridY/2,gridY/2,numCellsY); % Next, specify the number of sample points to generate along each % dimension within a grid cell. Use these to compute the step size in the % X and Y direction. The step size will be used to shift the edge values of % each grid to produce points that cover the entire area of a grid cell at % the desired resolution. % Sample 20 points from each grid cell. Sampling more points may produce % smoother estimates at the cost of additional computation. numSamplePoints = 20; % Step size needed to sample number of desired points. XStep = (XEdges(2)-XEdges(1)) / (numSamplePoints-1); YStep = (YEdges(2)-YEdges(1)) / (numSamplePoints-1); % Finally, slide the set of points across both dimensions of the grid % cells. Sample the occupancy probability along the way using % griddedInterpolant. % Create griddedInterpolant for sampling occupancy probability. Use 1 % minus the free space confidence to represent the probability of occupancy. occupancyProb = 1 - freeSpaceBEV; sz = size(occupancyProb); [y,x] = ndgrid(1:sz(1),1:sz(2)); F = griddedInterpolant(y,x,occupancyProb); % Initialize the occupancy grid to zero. occupancyGrid = zeros(numCellsY*numCellsX,1); % Slide the set of points XEdges and YEdges across both dimensions of the % grid cell. for j = 1:numSamplePoints % Increment sample points in the X-direction X = XEdges + (j-1)*XStep; for i = 1:numSamplePoints % Increment sample points in the Y-direction Y = YEdges + (i-1)*YStep; % Generate a grid of sample points in bird's-eye-view vehicle coordinates [XGrid,YGrid] = meshgrid(X,Y); % Transform grid of sample points to image coordinates xy = vehicleToImage(birdsEyeConfig,[XGrid(:) YGrid(:)]); % Clip sample points to lie within image boundaries xy = max(xy,1); xq = min(xy(:,1),sz(2)); yq = min(xy(:,2),sz(1)); % Sample occupancy probabilities using griddedInterpolant and keep % a running sum. occupancyGrid = occupancyGrid + F(yq,xq); end end % Determine mean occupancy probability. occupancyGrid = occupancyGrid / numSamplePoints^2; occupancyGrid = reshape(occupancyGrid,numCellsY,numCellsX); end