Download and unzip the air compressor data set [1]. This data set consists of recordings from air compressors in a healthy state or in one of seven faulty states.
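A minimal sketch of the download step follows. It assumes the data set is hosted as a MathWorks support file and uses `matlab.internal.examples.downloadSupportFile`, an internal helper that MathWorks examples commonly rely on; the support-file path and the `tempdir` unzip location are assumptions, so adjust them to wherever your copy of the data lives.

```matlab
% Assumed support-file path; replace with your own zip file if you
% obtained the data set another way.
zipFile = matlab.internal.examples.downloadSupportFile( ...
    "audio","AirCompressorDataset/AirCompressorDataset.zip");

% Unzip into a temporary folder (assumed location).
dataFolder = tempdir;
unzip(zipFile,dataFolder)

% Folder that is expected to contain one subfolder per compressor state.
dataset = fullfile(dataFolder,"AirCompressorDataset");
```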

Create an audioDatastore object to manage the data and split it into training and validation sets.
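One way to set this up is sketched below. It assumes `dataset` is the path to the unzipped data folder and that each class is stored in its own subfolder, so the folder names can serve as labels. The 80/20 split ratio is an assumption, not a value stated in this example.

```matlab
% dataset: path to the unzipped air compressor data (assumed to exist).
ads = audioDatastore(dataset, ...
    IncludeSubfolders=true, ...
    LabelSource="foldernames");

% Put 80% of the files for each label in the training set (assumed ratio);
% the remaining files go to the validation set.
[adsTrain,adsValidation] = splitEachLabel(ads,0.8);

% Inspect how many files each label has in the two sets.
countEachLabel(adsTrain)
countEachLabel(adsValidation)
```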

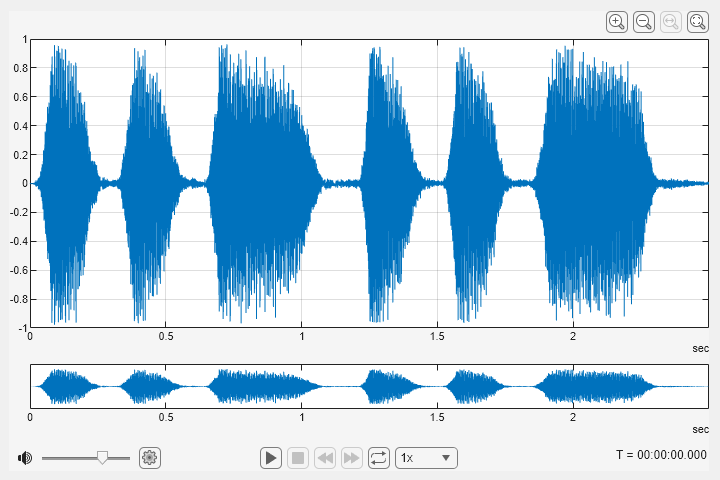

Read an audio file from the datastore and save the sample rate for later use. Reset the datastore to return the read pointer to the beginning of the data set. Listen to the audio signal and plot the signal in the time domain.
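These steps might look like the following sketch, which assumes `adsTrain` is the training datastore created by the split. The variable names and plot labels are illustrative.

```matlab
% Read the first file and keep its sample rate.
[x,fileInfo] = read(adsTrain);
fs = fileInfo.SampleRate;

% Return the read pointer to the start of the datastore.
reset(adsTrain)

% Listen to the recording.
sound(x,fs)

% Plot the signal against time in seconds.
t = (0:size(x,1)-1)/fs;
figure
plot(t,x)
xlabel("Time (s)")
ylabel("Amplitude")
title("State = " + string(fileInfo.Label))
axis tight
```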

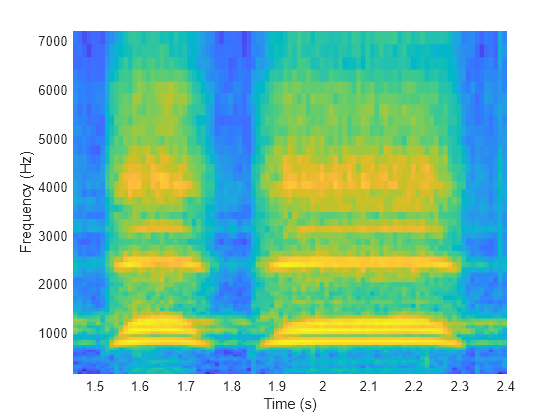

Extract Mel spectrograms from the training set using yamnetPreprocess. Each audio signal yields multiple spectrograms, so replicate the labels to keep them in one-to-one correspondence with the spectrograms.
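The extraction loop can be sketched as follows, assuming `adsTrain` is the training datastore. `yamnetPreprocess` returns the spectrograms stacked along the fourth dimension, so the number of spectrograms per file is read from `size(features,4)`. Collecting the per-file results in cell arrays before concatenating is a design choice made here to avoid growing large arrays inside the loop.

```matlab
numFiles = numel(adsTrain.Files);
featureCell = cell(1,numFiles);
labelCell = cell(numFiles,1);

reset(adsTrain)
for k = 1:numFiles
    [audioIn,fileInfo] = read(adsTrain);

    % Mel spectrograms for this file, stacked along dimension 4.
    features = yamnetPreprocess(audioIn,fileInfo.SampleRate);
    numSpectrograms = size(features,4);

    featureCell{k} = features;
    % One copy of the file label per spectrogram.
    labelCell{k} = repmat(fileInfo.Label,numSpectrograms,1);
end

trainFeatures = cat(4,featureCell{:});
trainLabels = cat(1,labelCell{:});   % categorical column vector
```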

Extract features from the validation set and replicate the labels.
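For the validation set the same idea applies. The sketch below assumes `adsValidation` is the validation datastore and, for brevity, appends to the arrays inside a `while hasdata` loop.

```matlab
validationFeatures = [];
validationLabels = [];

reset(adsValidation)
while hasdata(adsValidation)
    [audioIn,fileInfo] = read(adsValidation);
    features = yamnetPreprocess(audioIn,fileInfo.SampleRate);

    % Append the spectrograms and one label per spectrogram.
    validationFeatures = cat(4,validationFeatures,features);
    validationLabels = [validationLabels; ...
        repmat(fileInfo.Label,size(features,4),1)]; %#ok<AGROW>
end
```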

The air compressor data set has only 8 classes. Call audioPretrainedNetwork with NumClasses set to 8 to load a pretrained YAMNet network with the desired number of output classes for transfer learning.
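As a short sketch, the call looks like this. The variable name `net` is illustrative.

```matlab
% Number of compressor states in the data set.
numClasses = 8;

% Load YAMNet with its output layers sized for numClasses classes.
net = audioPretrainedNetwork("yamnet",NumClasses=numClasses);
```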

To define training options, use trainingOptions.
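The following sketch shows one plausible configuration. It assumes `trainLabels`, `validationFeatures`, and `validationLabels` come from the feature extraction steps, with the labels stored as categorical column vectors. Every hyperparameter value (solver, learning rate, number of epochs, mini-batch size) is an assumption chosen for illustration and should be tuned for your data.

```matlab
miniBatchSize = 128;   % assumed value

% Validate roughly once per epoch.
validationFrequency = floor(numel(trainLabels)/miniBatchSize);

options = trainingOptions("adam", ...
    InitialLearnRate=3e-4, ...          % assumed value
    MaxEpochs=2, ...                    % assumed value
    MiniBatchSize=miniBatchSize, ...
    Shuffle="every-epoch", ...
    Plots="training-progress", ...
    Metrics="accuracy", ...
    Verbose=false, ...
    ValidationData={single(validationFeatures),validationLabels}, ...
    ValidationFrequency=validationFrequency);
```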

To train the network, use trainnet.
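A training call might look like the sketch below. It assumes `trainFeatures` and `trainLabels` hold the training spectrograms and their replicated labels, `net` is the pretrained YAMNet network, and `options` is the training options object. The cross-entropy loss is an assumption: it is the usual choice for single-label classification but is not named in this example.

```matlab
% Train on the spectrograms with a cross-entropy loss (assumed loss).
airCompressorNet = trainnet( ...
    single(trainFeatures),trainLabels,net,"crossentropy",options);
```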

Save the trained network to airCompressorNet.mat. You can now use this pretrained network by loading the airCompressorNet.mat file.
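A sketch of saving and later reloading the network, assuming the trained network is stored in a variable named `airCompressorNet`:

```matlab
% Save the trained network to a MAT file in the current folder.
save("airCompressorNet.mat","airCompressorNet")

% Later, for example in a new session, load it back.
loaded = load("airCompressorNet.mat");
airCompressorNet = loaded.airCompressorNet;
```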

References

[1] Verma, Nishchal K., et al. “Intelligent Condition Based Monitoring Using Acoustic Signals for Air Compressors.” IEEE Transactions on Reliability, vol. 65, no. 1, Mar. 2016, pp. 291–309. https://doi.org/10.1109/TR.2015.2459684.