retrieveImages

Search image set for similar image

Syntax

imageIDs = retrieveImages(queryImage,imageIndex)

[imageIDs,scores] = retrieveImages(queryImage,imageIndex)

[imageIDs,scores,imageWords] = retrieveImages(queryImage,imageIndex)

[imageIDs,___] = retrieveImages(queryImage,imageIndex,Name,Value)

Description

imageIDs = retrieveImages(queryImage,imageIndex) returns the imageIDs that correspond to images within imageIndex that are visually similar to the query image. The imageIDs are returned in ranked order, from the most to least similar match.

[imageIDs,scores] = retrieveImages(queryImage,imageIndex) optionally returns the similarity scores used to rank the image retrieval results. The scores output contains the corresponding scores from 0 to 1.

[imageIDs,scores,imageWords] = retrieveImages(queryImage,imageIndex) optionally returns the visual words in queryImage that are used to search for similar images.

[imageIDs,___] = retrieveImages(queryImage,imageIndex,Name,Value) uses additional options specified by one or more Name,Value pair arguments, using any of the preceding syntaxes.
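As an illustration of the two-output syntax, the following sketch keeps only the results whose similarity score clears a cutoff. It uses the book-cover images that ship with the toolbox. The names minScore, keep, goodIDs, and goodScores, and the 0.1 cutoff, are assumptions chosen for illustration, not recommended values.

```matlab
% Build an index over the book-cover images that ship with the toolbox.
dataDir = fullfile(toolboxdir('vision'),'visiondata','bookCovers');
bookCovers = imageDatastore(dataDir);
imageIndex = indexImages(bookCovers,'Verbose',false);

% Retrieve at most five matches together with their similarity scores.
queryImage = imread(fullfile(dataDir,'queries','query3.jpg'));
[imageIDs,scores] = retrieveImages(queryImage,imageIndex,'NumResults',5);

% Keep only the matches at or above a hypothetical cutoff (assumed value).
minScore = 0.1;
keep = scores >= minScore;
goodIDs = imageIDs(keep);
goodScores = scores(keep);
```

Because the results are already ranked, goodIDs stays in order from the most to least similar match. A suitable cutoff depends on the data set and is best chosen by experiment.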

Examples

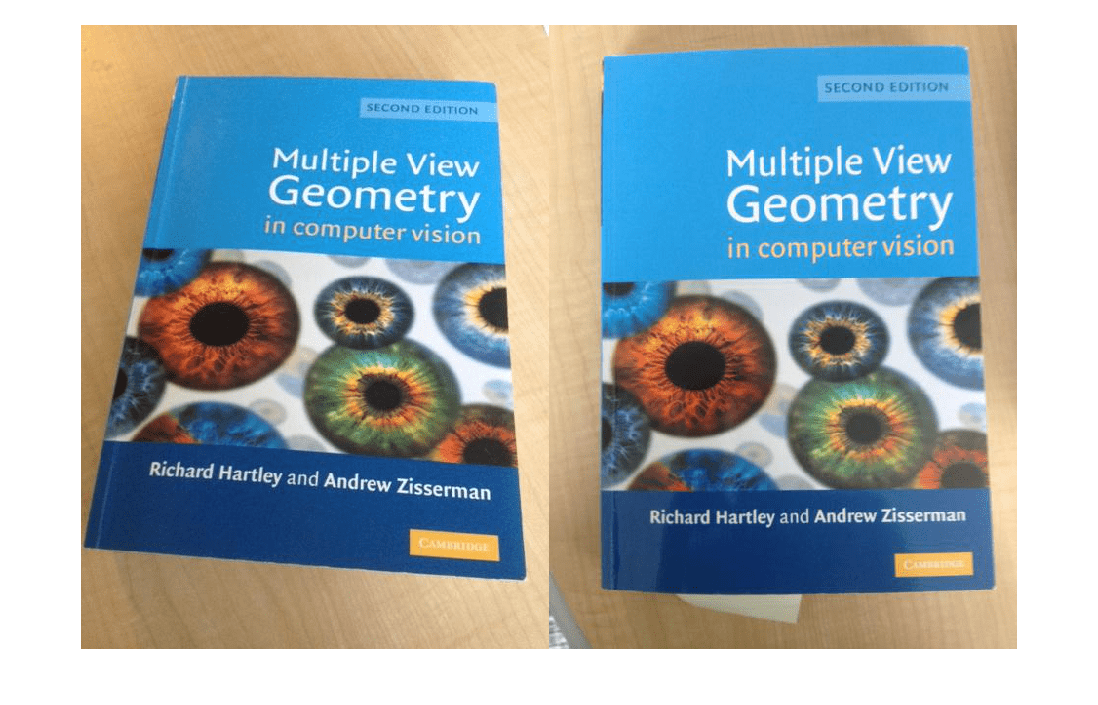

Create an image set of book covers.

dataDir = fullfile(toolboxdir('vision'),'visiondata','bookCovers');
bookCovers = imageDatastore(dataDir);

Display the data set.

thumbnailGallery = [];
for i = 1:length(bookCovers.Files)
    I = readimage(bookCovers,i);
    thumbnail = imresize(I,[300 300]);
    thumbnailGallery = cat(4,thumbnailGallery,thumbnail);
end

figure
montage(thumbnailGallery);

Index the image set. This step may take a few minutes.

imageIndex = indexImages(bookCovers);

Creating an inverted image index using Bag-Of-Features.
-------------------------------------------------------

Creating Bag-Of-Features.
-------------------------

* Selecting feature point locations using the Detector method.
* Extracting SURF features from the selected feature point locations.
** detectSURFFeatures is used to detect key points for feature extraction.

* Extracting features from 58 images...done. Extracted 29216 features.

* Keeping 80 percent of the strongest features from each category.

* Balancing the number of features across all image categories to improve clustering.
** Image category 1 has the least number of strongest features: 23373.
** Using the strongest 23373 features from each of the other image categories.

* Creating a 20000 word visual vocabulary.
* Number of levels: 1
* Branching factor: 20000
* Number of clustering steps: 1

* [Step 1/1] Clustering vocabulary level 1.
* Number of features : 23373
* Number of clusters : 20000
* Initializing cluster centers...100.00%.
* Clustering...completed 5/100 iterations (~0.51 seconds/iteration)...converged in 5 iterations.

* Finished creating Bag-Of-Features

Encoding images using Bag-Of-Features.
--------------------------------------

* Encoding 58 images...done.
Finished creating the image index.

Select the query image and retrieve the matching images.

queryDir = fullfile(dataDir,'queries',filesep);
queryImage = imread([queryDir 'query3.jpg']);

imageIDs = retrieveImages(queryImage,imageIndex);

Show the query image and its best match, side-by-side.

bestMatch = imageIDs(1);

bestImage = imread(imageIndex.ImageLocation{bestMatch});

figure

imshowpair(queryImage,bestImage,'montage')

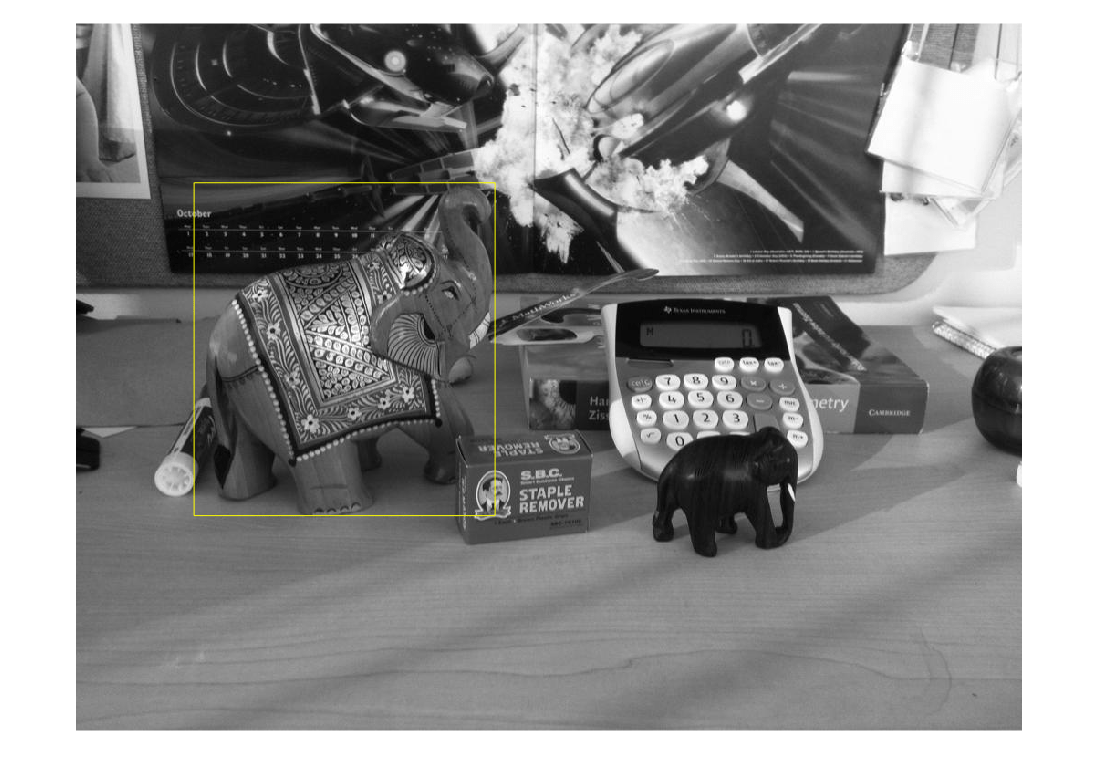

Search an image set for an object using a region of interest (ROI) for the query image.

Define a set of images to search.

imageFiles = ...
    {'elephant.jpg', 'cameraman.tif', ...
    'peppers.png', 'saturn.png', ...
    'pears.png', 'stapleRemover.jpg', ...
    'football.jpg', 'mandi.tif', ...
    'kids.tif', 'liftingbody.png', ...
    'office_5.jpg', 'gantrycrane.png', ...
    'moon.tif', 'circuit.tif', ...
    'tape.png', 'coins.png'};

imds = imageDatastore(imageFiles);

Create a search index.

imageIndex = indexImages(imds);

Creating an inverted image index using Bag-Of-Features.
-------------------------------------------------------

Creating Bag-Of-Features.
-------------------------

* Selecting feature point locations using the Detector method.
* Extracting SURF features from the selected feature point locations.
** detectSURFFeatures is used to detect key points for feature extraction.

* Extracting features from 16 images...done. Extracted 3680 features.

* Keeping 80 percent of the strongest features from each category.

* Balancing the number of features across all image categories to improve clustering.
** Image category 1 has the least number of strongest features: 2944.
** Using the strongest 2944 features from each of the other image categories.

* Creating a 2944 word visual vocabulary.
* Number of levels: 1
* Branching factor: 2944
* Number of clustering steps: 1

* [Step 1/1] Clustering vocabulary level 1.
* Number of features : 2944
* Number of clusters : 2944
* Initializing cluster centers...100.00%.
* Clustering...completed 1/100 iterations (~0.02 seconds/iteration)...converged in 1 iterations.

* Finished creating Bag-Of-Features

Encoding images using Bag-Of-Features.
--------------------------------------

* Encoding 16 images...done.
Finished creating the image index.

Specify a query image and an ROI. The ROI outlines the object, an elephant, for the search.

queryImage = imread('clutteredDesk.jpg');
queryROI = [130 175 330 365];

figure
imshow(queryImage)
rectangle('Position',queryROI,'EdgeColor','yellow')

You can also use the imrect function to select an ROI interactively. For example, queryROI = getPosition(imrect)

Find images that contain the object.

imageIDs = retrieveImages(queryImage,imageIndex,'ROI',queryROI)

imageIDs = 12×1 uint32 column vector

1
11
6
12
2
3
8
5
14
13
10
16
⋮

Display the best match.

bestMatch = imageIDs(1);

figure

imshow(imageIndex.ImageLocation{bestMatch})

Use the locations of visual words to verify the best search result. To rerank the search results based on geometric information, repeat this procedure for the top N search results.

Specify the location of the images.

dataDir = fullfile(toolboxdir('vision'),'visiondata','bookCovers');
bookCovers = imageDatastore(dataDir);

Index the image set. This process can take a few minutes.

imageIndex = indexImages(bookCovers);

Creating an inverted image index using Bag-Of-Features.
-------------------------------------------------------

Creating Bag-Of-Features.
-------------------------

* Selecting feature point locations using the Detector method.
* Extracting SURF features from the selected feature point locations.
** detectSURFFeatures is used to detect key points for feature extraction.

* Extracting features from 58 images...done. Extracted 29216 features.

* Keeping 80 percent of the strongest features from each category.

* Balancing the number of features across all image categories to improve clustering.
** Image category 1 has the least number of strongest features: 23373.
** Using the strongest 23373 features from each of the other image categories.

* Creating a 20000 word visual vocabulary.
* Number of levels: 1
* Branching factor: 20000
* Number of clustering steps: 1

* [Step 1/1] Clustering vocabulary level 1.
* Number of features : 23373
* Number of clusters : 20000
* Initializing cluster centers...100.00%.
* Clustering...completed 5/100 iterations (~1.42 seconds/iteration)...converged in 5 iterations.

* Finished creating Bag-Of-Features

Encoding images using Bag-Of-Features.
--------------------------------------

* Encoding 58 images...done.
Finished creating the image index.

Select and display the query image.

queryDir = fullfile(dataDir,'queries',filesep);
queryImage = imread([queryDir 'query3.jpg']);

figure
imshow(queryImage)

Retrieve the best matches. The queryWords output contains the visual word location information for the query image. Use this information to verify the search results.

[imageIDs, ~, queryWords] = retrieveImages(queryImage,imageIndex);

Find the best match for the query image by extracting the visual words from the image index. The image index contains the visual word information for all images in the index.

bestMatch = imageIDs(1);

bestImage = imread(imageIndex.ImageLocation{bestMatch});

bestMatchWords = imageIndex.ImageWords(bestMatch);

Generate a set of tentative matches based on visual word assignments. Each visual word in the query can have multiple matches due to the hard quantization used to assign visual words.

queryWordsIndex = queryWords.WordIndex;
bestMatchWordIndex = bestMatchWords.WordIndex;

tentativeMatches = [];
for i = 1:numel(queryWords.WordIndex)
    idx = find(queryWordsIndex(i) == bestMatchWordIndex);
    matches = [repmat(i, numel(idx), 1) idx];
    tentativeMatches = [tentativeMatches; matches];
end
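The pairing rule is easier to see on a small made-up example. The sketch below builds the same [query index, best-match index] pairs without a loop, using toy word indices in place of the WordIndex values from a real index. The variable names sameWord, bestIdx, and queryIdx are illustrative assumptions.

```matlab
% Toy visual word indices (made-up values, not real index output).
queryWordsIndex    = [3; 7; 7; 2];
bestMatchWordIndex = [7; 2; 7; 9];

% Compare every best-match word (rows) with every query word (columns).
% Implicit expansion requires R2016b or later.
sameWord = bestMatchWordIndex == queryWordsIndex.';

% Each true entry is one tentative match. find scans column by column,
% so the pairs come out grouped by query word.
[bestIdx,queryIdx] = find(sameWord);
tentativeMatches = [queryIdx bestIdx];
```

In this toy data, word 7 occurs twice in each image, so those words alone produce four of the five tentative matches. This is the one-to-many behavior that makes geometric verification worthwhile. The comparison matrix has one entry per pair of words, so for very large word lists a loop can use less memory.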

Show the point locations for the tentative matches. There are many poor matches.

points1 = queryWords.Location(tentativeMatches(:,1),:);

points2 = bestMatchWords.Location(tentativeMatches(:,2),:);

figure

showMatchedFeatures(queryImage,bestImage,points1,points2,'montage')

Remove poor visual word assignments using the estimateGeometricTransform2D function. Keep the assignments that fit a valid geometric transform.

[tform,inlierIdx] = ...
    estimateGeometricTransform2D(points1,points2,'affine',...
    'MaxNumTrials',2000);

inlierPoints1 = points1(inlierIdx, :);
inlierPoints2 = points2(inlierIdx, :);

Rerank the search results by the percentage of inliers. Do this when the geometric verification procedure is applied to the top N search results. Those images with a higher percentage of inliers are more likely to be relevant.

percentageOfInliers = size(inlierPoints1,1)./size(points1,1);

figure
showMatchedFeatures(queryImage,bestImage,inlierPoints1,...
    inlierPoints2,'montage')
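Once an inlier fraction is available for each of the top N results, the reranking itself is a sort. The following sketch assumes the verification has already been run on three candidates. The IDs and inlier fractions are made-up values, and candidateIDs, inlierFraction, and rerankedIDs are hypothetical names.

```matlab
% Hypothetical results of running the verification on the top three matches.
candidateIDs   = uint32([12; 4; 31]);   % IDs in their original ranked order
inlierFraction = [0.18; 0.62; 0.05];    % made-up inlier fractions, one per ID

% Sort by inlier fraction, highest first, and reorder the IDs to match.
[sortedFraction,order] = sort(inlierFraction,'descend');
rerankedIDs = candidateIDs(order);
```

Here the image that was originally ranked second moves to the top because most of its tentative matches fit a single transform.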

Apply the estimated transform.

outputView = imref2d(size(bestImage));
Ir = imwarp(queryImage, tform, 'OutputView', outputView);

figure
imshowpair(Ir,bestImage,'montage')

Use the evaluateImageRetrieval function to help select proper search parameters.

Create an image set.

setDir = fullfile(toolboxdir('vision'),'visiondata','imageSets','cups');
imds = imageDatastore(setDir, 'IncludeSubfolders', true, 'LabelSource', 'foldernames');

Index the image set.

imageIndex = indexImages(imds,'Verbose',false);

Tune image search parameters.

imageIndex.MatchThreshold = 0.2;
imageIndex.WordFrequencyRange = [0 1]

imageIndex =

invertedImageIndex with properties:

ImageLocation: {6×1 cell}

ImageWords: [6×1 vision.internal.visualWords]

WordFrequency: [0.1667 0.1667 0.1667 0.3333 0.1667 0.1667 0.1667 0.5000 0.3333 0.1667 0.3333 0.1667 0.1667 0.1667 0.1667 0.1667 0.1667 0.1667 0.1667 0.3333 0.1667 0.1667 0.1667 0.1667 0.1667 0.1667 0.1667 0.1667 0.1667 0.1667 … ] (1×1366 double)

BagOfFeatures: [1×1 bagOfFeatures]

ImageID: [1 2 3 4 5 6]

MatchThreshold: 0.2000

WordFrequencyRange: [0 1]

queryImage = readimage(imds, 1);
indices = retrieveImages(queryImage,imageIndex);

Input Arguments

queryImage: Input query image, specified as either an M-by-N-by-3 truecolor image or an M-by-N 2-D grayscale image.

Data Types: single | double | int16 | uint8 | uint16 | logical

imageIndex: Image search index, specified as an invertedImageIndex object. The indexImages function creates the invertedImageIndex object, which stores the data used for the image search.

Name-Value Arguments

Specify optional pairs of arguments as Name1=Value1,...,NameN=ValueN, where Name is the argument name and Value is the corresponding value. Name-value arguments must appear after other arguments, but the order of the pairs does not matter.

Before R2021a, use commas to separate each name and value, and enclose Name in quotes.

Example: 'NumResults',25 sets the 'NumResults' property to 25.

Maximum number of results to return, specified as the comma-separated pair consisting of 'NumResults' and a numeric value. Set this value to Inf to return as many matching images as possible.

Query image search region, specified as the comma-separated pair consisting of 'ROI' and an [x y width height] vector.

Similarity metric used to rank the image retrieval results, specified as 'cosine' or 'L1' [3].
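To give a feel for how the two metrics differ, the following sketch scores a pair of toy visual word histograms both ways. The L1 score follows the definition given in [3]. Whether retrieveImages applies additional weighting before scoring is not stated here, so treat this as a conceptual sketch with made-up data and illustrative variable names, not as the function's implementation.

```matlab
% Toy visual word histograms for two images (made-up counts).
a = [2 0 1 0];
b = [1 0 1 1];

% Cosine similarity: 1 for identical directions, 0 for no shared words.
cosineScore = dot(a,b) / (norm(a) * norm(b));

% L1 score as defined in [3]: normalize each vector by its L1 norm, then
% map the L1 distance (which lies in [0, 2]) onto a score in [0, 1].
aL1 = a / norm(a,1);
bL1 = b / norm(b,1);
l1Score = 1 - 0.5 * norm(aL1 - bL1, 1);
```

For nonnegative histograms, both scores lie between 0 and 1, and a higher value means a closer match. The two metrics can still rank the same candidates differently.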

Output Arguments

imageIDs: Ranked index of retrieved images, returned as an M-by-1 vector. The image IDs are returned in ranked order, from the most to least similar matched image.

imageWords: Object for storing visual word assignments, returned as a visualWords object. The object stores the visual word assignments of queryImage and their locations within that image.

References

[1] Sivic, J., and A. Zisserman. Video Google: A text retrieval approach to object matching in videos. ICCV (2003): 1470-1477.

[2] Philbin, J., O. Chum, M. Isard, J. Sivic, and A. Zisserman. Object retrieval with large vocabularies and fast spatial matching. CVPR (2007).

[3] Gálvez-López, Dorian, and Juan D. Tardos. Bags of binary words for fast place recognition in image sequences. IEEE Transactions on Robotics 28.5 (2012): 1188-1197.

Extended Capabilities

C/C++ Code Generation

Generate C and C++ code using MATLAB® Coder™.

Version History

Introduced in R2015a
