Train Deep-Learning-Based CHOMP Optimizer for Motion Planning

This example shows you how to train a dlCHOMP optimizer for motion planning in a complex spherical obstacle environment.

This example shows you how to set data generation parameters to generate a spherical obstacle environment, generate a training dataset using these parameters, train the dlCHOMP optimizer and then generate code to use it for motion planning for the KUKA LBR iiwa 7 robot in a new obstacle environment.

To see pretrained networks for other robots, see Pretrained Optimizers.

Overview

Deep Learning guess-powered Covariant Hamiltonian Optimization for Motion Planning (DLCHOMP) is a trajectory-optimization-based motion planner that optimizes neural-network-predicted trajectories to make the trajectories smoother and avoid obstacles in the environment. It does this by minimizing a cost function, comprised of a smoothness cost and a collision cost. It is similar to the manipulatorCHOMP object but dlCHOMP enables you to achieve faster optimization times for robots operating in relatively-static environments by training the optimizer.

This example shows you how to load a manipulator robot, generate a complex collision geometries as spherical obstacles around the robot for DLCHOMP, and visualize the resulting optimized trajectories. This example assumes that you have some familiarity with the manipulatorCHOMP object.

Create dlCHOMP Optimizer

To create a dlCHOMP object that meets our requirements, first determine the robot to work with. For instance, let's consider the kukaIiwa7 robot.

kuka = loadrobot("kukaIiwa7",DataFormat="row");

Then, determine the basis point set encoding parameters to be used to encode the obstacle environment of interest and create a bpsEncoder object accordingly. In this instance, use the uniform-ball-3d arrangement of 10,000 points for the encoder. Associate a seed value with a random number generator to ensure that each run gives the same random arrangement of points for the encoder.

seed = 100; rng(seed,"twister"); encoder = bpsEncoder("uniform-ball-3d",10000);

Finally, set the number of waypoints desired in the optimized trajectory output of dlCHOMP.

numWaypoints = 40;

Now construct a dlCHOMP object using the loaded robot kuka, the environment encoder encoder and the desired number of waypoints numWaypoints.

dlchomp = dlCHOMP(kuka,encoder,numWaypoints);

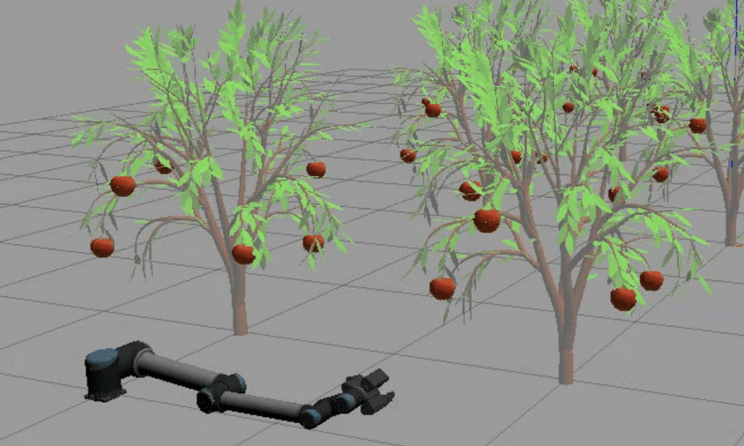

Assume that a manipulatorCHOMP optimizer object is available to work in our apple-picking target environment of interest.

So, set the CollisionOptions, SmoothnessOptions and SolverOptions properties of the dlCHOMP optimizer to be the same as that of the equivalent manipulatorCHOMP optimizer that works. For more information on choosing the right optimizer options, refer to the Tune Optimizer section of the Pick-And-Place Workflow Using CHOMP for Manipulators example.

dlchomp.CollisionOptions = chompCollisionOptions(CollisionCostWeight=120, ... IgnoreSelfCollision=false); dlchomp.SolverOptions = chompSolverOptions(LearningRate=2, ... MaxIterations=200, ... FunctionTolerance=1e-4); dlchomp.SmoothnessOptions = chompSmoothnessOptions(SmoothnessCostWeight=0.001);

Determine Dataset Generation Parameters

For generating data to train the DLCHOMP optimizer, you must use a dlCHOMPDataOptions object to specify dataset generation parameters. Consider the problem of fruit-picking using a manipulator arm. In this application, you must determine parameters based on the size of tree branches and fruits.

In this image, assume the fruits to be spheres having a radius range between 5 cm to 10 cm.

Create a dlCHOMPDataOptions object.

dataOptions = dlCHOMPDataOptions;

Next, you must set obstacles that determine environment complexity.

Set a range of possible radii for the spherical obstacles to be between 5cm and 10cm.

dataOptions.RadiusRangeOfObstacles = [0.05 0.10];

Set the number of obstacles to be between 20 and 25 spheres. This is assuming that there are about 20 to 25 fruits within reach of the manipulator.

dataOptions.CountRangeOfObstacles = [20 25];

Set the minimum distance that spheres can be from the base to 21 cm.

dataOptions.MinDistanceFromBase = 0.21;

Set the number of samples to generate to 5,000 samples.

dataOptions.NumSamples = 5000;

Set the validation split such that you keep 20% of the generated data set for validating the trained optimizer and using the rest for training the optimizer.

dataOptions.ValidationSplit = 0.2;

For more information about these parameters, see dlCHOMP.

Generate Training and Validation Data Samples

Next, you need training and validation data sets. Note that generating training and validation data takes a long time. In this example, generating 5,000 samples takes approximately 21 hours on a Windows 10 system with 48 GB of RAM. In this section you have the option of either downloading a dataset, generated specifically for this example, or generating the data yourself.

Set generateData to false to download a generated dataset.

generateData = false; dataSamplesFolder = "allData"; if generateData == false dlCHOMPComponent = "rst/data/dlCHOMP/R2024a"; dataSamplesFilename = "kukaIiwa7DataForTrainingDLCHOMP.zip"; disp("Downloading previously generated kukaIiwa7 DLCHOMP data (1 GB)..."); dataSamplesFolder = exampleHelperDownloadData(dlCHOMPComponent, ... dataSamplesFilename, 'DataFolder',dataSamplesFolder); validIndices = 1:floor(dataOptions.ValidationSplit*5e3); trainIndices = (numel(validIndices)+1):5e3; validDS = dlCHOMPDatastore(dataSamplesFolder,validIndices); trainDS = dlCHOMPDatastore(dataSamplesFolder,trainIndices); else rng(seed,"twister"); [trainDS,validDS] = generateSamples(dlchomp,dataOptions,dataSamplesFolder); end

Downloading previously generated kukaIiwa7 DLCHOMP data (1 GB)...

Train dlCHOMP Optimizer

Next step is to train the dlCHOMP optimizer object using the data that has been obtained.

Create training options using the validation datastore object validDS obtained earlier. Choose appropriate training options to train the regression network present inside the dlCHOMP optimizer object. For more information on choosing the right training options, see trainingOptions (Deep Learning Toolbox). Also identify the appropriate loss type for training the dlCHOMP object. For more information about the supported loss types, see trainDLCHOMP.

These values work best for the current dataset.

trainOptions = trainingOptions("adam", ... "MaxEpochs",200, ... "MiniBatchSize",256, ... "InitialLearnRate",0.001, ... "LearnRateDropFactor",0.9, ... "LearnRateDropPeriod",10, ... "LearnRateSchedule","piecewise", ... "ValidationData",validDS, ... "ExecutionEnvironment","parallel", ... "Plots","training-progress", ... 'Shuffle','every-epoch'); lossType = "mean-squared-error";

Finally, train the dlCHOMP optimizer object using the training datastore trainDS, loss type lossType and the training options trainOptions.

Set doTraining to false to download the trained dlCHOMP object. To train the dlCHOMP object yourself, set doTraining to true. Note that training takes around 40 mins on a Windows 10 system with 48 GB RAM using 8 parallel pool workers. So download this pretrained dlCHOMP object by default as the doTraining is set to false below.

doTraining = false; if doTraining == false pretrainedDLCHOMPFilename = "kukaIiwa7DLCHOMPTrained.zip"; disp("Downloading previously trained kukaIiwa7 dlCHOMP optimizer (46 MB)..."); pretrainedDLCHOMPFolder = exampleHelperDownloadData(dlCHOMPComponent,pretrainedDLCHOMPFilename); pretrainedDLCHOMPMATPath = fullfile(pretrainedDLCHOMPFolder,"trainedDLCHOMP.mat"); loadedData = load(pretrainedDLCHOMPMATPath,"trainedDLCHOMP","trainInfo"); dlchomp = loadedData.trainedDLCHOMP; trainInfo = loadedData.trainInfo; else trainInfo = trainDLCHOMP(dlchomp,trainDS,lossType,trainOptions); end

Downloading previously trained kukaIiwa7 dlCHOMP optimizer (46 MB)...

Infer Using Trained dlCHOMP Optimizer

Now use the trained optimizer to infer on a target environment. For the purpose of this example, use generate another data sample to get a target environment that is similar to the training data.

Generate Target Environment

Generate a target environment of spherical obstacles by using the generateSamples function with the same dlCHOMPDataOptions that you used for the training.

First, set the number of samples to generate to 1. Then set the number of samples to save for validation to 0.

dataOptions.NumSamples = 1; dataOptions.ValidationSplit = 0;

Next, to ensure that you are generating a data sample that you did not previously generate during the sample generation step, set the random number generator seed a different value than used for generating the training and validation data previously. Set the Seed property to 500 now.

dataOptions.Seed = 500;

Set a new location for saving the generated unique sample and generate a unique target environment.

folderForTargetEnvData = "targetEnvData"; rng(dataOptions.Seed,"twister"); testDS = dlchomp.generateSamples(dataOptions,folderForTargetEnvData);

------------------------ Starting data generation [1/1] Data Samples Written | ┃┃┃┃┃┃┃┃┃┃┃┃┃┃┃┃┃┃┃┃ 100.00% complete | Total Run Time: 00:00:14 secs Estimated Time Remaining: 00:00:00 secs ------------------------

filePathToUnseenSample = resolve(testDS.FileSet).FileName(1); [unseenStart,unseenGoal,env,chompTrajectory] = exampleHelperExtractDataFromDLCHOMPSample(filePathToUnseenSample);

Next, to generate code for shorter planning times, you first need to obtain certain MAT files.

Set Up MAT Files for Code Generation

To speed up the motion planning, you can use code generation. To generate code for motion planning, you need two MAT files. One MAT file should contain only the pretrained neural network named trainedNetwork that you load using the coder.loadDeepLearningNetwork (MATLAB Coder) function. The other MAT file should include numWaypoints, encoder, smoothnessOptions, collisionOptions, and solverOptions, that you load using the coder.load (MATLAB Coder) function for constructing the dlCHOMP optimizer object during code generation.

Create the pretrained neural network MAT file.

pretrainedDLCHOMPNetOnlyMATPath = "trainedDLCHOMPNetOnly.mat"; if ~isfile(pretrainedDLCHOMPNetOnlyMATPath) trainedNetwork = dlchomp.Network; save(pretrainedDLCHOMPNetOnlyMATPath,"trainedNetwork"); end

Next, create the MAT file containing the dlCHOMP object properties.

pretrainedDLCHOMPOtherPropertiesMATPath = "trainedDLCHOMPOtherProperties.mat"; if ~isfile(pretrainedDLCHOMPOtherPropertiesMATPath) numWaypoints = dlchomp.NumWaypoints; encoder = dlchomp.BPSEncoder; smoothnessOptions = dlchomp.SmoothnessOptions; collisionOptions = dlchomp.CollisionOptions; solverOptions = dlchomp.SolverOptions; save(pretrainedDLCHOMPOtherPropertiesMATPath, ... "numWaypoints","encoder", ... "smoothnessOptions","collisionOptions","solverOptions"); end

Generate Code for Planning

In order to generate a MEX function, exampleHelperPretrainedDLCHOMPOptimize_mex, from the MATLAB function exampleHelperPretrainedDLCHOMPOptimize, run this command:

codegen("exampleHelperPretrainedDLCHOMPOptimize","-args",{unseenStart,unseenGoal,coder.typeof(env,[4 inf],[0 1])})

The variable_dims argument of coder.typeof (MATLAB Coder) specifies that the input matrix environmentObstacles of the entry-point planning function can have an unbounded and variable-sized second dimension.

Plan Collision-Free Path

Next, use the planner entry-point helper function to output a geometric collision-free plan via inference on the unseen target environment env that was generated earlier.

[optimWptsDLCHOMP1,optimTptsDLCHOMP1,solninfoDLCHOMP1] = exampleHelperPretrainedDLCHOMPOptimize(unseenStart,unseenGoal,env);

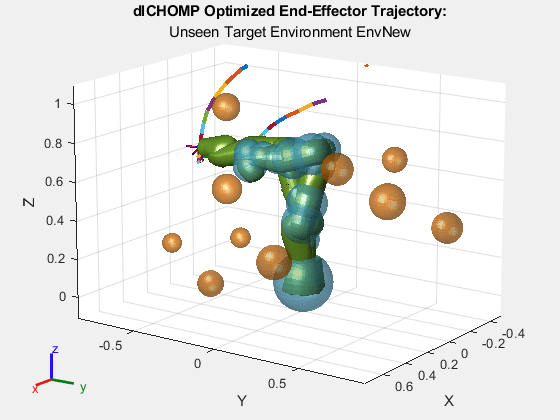

You can also reuse the exampleHelperPretrainedDLCHOMPOptimize for a different obstacle environment. Plan in a new environment, envNew, created by only keeping the last 10 spherical obstacles in env.

envNew = env(:,end-9:end); [optimWptsDLCHOMP2,optimTptsDLCHOMP2,solninfoDLCHOMP2] = exampleHelperPretrainedDLCHOMPOptimize(unseenStart,unseenGoal,envNew);

Use this code to run the MEX function in the original environment and new environment. The MEX function has the same behavior as exampleHelperPretrainedDLCHOMPOptimize, but results in shorter planning times.

[optimWptsDLCHOMP1,optimTptsDLCHOMP1,solninfoDLCHOMP1] = exampleHelperPretrainedDLCHOMPOptimize_mex(unseenStart,unseenGoal,env); envNew = env(:,end-9:end); [optimWptsDLCHOMP2,optimTptsDLCHOMP2,solninfoDLCHOMP2] = exampleHelperPretrainedDLCHOMPOptimize_mex(unseenStart,unseenGoal,envNew);

Visualize Planned Path

Visualize the planned output for both the original environment and new environment.

Visualize the trajectory as an animation. Rotate the figure during the animation to make the robot motion easier to see.

Compute camera angles for camera rotation.

numPredWaypoints = size(optimWptsDLCHOMP1,1); azRange = [90 125]; elRange = [0 15]; azimuths = linspace(azRange(1),azRange(2),numPredWaypoints); elevations = linspace(elRange(1),elRange(2),numPredWaypoints);

Show animated robot trajectory in env.

figure dlchomp.SphericalObstacles = env; ax1 = show(dlchomp,unseenStart); title("dlCHOMP Optimized End-Effector Trajectory:","Unseen Target Environment Env") axis equal hold on exampleHelperShowAnimatedRobotTrajectory(ax1,kuka,optimWptsDLCHOMP1,azimuths,elevations); hold off

Warning: An error occurred while drawing the scene: GraphicsView error in command: interactionsmanagermessage: TypeError: Cannot read properties of null (reading '_gview')

at new g (https://127.0.0.1:31521/toolbox/matlab/uitools/figurelibjs/release/bundle.mwBundle.gbtfigure-lib.js?mre=https

Show animated robot trajectory in envNew.

figure dlchomp.SphericalObstacles = envNew; ax2 = show(dlchomp,unseenStart); title("dlCHOMP Optimized End-Effector Trajectory:","Unseen Target Environment EnvNew"); axis equal hold on exampleHelperShowAnimatedRobotTrajectory(ax2,kuka,optimWptsDLCHOMP2,azimuths,elevations); hold off

Warning: An error occurred while drawing the scene: GraphicsView error in command: interactionsmanagermessage: TypeError: Cannot read properties of null (reading '_gview')

at new g (https://127.0.0.1:31521/toolbox/matlab/uitools/figurelibjs/release/bundle.mwBundle.gbtfigure-lib.js?mre=https

Supporting Functions

Planner Entry Point Function

exampleHelperPretrainedDLCHOMPOptimize plans motion in a spherical obstacle environment of a KUKA LBR iiwa 7 R800 7-axis robot workspace. The helper function accepts the spherical obstacles as the sphObstacles argument, which is a 4-by-N matrix where each column contains the information used to represent a spherical obstacle.

Within the helper function, in order to create the pretrained dlCHOMP optimizer object, you must:

Load the pretrained network by providing the path to its MAT file using the

coder.loadDeepLearningNetwork(MATLAB Coder) function.Load the other properties needed to initialize the

dlCHOMPoptimizer object from a separate MAT file using thecoder.load(MATLAB Coder) function.

Make all the loaded values and the rigid body tree of the robot persistent. This improves the speed of the mexed function because you only need to load the values and create the robot once when you use the function multiple times.

The input 4-by-N array sphObstacles is variable in size and consists of columns that each represent one spherical obstacle. For more information on how to represent a spherical obstacle, see the SphericalObstacles.

function [optimWpts,optimTpts,solinfo] = exampleHelperPretrainedDLCHOMPOptimize(start,goal,sphObstacles) %exampleHelperPretrainedDLCHOMPOptimize Plan in a spherical obstacle environment of a KUKA LBR iiwa 7 R800 7-axis robot % [optimWpts,optimTpts,solinfo] = exampleHelperVariableHeterogeneousPlanner(START,GOAL,SPHOBSTACLES) % Outputs a collision free geometric plan, OPTIMWPTS, of a KUKA LBR iiwa 7 % R800 7-axis Gen3 robot in an environment defined by SPHOBSTACLES which is % a variable sized 4-by-N-matrix that captures the information of spherical % obstacles in the environment. Each column is of the form [r; x; y; z], % defined with respect to the base frame of the robot. r is the radius of % the sphere, and x, y, and z are the x-, y-, and z-coordinates of the center % of the sphere, respectively. % START and GOAL are the start and goal joint configurations of the % robot, respectively, and are specified as a row vector. % This function is for internal use only and may be removed in a future release of MATLAB. %Copyright 2024 The MathWorks, Inc. %#codegen persistent trainednet kuka trainedDLCHOMP props % Load previously trained dlnetwork and other dlCHOMP properties % Then create dlCHOMP object from these loaded properties if isempty(trainednet) || isempty(kuka) || isempty(trainedDLCHOMP) trainednet = coder.loadDeepLearningNetwork(... 'trainedDLCHOMPNetOnly.mat', 'trainedNetwork'); props = coder.load('trainedDLCHOMPOtherProperties.mat', ... 'numWaypoints', ... % Load numWaypoints 'encoder', ... % Load bpsEncoder 'smoothnessOptions','collisionOptions','solverOptions'); % Load optimization parameters kuka = loadrobot('kukaIiwa7','DataFormat','row'); % Create rigid body tree from robot name trainedDLCHOMP = dlCHOMP( ... kuka, ... props.encoder, ... props.numWaypoints, ... SmoothnessOptions=props.smoothnessOptions, ... CollisionOptions=props.collisionOptions, ... SolverOptions=props.solverOptions, ... Network=trainednet); end % Set obstacles, then optimize between start and goal trainedDLCHOMP.SphericalObstacles = sphObstacles; [optimWpts,optimTpts,solinfo] = trainedDLCHOMP.optimize(start,goal); end

See Also

dlCHOMP | generateSamples | optimize