Fuse Inertial Sensor Data Using insEKF-Based Flexible Fusion

Framework

The insEKF filter object provides a flexible framework that you can use to

fuse inertial sensor data. You can fuse measurement data from various inertial sensors by

selecting or customizing the sensor models used in the filter, and estimate different

platform states by selecting or customizing the motion model used in the filter. The insEKF object is based on a continuous-discrete extended Kalman filter, in

which the state prediction step is continuous, and the measurement correction or fusion step

is discrete.

Overall, the object contains 7 properties and 13 object functions. The object also interacts with other objects and functions to a support regular filter workflow, as well as filter tuning.

Object Properties

The insEKF object maintains major filter variables and

information of the motion model and sensor models using these properties:

State— Platform state saved in the filter, specified as a vector. This property maintains the whole state vector, including the platform states defined by the motion model, such as orientation, position, and velocity, as well as the sensor states defined by sensor models, such as sensor biases.You can interact with this property in various ways:

Display the state information saved in the vector by using the

stateinfoobject function.Directly specify this property. This may not be convenient since the dimension of the state vector can be large.

Get or set a part or component of the state vector by using the

statepartsobject function.

StateCovariance— State estimate error covariance, specified as a matrix. This property maintains the whole covariance matrix corresponding to the state vector. You can interact with this property in various ways:Directly specify this property. This may not be convenient since the dimension of the covariance matrix can be large.

Get or set a part of the covariance matrix by using the

statecovpartsobject function.

AdditiveProcessNoise— Additive process noise covariance, specified as a matrix. This property maintains the whole additive process noise covariance matrix corresponding to the state vector.MotionModel— Motion model to predict the filter state, specified as an object. You can use one of these options to specify this property when constructing the filter:insMotionOrientation— Model orientation-only platform motion assuming a constant angular velocity. Using this object adds the quaternion and angular velocity state to theStateproperty.insMotionPose— Model 3-D motion assuming constant angular velocity and constant linear acceleration. Using this model adds the quaternion, angular velocity, position, linear velocity, and acceleration state to theStateproperty.positioning.INSMotionModel— An interface class to implement a motion model object used with the filter. To customize a motion model object, you must inherit from this interface class and implement at least two methods:modelstatesandstateTransition.

Sensors— Sensor models that model the corresponding sensor measurement based on the platform state, specified as a cell array of INS sensor objects. You can specify each INS sensor object using one of these options:insAccelerometer— Model accelerometer readings, including the gravitational acceleration and sensor bias by default. The model also includes the sensor acceleration if theStateproperty of the filter includes theAccelerationstate.insMagnetometer— Model magnetometer readings, including the geomagnetic vector and the sensor bias.insGyroscope— Model gyroscope readings, including the angular velocity vector and the sensor bias.insGPS— Model GPS readings, including the latitude, longitude, and altitude coordinates. If you fuse velocity data from the GPS sensor, the object additionally models velocity measurements.positioning.INSSensorModel— An interface class to implement a sensor model object used with the filter. To customize a sensor model object, you must inherit from this interface class and implement at least themeasurementmethod.

SensorNames— Names of the sensors added to the filter, specified as a cell array of character vector. The filter object assigns default names to the added sensors. These sensor names are useful when you use various object functions of the filter.To customize the sensor names, you must use the

insOptionsobject.

ReferenceFrame— Reference frame of the extended Kalman filter object, specified as"NED"for the north-east-down frame by default. To customize it as"ENU"for the east-north-up frame, you must use theinsOptionsobject.Note

You can also specify the data type used in the filter as

double(default) orsingleby specifying theDataTypeproperty of theinsOptionsobject.

Object Functions

The insEKF object provides various object functions for implementing common

filter workflows, accessing filter states and covariances, and filter tuning.

These object functions support common filter workflows:

predict— Predict the filter state forward in time based on the motion model used in the filter.fuse— Fuse or correct the filter state using a measurement based on a sensor model previously added to the filter. You can use this object function multiple times if you need to fuse multiple measurements.correct— Correct the filter using direct state measurement of the filter state. A direct measurement contains a subset of the filter state vector elements. You can use this object function multiple times for multiple direct measurements.residual— Return the residual and residual covariance of a measurement based on the current state of the filter.estimateStates— Obtain the state estimates based on a timetable of sensor data. The object function processes the measurements one-by-one and returns the corresponding state estimates.

These object functions enable you to access various states and variables maintained by the filter:

stateinfo— Return or display the state components saved in theStateproperty of the filter. Using this function, you can observe what components the state consists of and the indices for all the state components.stateparts— Get or set parts of the state vector. Since the state vector contain many components, this object function enables you to get or set only a component of the whole state vector.statecovparts— Get or set parts of the state estimate error covariance matrix. Similar to thestatepartsfunction, this object function enables you to get or set only a part of the state estimate error covariance matrix.

To obtain more accurate state estimates, you often need to tune the filter parameters

to reduce estimate errors. The insEKF object provides these object

functions to facilitate filter tuning:

tune— Adjust theAdditiveProcessNoiseproperty of the filter object and measurement noise of the sensors to reduce the state estimation error between the filter estimates and the ground truth.You can use the

tunernoisefunction to obtain an example for the measure noise structure, required by thetunefunction.You can optionally use the

tunerconfigobject to specify tuning parameters, such as function tolerance and the cost function, as well as which elements of the process noise matrix to tuned.

createTunerCostTemplate— Creates a template for a tuner cost function that you can further modify to customize your own cost function.tunerCostFcnParam— Creates a required structure for tuning aninsEKFfilter with a custom cost function. The structure is useful when generating C-code for a cost function using MATLAB® Coder™.

You can use the copy and reset object functions

to conveniently create a new insEKF object based on an existing

insEKF object.

Example: Fuse Inertial Sensor Data Using insEKF

This example introduces the basic workflows for fusing inertial sensor data using the insEKF object, which supports a flexible fusion framework based on a continuous discrete Kalman filter.

Create insEKF Object

Create an insEKF object directly.

filter1 = insEKF

filter1 =

insEKF with properties:

State: [13×1 double]

StateCovariance: [13×13 double]

AdditiveProcessNoise: [13×13 double]

MotionModel: [1×1 insMotionOrientation]

Sensors: {[1×1 insAccelerometer] [1×1 insGyroscope]}

SensorNames: {'Accelerometer' 'Gyroscope'}

ReferenceFrame: 'NED'

From the Sensors property, note that the object contains two sensor models, insAccelerometer and insGyroscope by default. These enables you to fuse accelerometer and gyroscope data, respectively. From the MotionModel property, note that the object defaults to an insMotionOrientation model, which models rotation-only motion and not translational motion. Due to the specified motion model and sensor models, the state of the filter is a 13-by-1 vector. Get the components and corresponding indices from the state vector using the stateinfo object function.

stateinfo(filter1)

ans = struct with fields:

Orientation: [1 2 3 4]

AngularVelocity: [5 6 7]

Accelerometer_Bias: [8 9 10]

Gyroscope_Bias: [11 12 13]

Note that, in addition to the orientation and angular velocity states, the filter also includes the accelerometer bias and gyroscope bias.

You can explicitly specify the motion model and sensor models when constructing the filter. For example, create an insEKF object and specify the motion model as an insMotionPose object, which models both rotational motion and translational motion, and specify the sensors as an insAccelerometer, an insGPS, and another insGPS object. This enables the fusion of one set of accelerometer data and two sets of GPS data.

filter2 = insEKF(insAccelerometer,insGPS,insGPS,insMotionPose)

filter2 =

insEKF with properties:

State: [19×1 double]

StateCovariance: [19×19 double]

AdditiveProcessNoise: [19×19 double]

MotionModel: [1×1 insMotionPose]

Sensors: {[1×1 insAccelerometer] [1×1 insGPS] [1×1 insGPS]}

SensorNames: {'Accelerometer' 'GPS' 'GPS_1'}

ReferenceFrame: 'NED'

The State property is a 19-by-1 vector that contains these components:

stateinfo(filter2)

ans = struct with fields:

Orientation: [1 2 3 4]

AngularVelocity: [5 6 7]

Position: [8 9 10]

Velocity: [11 12 13]

Acceleration: [14 15 16]

Accelerometer_Bias: [17 18 19]

The SensorNames property enables you to indicate specific sensors when using various object functions of the filter. The filter generates the names for added sensors in a default format. To provide custom names for the sensors, you must use the insOptions object. The insOptions object can also specify the data type of variables used in the filter and the ReferenceFrame of the filter.

options = insOptions(SensorNamesSource="Property", ... SensorNames={'Sensor1','Sensor2','Sensor3'}, ... Datatype="single", ... ReferenceFrame="ENU"); filter3 = insEKF(insAccelerometer,insGPS,insGPS,insMotionPose,options)

filter3 =

insEKF with properties:

State: [19×1 single]

StateCovariance: [19×19 single]

AdditiveProcessNoise: [19×19 single]

MotionModel: [1×1 insMotionPose]

Sensors: {[1×1 insAccelerometer] [1×1 insGPS] [1×1 insGPS]}

SensorNames: {'Sensor1' 'Sensor2' 'Sensor3'}

ReferenceFrame: 'ENU'

You can also directly obtain the indices of a state component based on the sensor names. For example,

stateinfo(filter3,'Sensor1_Bias')ans = 1×3

17 18 19

Configure Filter Properties

Create a new insEKF object with an accelerometer and a gyroscope. Explicitly define these two sensors for later usage.

accSensor = insAccelerometer; gyroSensor = insGyroscope; filter = insEKF(accSensor,gyroSensor)

filter =

insEKF with properties:

State: [13×1 double]

StateCovariance: [13×13 double]

AdditiveProcessNoise: [13×13 double]

MotionModel: [1×1 insMotionOrientation]

Sensors: {[1×1 insAccelerometer] [1×1 insGyroscope]}

SensorNames: {'Accelerometer' 'Gyroscope'}

ReferenceFrame: 'NED'

Load prerecorded data for an accelerometer and a gyroscope. The sample rate of the recorded data is 100 Hz. It contains the sensor data, ground truth, and the initial orientation represented by a quaternion.

ld = load("accelGyroINSEKFData.mat")ld = struct with fields:

sampleRate: 100

sensorData: [300×2 timetable]

groundTruth: [300×1 timetable]

initOrient: [1×1 quaternion]

Before fusing the sensor data, you need to set up the initial orientation for the filter state. First, observe the state information.

stateinfo(filter)

ans = struct with fields:

Orientation: [1 2 3 4]

AngularVelocity: [5 6 7]

Accelerometer_Bias: [8 9 10]

Gyroscope_Bias: [11 12 13]

Query the index of a state component directly by using the corresponding sensor.

stateinfo(filter,accSensor,"Bias")ans = 1×3

8 9 10

Set only the Orientation component of the state vector using the stateparts object function.

quatElements = compact(ld.initOrient); % Convert the quaternion object to a vector of four elements stateparts(filter,"Orientation",quatElements); % Specify the Orientation state component stateparts(filter,"Orientation") % Show the specified Orientation state component

ans = 1×4

1.0000 -0.0003 0.0001 0.0002

Specify the covariance matrix corresponding to the orientation as a diagonal matrix.

statecovparts(filter,"Orientation",1e-2);Fuse Sensor Data

You can fuse sensor data by recursively calling the predict and fuse object functions.

Preallocate variables for saving estimated results.

N = size(ld.sensorData,1); estOrient = quaternion.zeros(N,1); dt = seconds(diff(ld.sensorData.Properties.RowTimes));

Predict the filter state, fuse the sensor data, and obtain the estimates.

for ii = 1:N if ii ~= 1 % Predict forward in time. predict(filter,dt(ii-1)); end % Fuse accelerometer data. fuse(filter,accSensor,ld.sensorData.Accelerometer(ii,:),1); % Fuse gyroscope data. fuse(filter,gyroSensor,ld.sensorData.Gyroscope(ii,:),1); % Extract the orientation state estimate using the stateparts object function. estOrient(ii) = quaternion(stateparts(filter,"Orientation")); end

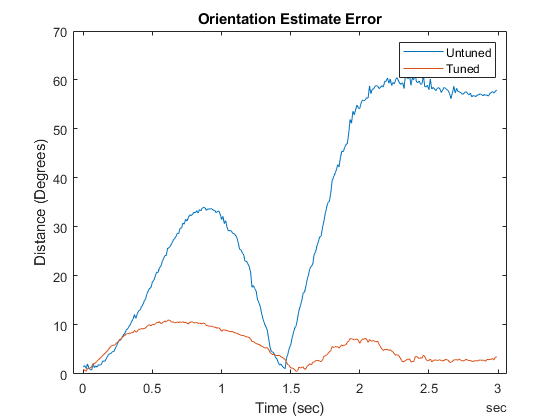

Visualize the estimate error, in quaternion distance, using the dist object function of the quaternion object.

figure times = ld.groundTruth.Properties.RowTimes; distance = rad2deg(dist(estOrient,ld.groundTruth.Orientation)); plot(times,distance) xlabel("Time (sec)") ylabel("Distance (Degrees)") title("Orientation Estimate Error")

The results indicate that the estimate errors are large. You can also use the estimateStates object function to process the sensor data and obtain the same results.

Tune Filter

Given that the estimation results are not ideal, you can try to tune the filter parameters to reduce estimate errors.

Create a measurement noise structure and a tunerconfig object used to configure the tuning parameters.

mnoise = tunernoise(filter); cfg = tunerconfig(filter,MaxIterations=10,ObjectiveLimit=1e-4);

Reinitialize the filter. Use the tune object function to tune the filter and obtain the tuned noise.

stateparts(filter,"Orientation",quatElements); statecovparts(filter,"Orientation",1e-2); tunedmn = tune(filter,mnoise,ld.sensorData,ld.groundTruth,cfg);

Iteration Parameter Metric

_________ _________ ______

1 AdditiveProcessNoise(1) 0.3787

1 AdditiveProcessNoise(15) 0.3761

1 AdditiveProcessNoise(29) 0.3695

1 AdditiveProcessNoise(43) 0.3655

1 AdditiveProcessNoise(57) 0.3533

1 AdditiveProcessNoise(71) 0.3446

1 AdditiveProcessNoise(85) 0.3431

1 AdditiveProcessNoise(99) 0.3428

1 AdditiveProcessNoise(113) 0.3427

1 AdditiveProcessNoise(127) 0.3426

1 AdditiveProcessNoise(141) 0.3298

1 AdditiveProcessNoise(155) 0.3206

1 AdditiveProcessNoise(169) 0.3200

1 AccelerometerNoise 0.3199

1 GyroscopeNoise 0.3198

2 AdditiveProcessNoise(1) 0.3126

2 AdditiveProcessNoise(15) 0.3098

2 AdditiveProcessNoise(29) 0.3018

2 AdditiveProcessNoise(43) 0.2988

2 AdditiveProcessNoise(57) 0.2851

2 AdditiveProcessNoise(71) 0.2784

2 AdditiveProcessNoise(85) 0.2760

2 AdditiveProcessNoise(99) 0.2744

2 AdditiveProcessNoise(113) 0.2744

2 AdditiveProcessNoise(127) 0.2743

2 AdditiveProcessNoise(141) 0.2602

2 AdditiveProcessNoise(155) 0.2537

2 AdditiveProcessNoise(169) 0.2527

2 AccelerometerNoise 0.2524

2 GyroscopeNoise 0.2524

3 AdditiveProcessNoise(1) 0.2476

3 AdditiveProcessNoise(15) 0.2432

3 AdditiveProcessNoise(29) 0.2397

3 AdditiveProcessNoise(43) 0.2381

3 AdditiveProcessNoise(57) 0.2255

3 AdditiveProcessNoise(71) 0.2226

3 AdditiveProcessNoise(85) 0.2221

3 AdditiveProcessNoise(99) 0.2202

3 AdditiveProcessNoise(113) 0.2201

3 AdditiveProcessNoise(127) 0.2201

3 AdditiveProcessNoise(141) 0.2090

3 AdditiveProcessNoise(155) 0.2070

3 AdditiveProcessNoise(169) 0.2058

3 AccelerometerNoise 0.2052

3 GyroscopeNoise 0.2052

4 AdditiveProcessNoise(1) 0.2051

4 AdditiveProcessNoise(15) 0.2027

4 AdditiveProcessNoise(29) 0.2019

4 AdditiveProcessNoise(43) 0.2000

4 AdditiveProcessNoise(57) 0.1909

4 AdditiveProcessNoise(71) 0.1897

4 AdditiveProcessNoise(85) 0.1882

4 AdditiveProcessNoise(99) 0.1871

4 AdditiveProcessNoise(113) 0.1870

4 AdditiveProcessNoise(127) 0.1870

4 AdditiveProcessNoise(141) 0.1791

4 AdditiveProcessNoise(155) 0.1783

4 AdditiveProcessNoise(169) 0.1751

4 AccelerometerNoise 0.1748

4 GyroscopeNoise 0.1747

5 AdditiveProcessNoise(1) 0.1742

5 AdditiveProcessNoise(15) 0.1732

5 AdditiveProcessNoise(29) 0.1712

5 AdditiveProcessNoise(43) 0.1712

5 AdditiveProcessNoise(57) 0.1626

5 AdditiveProcessNoise(71) 0.1615

5 AdditiveProcessNoise(85) 0.1598

5 AdditiveProcessNoise(99) 0.1590

5 AdditiveProcessNoise(113) 0.1589

5 AdditiveProcessNoise(127) 0.1589

5 AdditiveProcessNoise(141) 0.1517

5 AdditiveProcessNoise(155) 0.1508

5 AdditiveProcessNoise(169) 0.1476

5 AccelerometerNoise 0.1473

5 GyroscopeNoise 0.1470

6 AdditiveProcessNoise(1) 0.1470

6 AdditiveProcessNoise(15) 0.1470

6 AdditiveProcessNoise(29) 0.1463

6 AdditiveProcessNoise(43) 0.1462

6 AdditiveProcessNoise(57) 0.1367

6 AdditiveProcessNoise(71) 0.1360

6 AdditiveProcessNoise(85) 0.1360

6 AdditiveProcessNoise(99) 0.1350

6 AdditiveProcessNoise(113) 0.1350

6 AdditiveProcessNoise(127) 0.1350

6 AdditiveProcessNoise(141) 0.1289

6 AdditiveProcessNoise(155) 0.1288

6 AdditiveProcessNoise(169) 0.1262

6 AccelerometerNoise 0.1253

6 GyroscopeNoise 0.1246

7 AdditiveProcessNoise(1) 0.1246

7 AdditiveProcessNoise(15) 0.1244

7 AdditiveProcessNoise(29) 0.1205

7 AdditiveProcessNoise(43) 0.1203

7 AdditiveProcessNoise(57) 0.1125

7 AdditiveProcessNoise(71) 0.1122

7 AdditiveProcessNoise(85) 0.1117

7 AdditiveProcessNoise(99) 0.1106

7 AdditiveProcessNoise(113) 0.1104

7 AdditiveProcessNoise(127) 0.1104

7 AdditiveProcessNoise(141) 0.1058

7 AdditiveProcessNoise(155) 0.1052

7 AdditiveProcessNoise(169) 0.1035

7 AccelerometerNoise 0.1024

7 GyroscopeNoise 0.1014

8 AdditiveProcessNoise(1) 0.1014

8 AdditiveProcessNoise(15) 0.1012

8 AdditiveProcessNoise(29) 0.1012

8 AdditiveProcessNoise(43) 0.1005

8 AdditiveProcessNoise(57) 0.0948

8 AdditiveProcessNoise(71) 0.0948

8 AdditiveProcessNoise(85) 0.0938

8 AdditiveProcessNoise(99) 0.0934

8 AdditiveProcessNoise(113) 0.0931

8 AdditiveProcessNoise(127) 0.0931

8 AdditiveProcessNoise(141) 0.0896

8 AdditiveProcessNoise(155) 0.0889

8 AdditiveProcessNoise(169) 0.0867

8 AccelerometerNoise 0.0859

8 GyroscopeNoise 0.0851

9 AdditiveProcessNoise(1) 0.0851

9 AdditiveProcessNoise(15) 0.0850

9 AdditiveProcessNoise(29) 0.0824

9 AdditiveProcessNoise(43) 0.0819

9 AdditiveProcessNoise(57) 0.0771

9 AdditiveProcessNoise(71) 0.0771

9 AdditiveProcessNoise(85) 0.0762

9 AdditiveProcessNoise(99) 0.0759

9 AdditiveProcessNoise(113) 0.0754

9 AdditiveProcessNoise(127) 0.0754

9 AdditiveProcessNoise(141) 0.0734

9 AdditiveProcessNoise(155) 0.0724

9 AdditiveProcessNoise(169) 0.0702

9 AccelerometerNoise 0.0697

9 GyroscopeNoise 0.0689

10 AdditiveProcessNoise(1) 0.0689

10 AdditiveProcessNoise(15) 0.0686

10 AdditiveProcessNoise(29) 0.0658

10 AdditiveProcessNoise(43) 0.0655

10 AdditiveProcessNoise(57) 0.0622

10 AdditiveProcessNoise(71) 0.0620

10 AdditiveProcessNoise(85) 0.0616

10 AdditiveProcessNoise(99) 0.0615

10 AdditiveProcessNoise(113) 0.0607

10 AdditiveProcessNoise(127) 0.0606

10 AdditiveProcessNoise(141) 0.0590

10 AdditiveProcessNoise(155) 0.0578

10 AdditiveProcessNoise(169) 0.0565

10 AccelerometerNoise 0.0562

10 GyroscopeNoise 0.0557

filter.AdditiveProcessNoise

ans = 13×13

0.5849 0 0 0 0 0 0 0 0 0 0 0 0

0 0.6484 0 0 0 0 0 0 0 0 0 0 0

0 0 0.5634 0 0 0 0 0 0 0 0 0 0

0 0 0 1.4271 0 0 0 0 0 0 0 0 0

0 0 0 0 4.3574 0 0 0 0 0 0 0 0

0 0 0 0 0 2.9527 0 0 0 0 0 0 0

0 0 0 0 0 0 1.3071 0 0 0 0 0 0

0 0 0 0 0 0 0 4.3574 0 0 0 0 0

0 0 0 0 0 0 0 0 2.2415 0 0 0 0

0 0 0 0 0 0 0 0 0 0.6449 0 0 0

⋮

Batch-process the sensor data using the estimateStates object function. You can also recursively call the predict and fuse object functions to obtain the same results.

tunedEst = estimateStates(filter,ld.sensorData,tunedmn);

Compare the tuned and untuned estimates against the truth data. The results indicate that the tuning process greatly reduces the estimate errors.

distanceTuned = rad2deg(dist(tunedEst.Orientation,ld.groundTruth.Orientation)); hold on plot(times,distanceTuned) legend("Untuned","Tuned")