Verify Robustness of ONNX Network

This example shows how to verify the adversarial robustness of an imported ONNX™ deep neural network.

Neural networks can be susceptible to a phenomenon known as adversarial examples [1], where very small changes to an input can cause the network predictions to significantly change. For example, making small changes to the pixels in an image can cause the image to be misclassified. These changes are often imperceptible to humans.

A network is adversarially robust if the output of the network does not change significantly when the input is perturbed. For classification tasks, adversarial robustness means that the index of the largest output of the final fully connected layer does not change under the perturbation, and therefore the predicted class does not change [2].
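Stated more formally, under the assumption of a perturbation bounded per input element (the symbols $f$, $x$, $x'$, $\epsilon$, and $c$ are notation introduced here for illustration and do not appear elsewhere in this example):

```latex
\[
\forall\, x' \ \text{such that}\ \lVert x' - x \rVert_\infty \le \epsilon :\quad
\arg\max_{k} f_k(x') \;=\; \arg\max_{k} f_k(x) \;=\; c
\]
```

Here $f_k$ is the $k$-th output of the final fully connected layer, $x$ is the original input, $\epsilon$ is the largest allowed change to any single input element, and $c$ is the predicted class.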

In this example, import a network from ONNX™ and verify its adversarial robustness.

This example requires the AI Verification Library for Deep Learning Toolbox™ and the Deep Learning Toolbox Converter for ONNX Model Format support packages. If these support packages are not installed, install them using the Add-On Explorer. To open the Add-On Explorer, go to the MATLAB® Toolstrip and click Add-Ons > Get Add-Ons.

Import ONNX Network

Adversarial training is a technique for training a network so that it is robust to adversarial examples [3]. Load an ONNX™ network that has been trained to be robust to adversarial examples. For an example showing how to train a robust network, see Train Image Classification Network Robust to Adversarial Examples.

net = importNetworkFromONNX("robustNetwork.onnx",GenerateCoderFiles=false);

This ONNX network has a convolutional architecture with repeating sets of convolution, batch normalization and ReLU layers, followed by a global average pooling layer, and then a fully connected and softmax layer.

To verify a network, you will use the verifyNetworkRobustness function. Not all layers are supported by the verifyNetworkRobustness function. The imported network contains two unsupported layers: Transpose_To_SoftmaxLayer1000 and a CustomOutputLayer named softmax1000Output.

net.Layers

ans =

13×1 Layer array with layers:

1 'imageinput' Image Input 28×28×1 images

2 'conv_1' 2-D Convolution 10 3×3×1 convolutions with stride [2 2] and padding [0 0 0 0]

3 'batchnorm_1' Batch Normalization Batch normalization with 10 channels

4 'relu_1' ReLU ReLU

5 'conv_2' 2-D Convolution 20 3×3×10 convolutions with stride [2 2] and padding [0 0 0 0]

6 'batchnorm_2' Batch Normalization Batch normalization with 20 channels

7 'relu_2' ReLU ReLU

8 'conv_3' 2-D Convolution 40 3×3×20 convolutions with stride [2 2] and padding [0 0 0 0]

9 'batchnorm_3' Batch Normalization Batch normalization with 40 channels

10 'relu_3' ReLU ReLU

11 'gap' 2-D Global Average Pooling 2-D global average pooling

12 'Transpose_To_SoftmaxLayer1000' robustNetwork.Transpose_To_SoftmaxLayer1000 robustNetwork.Transpose_To_SoftmaxLayer1000

13 'softmax1000Output' Custom output ('UU') See the OutputInformation property to find the output dimension ordering that is produced by this layer.
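One way to spot layers that may need replacing is to list the class of each layer next to its name. The following is a minimal sketch that assumes net is the network imported above; the variable names layerName, layerClass, and layerTable are illustrative.

```matlab
% Assumes net is the dlnetwork returned by importNetworkFromONNX above.
layerName = string({net.Layers.Name})';

% Record the class of every layer in the network.
layerClass = arrayfun(@(l) string(class(l)),net.Layers);
layerClass = layerClass(:);

% Show names and classes side by side.
layerTable = table(layerName,layerClass);
disp(layerTable)
```

Layers such as robustNetwork.Transpose_To_SoftmaxLayer1000, whose class lives in a package generated by the importer, are candidates to check against the list of layers that verifyNetworkRobustness supports.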

To prepare the network for verification and replace unsupported layers, use the helper function prepareNetworkForVerification, found at the end of this example. For more information about supported layers, see verifyNetworkRobustness.

net = prepareNetworkForVerification(net);

Show the layers of the network.

net.Layers

ans =

12×1 Layer array with layers:

1 'imageinput' Image Input 28×28×1 images

2 'conv_1' 2-D Convolution 10 3×3×1 convolutions with stride [2 2] and padding [0 0 0 0]

3 'batchnorm_1' Batch Normalization Batch normalization with 10 channels

4 'relu_1' ReLU ReLU

5 'conv_2' 2-D Convolution 20 3×3×10 convolutions with stride [2 2] and padding [0 0 0 0]

6 'batchnorm_2' Batch Normalization Batch normalization with 20 channels

7 'relu_2' ReLU ReLU

8 'conv_3' 2-D Convolution 40 3×3×20 convolutions with stride [2 2] and padding [0 0 0 0]

9 'batchnorm_3' Batch Normalization Batch normalization with 40 channels

10 'relu_3' ReLU ReLU

11 'gap' 2-D Global Average Pooling 2-D global average pooling

12 'fc' Fully Connected 10 fully connected layer

Load Test Data

Load test images of digits with which to verify the network.

[XTest,TTest] = digitTest4DArrayData;

Verification of the whole test set can take a long time. Use a subset of the test data for verification.

numObservations = numel(TTest);

numToVerify = 200;

idx = randi(numObservations,numToVerify,1);

X = XTest(:,:,:,idx);

T = TTest(idx);
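Note that randi draws indices with replacement, so the same test image can appear more than once in the subset. If you prefer distinct images, one possible alternative is randperm. This is a sketch with stand-in values, not part of the original example; in the example, numObservations comes from numel(TTest).

```matlab
% Stand-in values; in the example these come from numel(TTest) and the chosen subset size.
numObservations = 5000;
numToVerify = 200;

% Fix the random seed so that the subset is reproducible.
rng("default")

% Draw numToVerify unique indices (no repeats).
idx = randperm(numObservations,numToVerify);
```

You can then index XTest and TTest with idx exactly as in the example.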

Convert the test images to a dlarray object with the data format "SSCB" (spatial, spatial, channel, batch), which represents image data.

X = dlarray(X,"SSCB");

Verify Network Robustness

To verify the adversarial robustness of a deep learning network, use the verifyNetworkRobustness function.

To verify network robustness, the verifyNetworkRobustness function checks that, for all inputs between the specified input bounds, there does not exist an adversarial example. The absence of an adversarial example means that, for all images within the input set defined by the lower and upper input bounds, the predicted class label matches the specified label (usually the true class label).
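For intuition about how a check over a whole set of inputs can succeed or be inconclusive, consider a toy linear classifier and simple interval bounds. This is a simplified sketch for illustration only: it is not the algorithm that verifyNetworkRobustness uses, and the weights, input, and variable names are hypothetical.

```matlab
% Toy two-class linear classifier y = W*x + b (hypothetical values).
W = [1 -1; -1 1];
b = [0; 0];
x = [0.8; 0.2];

% Input set: every element can move by at most epsilon.
epsilon = 0.05;
xLower = x - epsilon;
xUpper = x + epsilon;

% Interval bounds on the outputs. Positive weights pair with the matching
% input bound, negative weights with the opposite one.
Wpos = max(W,0);
Wneg = min(W,0);
yLower = Wpos*xLower + Wneg*xUpper + b;
yUpper = Wpos*xUpper + Wneg*xLower + b;

% Sufficient check: the true class is certain to win if its lower bound
% exceeds the upper bound of every other class.
trueClass = 1;
otherClasses = setdiff(1:numel(b),trueClass);
isVerified = all(yLower(trueClass) > yUpper(otherClasses));
```

In this toy case isVerified is true, because the lower bound for class 1 (0.5) exceeds the upper bound for class 2 (-0.5). When such a check fails, the result is inconclusive rather than a proof that an adversarial example exists, because the output bounds can be looser than the true range of outputs.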

For each set of input lower and upper bounds, the function returns one of these values:

- "verified": The network is robust to adversarial inputs between the specified bounds.

- "violated": The network is not robust to adversarial inputs between the specified bounds.

- "unproven": The function cannot prove whether the network is robust to adversarial inputs between the specified bounds.

Create lower and upper bounds for each of the test images. Verify the network robustness to an input perturbation between –0.05 and 0.05 for each pixel.

perturbation = 0.05;

XLower = X - perturbation;

XUpper = X + perturbation;

Verify the network robustness for the specified input bounds and true class labels.

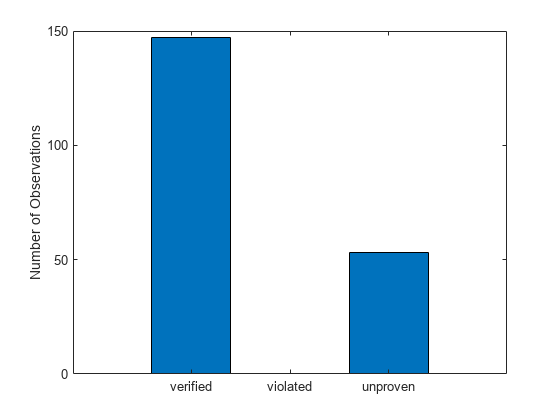

result = verifyNetworkRobustness(net,XLower,XUpper,T);

summary(result)

result: 200×1 categorical

verified 151

violated 0

unproven 49

<undefined> 0

Plot the number of observations for each verification result.

figure

bar(countcats(result))

xticklabels(categories(result))

ylabel("Number of Observations")
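To summarize the outcome as a single number, you can compute the fraction of verified observations and collect the indices of the unproven ones. The sketch below uses a stand-in categorical array rebuilt from the summary counts shown earlier (151 verified, 49 unproven), so the ordering of its elements is not that of the real output; in practice, use the result variable returned by verifyNetworkRobustness. The names verifiedFraction and unprovenIdx are illustrative.

```matlab
% Stand-in for the output of verifyNetworkRobustness, rebuilt from the summary counts.
labels = [repmat("verified",151,1); repmat("unproven",49,1)];
result = categorical(labels,["verified","violated","unproven"]);

% Fraction of observations proven robust within the bounds.
verifiedFraction = nnz(result == "verified")/numel(result);

% Indices of observations that were not proven, for example to retry
% them with a smaller perturbation.
unprovenIdx = find(result == "unproven");

fprintf("Verified: %.1f%% (%d unproven)\n",100*verifiedFraction,numel(unprovenIdx))
```

With the counts above, this reports that 75.5% of the observations are verified and 49 are unproven.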

Supporting Functions

prepareNetworkForVerification

The prepareNetworkForVerification function prepares the ONNX™ model for verification by replacing or removing any unsupported layers. The function:

- Replaces the unsupported Transpose_To_SoftmaxLayer1000 ONNX™ layer with a fully connected layer with the same weights and biases.

- Removes the CustomOutputLayer.

This function is only valid for this ONNX™ model. You will need to update this function for your own model.

function net = prepareNetworkForVerification(net)

% Extract the weights and biases from the unsupported Transpose_To_SoftmaxLayer1000 layer.

layerWeights = extractdata(net.Learnables.Value{13});

layerBias = extractdata(net.Layers(12).Vars.fc_Add_B);

% Create a new fully connected layer.

fcLayer = fullyConnectedLayer(10,Weights=layerWeights,Bias=layerBias,Name="fc");

% Remove the unsupported Transpose_To_SoftmaxLayer1000 layer and the CustomOutputLayer layer.

net = removeLayers(net,["Transpose_To_SoftmaxLayer1000","softmax1000Output"]);

% Add the new fully connected layer and connect it.

net = addLayers(net,fcLayer);

net = connectLayers(net,"gap","fc");

% reset the net output name

net.OutputNames = [];

% Initialize the network.

net = initialize(net);

end

References

[1] Goodfellow, Ian J., Jonathon Shlens, and Christian Szegedy. “Explaining and Harnessing Adversarial Examples.” Preprint, submitted March 20, 2015. https://arxiv.org/abs/1412.6572.

[2] Singh, Gagandeep, Timon Gehr, Markus Püschel, and Martin Vechev. “An Abstract Domain for Certifying Neural Networks.” Proceedings of the ACM on Programming Languages 3, no. POPL (January 2, 2019): 1–30. https://doi.org/10.1145/3290354.

[3] Madry, Aleksander, Aleksandar Makelov, Ludwig Schmidt, Dimitris Tsipras, and Adrian Vladu. “Towards Deep Learning Models Resistant to Adversarial Attacks.” Preprint, submitted September 4, 2019. https://arxiv.org/abs/1706.06083.

See Also

dlnetwork | dlarray | estimateNetworkOutputBounds | verifyNetworkRobustness | importNetworkFromONNX

Topics

- Verify Robustness of Deep Learning Neural Network

- Interoperability Between Deep Learning Toolbox, TensorFlow, PyTorch, and ONNX

- Tips on Importing Models from TensorFlow, PyTorch, and ONNX

- Verification of Neural Networks

- Train Image Classification Network Robust to Adversarial Examples

- Verify an Airborne Deep Learning System