Detect Objects Using YOLOv4-tiny Network Deployed to FPGA

This example shows how to deploy a trained you only look once (YOLO) v4-tiny object detector to a target FPGA board.

Create YOLOv4-tiny Detector Object

In this example, you use a pretrained YOLOv4 object detector. First, download the Computer Vision Toolbox Model for YOLOv4 Object Detection. Then view the network:

name = "tiny-yolov4-coco";

vehicleDetector = yolov4ObjectDetector(name);

net = vehicleDetector.Network

net =

dlnetwork with properties:

Layers: [74×1 nnet.cnn.layer.Layer]

Connections: [80×2 table]

Learnables: [80×3 table]

State: [38×3 table]

InputNames: {'input_1'}

OutputNames: {'conv_31' 'conv_38'}

Initialized: 1

View summary with summary.

The network contains a resize2dLayer layer that uses the bilinear interpolation method, which Deep Learning HDL Toolbox does not support. Create a resize2dLayer layer with default properties, which uses the nearest interpolation method, and use it to replace the existing resize2dLayer layer.

layer_resize = resize2dLayer('Scale', 2);
net = replaceLayer(net, 'up2d_35_new', layer_resize);

The existing network contains functionLayer layers to perform the actions of a slice layer. Deep Learning HDL Toolbox does not support the functionLayer layer. Replace these layers with the dlhdl.layer.sliceLayer.

x0 = dlhdl.layer.sliceLayer(Name = 'slice_5',Groups = 2,GroupId = 2);
x1 = dlhdl.layer.sliceLayer(Name = 'slice_13',Groups = 2,GroupId = 2);
x2 = dlhdl.layer.sliceLayer(Name = 'slice_21',Groups = 2,GroupId = 2);
net = replaceLayer(net,'slice_5',x0);
net = replaceLayer(net,'slice_13',x1);
net = replaceLayer(net,'slice_21',x2);
net = net.initialize;

Extract the data set images and perform a test detection by using random images from the data set.

unzip vehicleDatasetImages.zip
numImagesToProcess = 3;
imageNames = dir(fullfile(pwd,'vehicleImages','*.jpg'));
imageNames = {imageNames.name}';
rng(0);
imageIndices = randi(length(imageNames),1,numImagesToProcess);
for idx = 1:numImagesToProcess
    testImage = imread(fullfile(pwd,'vehicleImages',imageNames{imageIndices(idx)}));
    % Pre-process the input
    img = imresize(testImage,[416,416]);
    % Detect YOLOv4 vehicle
    [bboxes,scores,labels] = detect(vehicleDetector, img);
    pause(0.5)
end
I_ann = insertObjectAnnotation(img,'rectangle',bboxes,scores);
h = figure('Name', 'YOLOv4-Tiny-Coco');
imshow(I_ann)

Deploy Single-Precision Network to FPGA

Define the target FPGA board programming interface by using the dlhdl.Target object. Specify that the interface is for a Xilinx board with an Ethernet interface.

hTarget = dlhdl.Target('Xilinx', 'Interface', 'Ethernet');

Prepare the network for deployment by creating a dlhdl.Workflow object. Specify the network and the bitstream name. Ensure that the bitstream name matches the data type and the FPGA board. In this example, the target FPGA board is the Xilinx Zynq® UltraScale+™ MPSoC ZCU102 board and the bitstream uses the single data type.

hW = dlhdl.Workflow("Network",net,"Bitstream",'zcu102_single',"Target",hTarget);

To compile the network and generate the instructions, weights, and biases for deployment, run the compile method of the dlhdl.Workflow object.

dn = compile(hW)

### Compiling network for Deep Learning FPGA prototyping ...

### Targeting FPGA bitstream zcu102_single.

### An output layer called 'Output1_conv_31' of type 'nnet.cnn.layer.RegressionOutputLayer' has been added to the provided network. This layer performs no operation during prediction and thus does not affect the output of the network.

### An output layer called 'Output2_conv_38' of type 'nnet.cnn.layer.RegressionOutputLayer' has been added to the provided network. This layer performs no operation during prediction and thus does not affect the output of the network.

### Optimizing network: Fused 'nnet.cnn.layer.BatchNormalizationLayer' into 'nnet.cnn.layer.Convolution2DLayer'

### The network includes the following layers:

1 'input_1' Image Input 416×416×3 images (SW Layer)

2 'conv_2' 2-D Convolution 32 3×3×3 convolutions with stride [2 2] and padding [1 1 1 1] (HW Layer)

3 'leaky_2' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

4 'conv_3' 2-D Convolution 64 3×3×32 convolutions with stride [2 2] and padding [1 1 1 1] (HW Layer)

5 'leaky_3' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

6 'conv_4' 2-D Convolution 64 3×3×64 convolutions with stride [1 1] and padding 'same' (HW Layer)

7 'leaky_4' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

8 'slice_5' dlhdl.layer.sliceLayer dlhdl.layer.sliceLayer (HW Layer)

9 'conv_6' 2-D Convolution 32 3×3×32 convolutions with stride [1 1] and padding 'same' (HW Layer)

10 'leaky_6' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

11 'conv_7' 2-D Convolution 32 3×3×32 convolutions with stride [1 1] and padding 'same' (HW Layer)

12 'leaky_7' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

13 'concat_8' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

14 'conv_9' 2-D Convolution 64 1×1×64 convolutions with stride [1 1] and padding 'same' (HW Layer)

15 'leaky_9' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

16 'concat_10' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

17 'maxPool_11' 2-D Max Pooling 2×2 max pooling with stride [2 2] and padding 'same' (HW Layer)

18 'conv_12' 2-D Convolution 128 3×3×128 convolutions with stride [1 1] and padding 'same' (HW Layer)

19 'leaky_12' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

20 'slice_13' dlhdl.layer.sliceLayer dlhdl.layer.sliceLayer (HW Layer)

21 'conv_14' 2-D Convolution 64 3×3×64 convolutions with stride [1 1] and padding 'same' (HW Layer)

22 'leaky_14' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

23 'conv_15' 2-D Convolution 64 3×3×64 convolutions with stride [1 1] and padding 'same' (HW Layer)

24 'leaky_15' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

25 'concat_16' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

26 'conv_17' 2-D Convolution 128 1×1×128 convolutions with stride [1 1] and padding 'same' (HW Layer)

27 'leaky_17' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

28 'concat_18' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

29 'maxPool_19' 2-D Max Pooling 2×2 max pooling with stride [2 2] and padding 'same' (HW Layer)

30 'conv_20' 2-D Convolution 256 3×3×256 convolutions with stride [1 1] and padding 'same' (HW Layer)

31 'leaky_20' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

32 'slice_21' dlhdl.layer.sliceLayer dlhdl.layer.sliceLayer (HW Layer)

33 'conv_22' 2-D Convolution 128 3×3×128 convolutions with stride [1 1] and padding 'same' (HW Layer)

34 'leaky_22' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

35 'conv_23' 2-D Convolution 128 3×3×128 convolutions with stride [1 1] and padding 'same' (HW Layer)

36 'leaky_23' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

37 'concat_24' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

38 'conv_25' 2-D Convolution 256 1×1×256 convolutions with stride [1 1] and padding 'same' (HW Layer)

39 'leaky_25' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

40 'concat_26' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

41 'maxPool_27' 2-D Max Pooling 2×2 max pooling with stride [2 2] and padding 'same' (HW Layer)

42 'conv_28' 2-D Convolution 512 3×3×512 convolutions with stride [1 1] and padding 'same' (HW Layer)

43 'leaky_28' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

44 'conv_29' 2-D Convolution 256 1×1×512 convolutions with stride [1 1] and padding 'same' (HW Layer)

45 'leaky_29' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

46 'conv_30' 2-D Convolution 512 3×3×256 convolutions with stride [1 1] and padding 'same' (HW Layer)

47 'leaky_30' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

48 'conv_31' 2-D Convolution 255 1×1×512 convolutions with stride [1 1] and padding 'same' (HW Layer)

49 'conv_34' 2-D Convolution 128 1×1×256 convolutions with stride [1 1] and padding 'same' (HW Layer)

50 'leaky_34' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

51 'layer' Resize Resize 2d layer with scale of [2 2]. (HW Layer)

52 'concat_36' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

53 'conv_37' 2-D Convolution 256 3×3×384 convolutions with stride [1 1] and padding 'same' (HW Layer)

54 'leaky_37' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

55 'conv_38' 2-D Convolution 255 1×1×256 convolutions with stride [1 1] and padding 'same' (HW Layer)

56 'Output1_conv_31' Regression Output mean-squared-error (SW Layer)

57 'Output2_conv_38' Regression Output mean-squared-error (SW Layer)

### Notice: The layer 'input_1' with type 'nnet.cnn.layer.ImageInputLayer' is implemented in software.

### Notice: The layer 'Output1_conv_31' with type 'nnet.cnn.layer.RegressionOutputLayer' is implemented in software.

### Notice: The layer 'Output2_conv_38' with type 'nnet.cnn.layer.RegressionOutputLayer' is implemented in software.

### Compiling layer group: conv_2>>leaky_4 ...

### Compiling layer group: conv_2>>leaky_4 ... complete.

### Compiling layer group: conv_6>>leaky_6 ...

### Compiling layer group: conv_6>>leaky_6 ... complete.

### Compiling layer group: conv_7>>leaky_7 ...

### Compiling layer group: conv_7>>leaky_7 ... complete.

### Compiling layer group: conv_9>>leaky_9 ...

### Compiling layer group: conv_9>>leaky_9 ... complete.

### Compiling layer group: maxPool_11>>leaky_12 ...

### Compiling layer group: maxPool_11>>leaky_12 ... complete.

### Compiling layer group: conv_14>>leaky_14 ...

### Compiling layer group: conv_14>>leaky_14 ... complete.

### Compiling layer group: conv_15>>leaky_15 ...

### Compiling layer group: conv_15>>leaky_15 ... complete.

### Compiling layer group: conv_17>>leaky_17 ...

### Compiling layer group: conv_17>>leaky_17 ... complete.

### Compiling layer group: maxPool_19>>leaky_20 ...

### Compiling layer group: maxPool_19>>leaky_20 ... complete.

### Compiling layer group: conv_22>>leaky_22 ...

### Compiling layer group: conv_22>>leaky_22 ... complete.

### Compiling layer group: conv_23>>leaky_23 ...

### Compiling layer group: conv_23>>leaky_23 ... complete.

### Compiling layer group: conv_25>>leaky_25 ...

### Compiling layer group: conv_25>>leaky_25 ... complete.

### Compiling layer group: maxPool_27>>leaky_29 ...

### Compiling layer group: maxPool_27>>leaky_29 ... complete.

### Compiling layer group: conv_30>>conv_31 ...

### Compiling layer group: conv_30>>conv_31 ... complete.

### Compiling layer group: conv_34>>leaky_34 ...

### Compiling layer group: conv_34>>leaky_34 ... complete.

### Compiling layer group: conv_37>>conv_38 ...

### Compiling layer group: conv_37>>conv_38 ... complete.

### Allocating external memory buffers:

offset_name offset_address allocated_space

_______________________ ______________ _________________

"InputDataOffset" "0x00000000" "79.2 MB"

"OutputResultOffset" "0x04f38000" "24.8 MB"

"SchedulerDataOffset" "0x067fe000" "10.4 MB"

"SystemBufferOffset" "0x0726e000" "11.4 MB"

"InstructionDataOffset" "0x07dd6000" "6.6 MB"

"ConvWeightDataOffset" "0x08468000" "50.8 MB"

"EndOffset" "0x0b728000" "Total: 183.2 MB"

### Network compilation complete.

dn = struct with fields:

weights: [1×1 struct]

instructions: [1×1 struct]

registers: [1×1 struct]

syncInstructions: [1×1 struct]

constantData: {}

ddrInfo: [1×1 struct]

resourceTable: [6×2 table]
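The allocated_space column in the compile log can be cross-checked against the offset_address column, because each buffer ends where the next one begins. The following is a minimal sketch in base MATLAB. The variable names are illustrative, the offsets are copied from the log above, and the assumption that the log reports sizes in units of 2^20 bytes is inferred from the printed values rather than taken from the toolbox documentation.

```matlab
% Offsets copied from the compile log (hex digits without the 0x prefix).
bufferNames = {'InputData','OutputResult','SchedulerData', ...
               'SystemBuffer','InstructionData','ConvWeightData'};
offsetHex   = {'00000000','04f38000','067fe000','0726e000', ...
               '07dd6000','08468000','0b728000'};   % last entry is EndOffset

addr    = hex2dec(offsetHex);   % byte addresses as a column vector
sizesMB = diff(addr) / 2^20;    % each buffer spans up to the next offset
totalMB = addr(end) / 2^20;     % EndOffset gives the total footprint

for k = 1:numel(bufferNames)
    fprintf('%-16s %6.1f MB\n', bufferNames{k}, sizesMB(k));
end
fprintf('%-16s %6.1f MB\n', 'Total', totalMB);
```

Rounded to one decimal place, the computed sizes agree with the values in the log, for example 79.2 MB for the input data buffer and 183.2 MB in total.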

To deploy the network on the Xilinx ZCU102 SoC hardware, run the deploy method of the dlhdl.Workflow object. This method uses the output of the compile method to program the FPGA board and download the network weights and biases. While it programs the FPGA device, the deploy method displays progress messages and the time required to deploy the network.

hW.deploy

### Programming FPGA Bitstream using Ethernet...
### Attempting to connect to the hardware board at 192.168.1.101...
### Connection successful
### Programming FPGA device on Xilinx SoC hardware board at 192.168.1.101...
### Copying FPGA programming files to SD card...
### Setting FPGA bitstream and devicetree for boot...
# Copying Bitstream zcu102_single.bit to /mnt/hdlcoder_rd
# Set Bitstream to hdlcoder_rd/zcu102_single.bit
# Copying Devicetree devicetree_dlhdl.dtb to /mnt/hdlcoder_rd
# Set Devicetree to hdlcoder_rd/devicetree_dlhdl.dtb
# Set up boot for Reference Design: 'AXI-Stream DDR Memory Access : 3-AXIM'
### Rebooting Xilinx SoC at 192.168.1.101...
### Reboot may take several seconds...
### Attempting to connect to the hardware board at 192.168.1.101...
### Connection successful
### Programming the FPGA bitstream has been completed successfully.
### Loading weights to Conv Processor.
### Conv Weights loaded. Current time is 18-Jan-2024 21:31:41

Convert the input image into a dlarray object and preprocess the image by using the preprocessYOLOv4Input function.

dlimg = dlarray(single(img),"SSC");

dlimg = preprocessYOLOv4Input(dlimg);

Get the activations of the network by using the predict method of the dlhdl.Workflow object.

hwprediction = cell(size(vehicleDetector.Network.OutputNames'));

[hwprediction{:},speed] = hW.predict(dlimg,'Profile','on');

### Finished writing input activations.

### Running single input activation.

Deep Learning Processor Profiler Performance Results

LastFrameLatency(cycles) LastFrameLatency(seconds) FramesNum Total Latency Frames/s

------------- ------------- --------- --------- ---------

Network 46260423 0.21027 1 45940307 4.8

conv_2 1193388 0.00542

conv_3 2308289 0.01049

conv_4 3379852 0.01536

conv_6 1000938 0.00455

conv_7 1001605 0.00455

conv_9 3368597 0.01531

maxPool_11 927010 0.00421

conv_12 3152610 0.01433

conv_14 842071 0.00383

conv_15 842147 0.00383

conv_17 3146009 0.01430

maxPool_19 497743 0.00226

conv_20 2973955 0.01352

conv_22 787920 0.00358

conv_23 787760 0.00358

conv_25 2973791 0.01352

memSeparator_0 323176 0.00147

maxPool_27 587201 0.00267

conv_28 3003340 0.01365

conv_29 1533063 0.00697

conv_30 1527012 0.00694

conv_31 1532738 0.00697

conv_34 405336 0.00184

layer 50036 0.00023

conv_37 5140510 0.02337

conv_38 2974102 0.01352

* The clock frequency of the DL processor is: 220MHz
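The seconds and Frames/s columns follow from the cycle counts and the processor clock reported in the footnote. The sketch below redoes that arithmetic in base MATLAB for the Network row of the table above. The variable names are illustrative, and the choice of Total Latency as the basis for Frames/s is inferred from the printed numbers, not from the toolbox documentation.

```matlab
clockHz       = 220e6;      % DL processor clock from the profiler footnote
latencyCycles = 46260423;   % Network row, LastFrameLatency(cycles)
totalCycles   = 45940307;   % Network row, Total Latency
numFrames     = 1;          % Network row, FramesNum

latencySeconds = latencyCycles / clockHz;             % about 0.21027 s
framesPerSec   = numFrames * clockHz / totalCycles;   % about 4.8 frames/s

fprintf('Latency: %.5f s, throughput: %.1f frames/s\n', ...
        latencySeconds, framesPerSec);
```

The same conversion applies to any layer row, which makes it easy to see which layers dominate the frame time.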

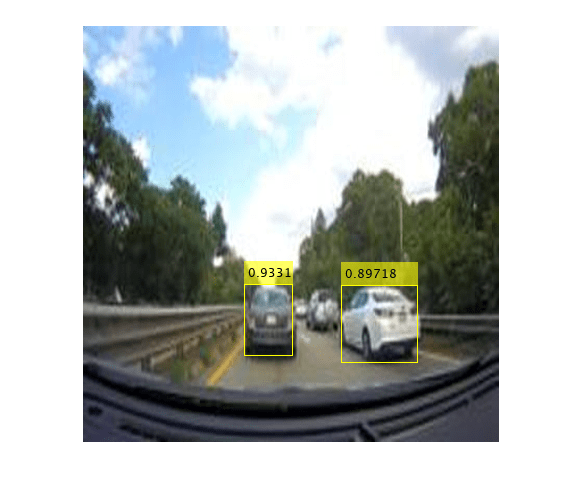

Process the FPGA output by using the processYOLOv4Output function and display the results.

anchorBoxes = vehicleDetector.AnchorBoxes;
outputNames = vehicleDetector.Network.OutputNames;
inputSize = vehicleDetector.InputSize;
classNames = vehicleDetector.ClassNames;
[bboxes, scores, labels] = processYOLOv4Output(vehicleDetector.AnchorBoxes,...
    inputSize, classNames, hwprediction, dlimg);
% Choose the strongest bounding box to prevent display of overlapping boxes.
[bboxesDisp, scoresDisp, ~] = selectStrongestBboxMulticlass(bboxes, scores,...
    labels , 'RatioType', 'Union', 'OverlapThreshold', 0.4);
I_ann = insertObjectAnnotation(img,'rectangle',bboxesDisp,scoresDisp);
h = figure('Name', 'YOLOv4');
imshow(I_ann)
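With 'RatioType' set to 'Union', the overlap between two boxes is the intersection area divided by the union area, and a weaker box is suppressed when that ratio exceeds 'OverlapThreshold'. The sketch below works the ratio out by hand for two hypothetical [x y width height] boxes. It is plain arithmetic for illustration only and is not the toolbox implementation.

```matlab
boxA = [10 10 20 20];   % hypothetical box, [x y width height]
boxB = [20 20 20 20];   % hypothetical box, shifted by 10 pixels in x and y

% Width and height of the overlapping region (zero if the boxes are disjoint).
overlapW = max(0, min(boxA(1)+boxA(3), boxB(1)+boxB(3)) - max(boxA(1), boxB(1)));
overlapH = max(0, min(boxA(2)+boxA(4), boxB(2)+boxB(4)) - max(boxA(2), boxB(2)));

interArea = overlapW * overlapH;                             % 100
unionArea = boxA(3)*boxA(4) + boxB(3)*boxB(4) - interArea;   % 700
ratio     = interArea / unionArea;                           % about 0.143

% The ratio is below the 0.4 threshold, so neither box would be suppressed.
keepBoth = ratio <= 0.4;
```

Lowering the threshold suppresses more boxes, and raising it allows more heavily overlapping detections to be displayed.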

Deploy Quantized Network to FPGA

Preprocess the input data set to prepare the images for int8 data type quantization.

if ~exist("vehicleImages_preprocessed","dir") % see if we've done this already
    movefile('vehicleImages','vehicleImages_preprocessed');
    unzip vehicleDatasetImages.zip
end
data_path = fullfile(pwd,'vehicleImages_preprocessed');
imageData = imageDatastore(data_path);
% Pre-process each image and save it back to the file
for i=1:length(imageData.Files)
    im = imread(imageData.Files{i});
    imPre = imresize(im, [416,416]);
    imPre = preprocessYOLOv4Input(imPre);
    imwrite(uint8(imPre), imageData.Files{i});
end

Quantize the network by using the dlquantizer object. Use the calibrate method to exercise the network by using sample inputs to collect the range information.

dlQuantObj = dlquantizer(net,'ExecutionEnvironment','FPGA');
dlQuantObj.calibrate(imageData);

Prepare the network for deployment by creating a dlhdl.Workflow object. Specify the quantized network and the bitstream name. The bitstream uses the int8 data type.

hW = dlhdl.Workflow("Network", dlQuantObj, "Bitstream", "zcu102_int8", "Target", hTarget);

To compile the network and generate the instructions, weights, and biases for deployment, run the compile method of the dlhdl.Workflow object.

dn = compile(hW)

### Compiling network for Deep Learning FPGA prototyping ...

### Targeting FPGA bitstream zcu102_int8.

### An output layer called 'Output1_conv_31' of type 'nnet.cnn.layer.RegressionOutputLayer' has been added to the provided network. This layer performs no operation during prediction and thus does not affect the output of the network.

### An output layer called 'Output2_conv_38' of type 'nnet.cnn.layer.RegressionOutputLayer' has been added to the provided network. This layer performs no operation during prediction and thus does not affect the output of the network.

### Optimizing network: Fused 'nnet.cnn.layer.BatchNormalizationLayer' into 'nnet.cnn.layer.Convolution2DLayer'

### The network includes the following layers:

1 'input_1' Image Input 416×416×3 images (SW Layer)

2 'conv_2' 2-D Convolution 32 3×3×3 convolutions with stride [2 2] and padding [1 1 1 1] (HW Layer)

3 'leaky_2' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

4 'conv_3' 2-D Convolution 64 3×3×32 convolutions with stride [2 2] and padding [1 1 1 1] (HW Layer)

5 'leaky_3' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

6 'conv_4' 2-D Convolution 64 3×3×64 convolutions with stride [1 1] and padding 'same' (HW Layer)

7 'leaky_4' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

8 'slice_5' dlhdl.layer.sliceLayer dlhdl.layer.sliceLayer (HW Layer)

9 'conv_6' 2-D Convolution 32 3×3×32 convolutions with stride [1 1] and padding 'same' (HW Layer)

10 'leaky_6' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

11 'conv_7' 2-D Convolution 32 3×3×32 convolutions with stride [1 1] and padding 'same' (HW Layer)

12 'leaky_7' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

13 'concat_8' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

14 'conv_9' 2-D Convolution 64 1×1×64 convolutions with stride [1 1] and padding 'same' (HW Layer)

15 'leaky_9' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

16 'concat_10' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

17 'maxPool_11' 2-D Max Pooling 2×2 max pooling with stride [2 2] and padding 'same' (HW Layer)

18 'conv_12' 2-D Convolution 128 3×3×128 convolutions with stride [1 1] and padding 'same' (HW Layer)

19 'leaky_12' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

20 'slice_13' dlhdl.layer.sliceLayer dlhdl.layer.sliceLayer (HW Layer)

21 'conv_14' 2-D Convolution 64 3×3×64 convolutions with stride [1 1] and padding 'same' (HW Layer)

22 'leaky_14' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

23 'conv_15' 2-D Convolution 64 3×3×64 convolutions with stride [1 1] and padding 'same' (HW Layer)

24 'leaky_15' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

25 'concat_16' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

26 'conv_17' 2-D Convolution 128 1×1×128 convolutions with stride [1 1] and padding 'same' (HW Layer)

27 'leaky_17' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

28 'concat_18' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

29 'maxPool_19' 2-D Max Pooling 2×2 max pooling with stride [2 2] and padding 'same' (HW Layer)

30 'conv_20' 2-D Convolution 256 3×3×256 convolutions with stride [1 1] and padding 'same' (HW Layer)

31 'leaky_20' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

32 'slice_21' dlhdl.layer.sliceLayer dlhdl.layer.sliceLayer (HW Layer)

33 'conv_22' 2-D Convolution 128 3×3×128 convolutions with stride [1 1] and padding 'same' (HW Layer)

34 'leaky_22' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

35 'conv_23' 2-D Convolution 128 3×3×128 convolutions with stride [1 1] and padding 'same' (HW Layer)

36 'leaky_23' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

37 'concat_24' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

38 'conv_25' 2-D Convolution 256 1×1×256 convolutions with stride [1 1] and padding 'same' (HW Layer)

39 'leaky_25' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

40 'concat_26' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

41 'maxPool_27' 2-D Max Pooling 2×2 max pooling with stride [2 2] and padding 'same' (HW Layer)

42 'conv_28' 2-D Convolution 512 3×3×512 convolutions with stride [1 1] and padding 'same' (HW Layer)

43 'leaky_28' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

44 'conv_29' 2-D Convolution 256 1×1×512 convolutions with stride [1 1] and padding 'same' (HW Layer)

45 'leaky_29' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

46 'conv_30' 2-D Convolution 512 3×3×256 convolutions with stride [1 1] and padding 'same' (HW Layer)

47 'leaky_30' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

48 'conv_31' 2-D Convolution 255 1×1×512 convolutions with stride [1 1] and padding 'same' (HW Layer)

49 'conv_34' 2-D Convolution 128 1×1×256 convolutions with stride [1 1] and padding 'same' (HW Layer)

50 'leaky_34' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

51 'layer' Resize Resize 2d layer with scale of [2 2]. (HW Layer)

52 'concat_36' Depth concatenation Depth concatenation of 2 inputs (HW Layer)

53 'conv_37' 2-D Convolution 256 3×3×384 convolutions with stride [1 1] and padding 'same' (HW Layer)

54 'leaky_37' Leaky ReLU Leaky ReLU with scale 0.1 (HW Layer)

55 'conv_38' 2-D Convolution 255 1×1×256 convolutions with stride [1 1] and padding 'same' (HW Layer)

56 'Output1_conv_31' Regression Output mean-squared-error (SW Layer)

57 'Output2_conv_38' Regression Output mean-squared-error (SW Layer)

### Notice: The layer 'input_1' with type 'nnet.cnn.layer.ImageInputLayer' is implemented in software.

### Notice: The layer 'Output1_conv_31' with type 'nnet.cnn.layer.RegressionOutputLayer' is implemented in software.

### Notice: The layer 'Output2_conv_38' with type 'nnet.cnn.layer.RegressionOutputLayer' is implemented in software.

### Compiling layer group: conv_2>>leaky_4 ...

### Compiling layer group: conv_2>>leaky_4 ... complete.

### Compiling layer group: conv_6>>leaky_6 ...

### Compiling layer group: conv_6>>leaky_6 ... complete.

### Compiling layer group: conv_7>>leaky_7 ...

### Compiling layer group: conv_7>>leaky_7 ... complete.

### Compiling layer group: conv_9>>leaky_9 ...

### Compiling layer group: conv_9>>leaky_9 ... complete.

### Compiling layer group: maxPool_11>>leaky_12 ...

### Compiling layer group: maxPool_11>>leaky_12 ... complete.

### Compiling layer group: conv_14>>leaky_14 ...

### Compiling layer group: conv_14>>leaky_14 ... complete.

### Compiling layer group: conv_15>>leaky_15 ...

### Compiling layer group: conv_15>>leaky_15 ... complete.

### Compiling layer group: conv_17>>leaky_17 ...

### Compiling layer group: conv_17>>leaky_17 ... complete.

### Compiling layer group: maxPool_19>>leaky_20 ...

### Compiling layer group: maxPool_19>>leaky_20 ... complete.

### Compiling layer group: conv_22>>leaky_22 ...

### Compiling layer group: conv_22>>leaky_22 ... complete.

### Compiling layer group: conv_23>>leaky_23 ...

### Compiling layer group: conv_23>>leaky_23 ... complete.

### Compiling layer group: conv_25>>leaky_25 ...

### Compiling layer group: conv_25>>leaky_25 ... complete.

### Compiling layer group: maxPool_27>>leaky_29 ...

### Compiling layer group: maxPool_27>>leaky_29 ... complete.

### Compiling layer group: conv_30>>conv_31 ...

### Compiling layer group: conv_30>>conv_31 ... complete.

### Compiling layer group: conv_34>>leaky_34 ...

### Compiling layer group: conv_34>>leaky_34 ... complete.

### Compiling layer group: conv_37>>conv_38 ...

### Compiling layer group: conv_37>>conv_38 ... complete.

### Allocating external memory buffers:

offset_name offset_address allocated_space

_______________________ ______________ ________________

"InputDataOffset" "0x00000000" "39.6 MB"

"OutputResultOffset" "0x0279c000" "6.2 MB"

"SchedulerDataOffset" "0x02dd4000" "3.0 MB"

"SystemBufferOffset" "0x030e0000" "2.9 MB"

"InstructionDataOffset" "0x033bd000" "3.3 MB"

"ConvWeightDataOffset" "0x03715000" "13.7 MB"

"EndOffset" "0x044ce000" "Total: 68.8 MB"

### Network compilation complete.

dn = struct with fields:

weights: [1×1 struct]

instructions: [1×1 struct]

registers: [1×1 struct]

syncInstructions: [1×1 struct]

constantData: {}

ddrInfo: [1×1 struct]

resourceTable: [6×2 table]

To deploy the network on the hardware, run the deploy method of the dlhdl.Workflow object.

hW.deploy

### Programming FPGA Bitstream using Ethernet...
### Attempting to connect to the hardware board at 192.168.1.101...
### Connection successful
### Programming FPGA device on Xilinx SoC hardware board at 192.168.1.101...
### Copying FPGA programming files to SD card...
### Setting FPGA bitstream and devicetree for boot...
# Copying Bitstream zcu102_int8.bit to /mnt/hdlcoder_rd
# Set Bitstream to hdlcoder_rd/zcu102_int8.bit
# Copying Devicetree devicetree_dlhdl.dtb to /mnt/hdlcoder_rd
# Set Devicetree to hdlcoder_rd/devicetree_dlhdl.dtb
# Set up boot for Reference Design: 'AXI-Stream DDR Memory Access : 3-AXIM'
### Rebooting Xilinx SoC at 192.168.1.101...
### Reboot may take several seconds...
### Attempting to connect to the hardware board at 192.168.1.101...
### Connection successful
### Programming the FPGA bitstream has been completed successfully.
### Loading weights to Conv Processor.
### Conv Weights loaded. Current time is 18-Jan-2024 21:35:10

Get the activations of the network using the predict method of the dlhdl.Workflow object.

hwprediction = cell(size(vehicleDetector.Network.OutputNames'));

[hwprediction{:},speed] = hW.predict(dlimg,'Profile','on');

### Finished writing input activations.

### Running single input activation.

Deep Learning Processor Profiler Performance Results

LastFrameLatency(cycles) LastFrameLatency(seconds) FramesNum Total Latency Frames/s

------------- ------------- --------- --------- ---------

Network 13505613 0.05402 1 13419673 18.6

conv_2 613674 0.00245

conv_3 712571 0.00285

conv_4 940825 0.00376

slice_5 210172 0.00084

conv_6 297565 0.00119

conv_7 297503 0.00119

conv_9 936891 0.00375

maxPool_11 373035 0.00149

conv_12 854799 0.00342

slice_13 105831 0.00042

conv_14 234361 0.00094

conv_15 234227 0.00094

conv_17 853371 0.00341

maxPool_19 208335 0.00083

conv_20 775766 0.00310

slice_21 52481 0.00021

conv_22 213165 0.00085

conv_23 213514 0.00085

conv_25 775916 0.00310

memSeparator_0 89294 0.00036

maxPool_27 219552 0.00088

conv_28 780302 0.00312

conv_29 405659 0.00162

conv_30 402281 0.00161

conv_31 405040 0.00162

conv_34 112118 0.00045

layer 24682 0.00010

conv_37 1386418 0.00555

conv_38 776041 0.00310

* The clock frequency of the DL processor is: 250MHz

The quantized network reaches 18.6 frames/s, compared with 4.8 frames/s for the single-precision network. The int8 bitstream also runs the deep learning processor at 250 MHz rather than 220 MHz.
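The size of the gain can be worked out from the two profiler tables and the two external memory tables. The figures in the sketch below are copied from the logs in this example, and the variable names are illustrative.

```matlab
fpsSingle = 4.8;       fpsInt8 = 18.6;      % Frames/s, Network row
latSingle = 0.21027;   latInt8 = 0.05402;   % LastFrameLatency(seconds)
memSingle = 183.2;     memInt8 = 68.8;      % total external memory in MB

throughputGain  = fpsInt8 / fpsSingle;   % about 3.9 times more frames per second
latencyGain     = latSingle / latInt8;   % about 3.9 times lower latency
memoryReduction = memSingle / memInt8;   % about 2.7 times less external memory

fprintf('Throughput x%.1f, latency x%.1f, memory x%.1f\n', ...
        throughputGain, latencyGain, memoryReduction);
```

These ratios describe this one input image on the ZCU102 board. Detection accuracy after quantization should be checked separately, because the profiler reports only timing.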

Process the FPGA output by using the processYOLOv4Output function and display the results.

[bboxes, scores, labels] = processYOLOv4Output(vehicleDetector.AnchorBoxes, inputSize, classNames, hwprediction, dlimg);
% Choose the strongest bounding box to prevent display of overlapping boxes.
[bboxesDisp, scoresDisp, ~] = selectStrongestBboxMulticlass(bboxes, scores, labels ,...
    'RatioType', 'Union', 'OverlapThreshold', 0.4);
I_ann = insertObjectAnnotation(img,'rectangle',bboxesDisp,scoresDisp);
h = figure('Name', 'YOLOv4 INT8');
imshow(I_ann)

Helper Functions

function output = preprocessYOLOv4Input(image)
% Pre-process the input
image = imresize(image,[416,416]);
output = single(rescale(image));
end

function [bboxes, scores, labels] = processYOLOv4Output(anchorBoxes, inputSize, classNames, features, img)
% This function converts the feature maps from multiple detection heads to
% bounding boxes, scores and labels

% Breaks down the raw output from predict function into Confidence score,
% X, Y, Width, Height and Class probabilities for each output from
% detection head
predictions = iYolov4Transform(features, anchorBoxes);

% Initialize parameters for post-processing
params.Threshold = 0.5;
params.NetworkInputSize = inputSize;

% Post-process the predictions to get bounding boxes, scores and labels
[bboxes, scores, labels] = postprocessMultipleDetections(anchorBoxes, classNames, predictions, params);
end

function [bboxes, scores, labels] = postprocessMultipleDetections(anchorBoxes,classNames, YPredData, params)
% Post-process the predictions to get bounding boxes, scores and labels

% YpredData is a (x,8) cell array, where x = number of detection heads
% Information in each column is:
% column 1 -> confidence scores
% column 2 to column 5 -> X offset, Y offset, Width, Height of anchor boxes
% column 6 -> class probabilities
% column 7-8 -> copy of width and height of anchor boxes

% Initialize parameters for post-processing
classes = classNames;
predictions = YPredData;
extractPredictions = cell(size(predictions));

% Extract dlarray data
for i = 1:size(extractPredictions,1)
    for j = 1:size(extractPredictions,2)
        extractPredictions{i,j} = extractdata(predictions{i,j});
    end
end

% Storing the values of columns 2 to 5 of extractPredictions
% Columns 2 to 5 represent information about X-coordinate, Y-coordinate, Width
% and Height of predicted anchor boxes
extractedCoordinates = cell(size(predictions,1),4);
for i = 1:size(predictions,1)
    for j = 2:5
        extractedCoordinates{i,j-1} = extractPredictions{i,j};
    end
end

% Convert predictions from grid cell coordinates to box coordinates.
boxCoordinates = anchorBoxGenerator(anchorBoxes, extractedCoordinates, params.NetworkInputSize);
% Replace grid cell coordinates in extractPredictions with box coordinates
for i = 1:size(YPredData,1)
    for j = 2:5
        extractPredictions{i,j} = single(boxCoordinates{i,j-1});
    end
end

% 1. Convert bboxes from spatial to pixel dimension
% 2. Combine the prediction from different heads.
% 3. Filter detections based on threshold.

% Reshaping the matrices corresponding to confidence scores and
% bounding boxes
detections = cell(size(YPredData,1),6);
for i = 1:size(detections,1)
    for j = 1:5
        detections{i,j} = reshapePredictions(extractPredictions{i,j});
    end
end
% Reshaping the matrices corresponding to class probabilities
numClasses = repmat({numel(classes)},[size(detections,1),1]);
for i = 1:size(detections,1)
    detections{i,6} = reshapeClasses(extractPredictions{i,6},numClasses{i,1});
end

% cell2mat converts the cell of matrices into one matrix, this combines the
% predictions of all detection heads
detections = cell2mat(detections);

% Getting the most probable class and corresponding index
[classProbs, classIdx] = max(detections(:,6:end),[],2);
detections(:,1) = detections(:,1).*classProbs;
detections(:,6) = classIdx;

% Keep detections whose confidence score is at least the threshold.
detections = detections(detections(:,1) >= params.Threshold,:);

[bboxes, scores, labels] = getBoxes(detections);
end

function [bboxes, scores, classIds] = getBoxes(detections)
% Scales the normalized boxes to the 416-by-416 network input size and
% converts them from centre to top-left format

% Obtain bounding boxes and class data for pre-processed image
scores = detections(:,1);
bboxPred = detections(:,2:5);
classIds = detections(:,6);

% Resize boxes to actual image size
scale = [416 416 416 416];
bboxPred = bboxPred.*scale;

% Convert x and y position of detections from centre to top-left.
bboxes = convertCenterToTopLeft(bboxPred);
end

function x = reshapePredictions(pred)
% Reshapes the matrices corresponding to scores, X, Y, Width and Height to
% make them compatible for combining the outputs of different detection
% heads
[h,w,c,n] = size(pred);
x = reshape(pred,h*w*c,1,n);
end

function x = reshapeClasses(pred,numClasses)
% Reshapes the matrices corresponding to the class probabilities, to make it
% compatible for combining the outputs of different detection heads
[h,w,c,n] = size(pred);
numAnchors = c/numClasses;
x = reshape(pred,h*w,numClasses,numAnchors,n);
x = permute(x,[1,3,2,4]);
[h,w,c,n] = size(x);
x = reshape(x,h*w,c,n);
end

function bboxes = convertCenterToTopLeft(bboxes)
% Convert x and y position of detections from centre to top-left.
bboxes(:,1) = bboxes(:,1) - bboxes(:,3)/2 + 0.5;
bboxes(:,2) = bboxes(:,2) - bboxes(:,4)/2 + 0.5;
bboxes = floor(bboxes);
bboxes(bboxes<1) = 1;
end

function tiledAnchors = anchorBoxGenerator(anchorBoxes, YPredCell, inputImageSize)
% Convert grid cell coordinates to box coordinates.

% Generate tiled anchor offset.
tiledAnchors = cell(size(YPredCell));
for i = 1:size(YPredCell,1)
    anchors = anchorBoxes{i,:};
    [h,w,~,n] = size(YPredCell{i,1});
    [tiledAnchors{i,2},tiledAnchors{i,1}] = ndgrid(0:h-1,0:w-1,1:size(anchors,1),1:n);
    [~,~,tiledAnchors{i,3}] = ndgrid(0:h-1,0:w-1,anchors(:,2),1:n);
    [~,~,tiledAnchors{i,4}] = ndgrid(0:h-1,0:w-1,anchors(:,1),1:n);
end

for i = 1:size(YPredCell,1)
    [h,w,~,~] = size(YPredCell{i,1});
    tiledAnchors{i,1} = double((tiledAnchors{i,1} + YPredCell{i,1})./w);
    tiledAnchors{i,2} = double((tiledAnchors{i,2} + YPredCell{i,2})./h);
    tiledAnchors{i,3} = double((tiledAnchors{i,3}.*YPredCell{i,3})./inputImageSize(2));
    tiledAnchors{i,4} = double((tiledAnchors{i,4}.*YPredCell{i,4})./inputImageSize(1));
end
end

function predictions = iYolov4Transform(YPredictions, anchorBoxes)
% This function breaks down the raw output from predict function into
% Confidence score, X, Y, Width,
% Height and Class probabilities for each output from detection head
predictions = cell(size(YPredictions,1),size(YPredictions,2) + 2);

for idx = 1:size(YPredictions,1)
    % Get the required info on feature size.
    numChannelsPred = size(YPredictions{idx},3); % number of channels in a feature map
    numAnchors = size(anchorBoxes{idx},1); % number of anchor boxes per grid
    numPredElemsPerAnchors = numChannelsPred/numAnchors;
    channelsPredIdx = 1:numChannelsPred;
    predictionIdx = ones([1,numAnchors.*5]);

    % X positions.
    startIdx = 1;
    endIdx = numChannelsPred;
    stride = numPredElemsPerAnchors;
    predictions{idx,2} = YPredictions{idx}(:,:,startIdx:stride:endIdx,:);
    predictionIdx = [predictionIdx startIdx:stride:endIdx];

    % Y positions.
    startIdx = 2;
    endIdx = numChannelsPred;
    stride = numPredElemsPerAnchors;
    predictions{idx,3} = YPredictions{idx}(:,:,startIdx:stride:endIdx,:);
    predictionIdx = [predictionIdx startIdx:stride:endIdx];

    % Width.
    startIdx = 3;
    endIdx = numChannelsPred;
    stride = numPredElemsPerAnchors;
    predictions{idx,4} = YPredictions{idx}(:,:,startIdx:stride:endIdx,:);
    predictionIdx = [predictionIdx startIdx:stride:endIdx];

    % Height.
    startIdx = 4;
    endIdx = numChannelsPred;
    stride = numPredElemsPerAnchors;
    predictions{idx,5} = YPredictions{idx}(:,:,startIdx:stride:endIdx,:);
    predictionIdx = [predictionIdx startIdx:stride:endIdx];

    % Confidence scores.
    startIdx = 5;
    endIdx = numChannelsPred;
    stride = numPredElemsPerAnchors;
    predictions{idx,1} = YPredictions{idx}(:,:,startIdx:stride:endIdx,:);
    predictionIdx = [predictionIdx startIdx:stride:endIdx];

    % Class probabilities.
    classIdx = setdiff(channelsPredIdx,predictionIdx);
    predictions{idx,6} = YPredictions{idx}(:,:,classIdx,:);
end

for i = 1:size(predictions,1)
    predictions{i,7} = predictions{i,4};
    predictions{i,8} = predictions{i,5};
end

% Apply activation to the predicted cell array
% Apply sigmoid activation to columns 1-3 (Confidence score, X, Y)
for i = 1:size(predictions,1)
    for j = 1:3
        predictions{i,j} = sigmoid(predictions{i,j});
    end
end
% Apply exponentiation to columns 4-5 (Width, Height)
for i = 1:size(predictions,1)
    for j = 4:5
        predictions{i,j} = exp(predictions{i,j});
    end
end
% Apply sigmoid activation to column 6 (Class probabilities)
for i = 1:size(predictions,1)
    for j = 6
        predictions{i,j} = sigmoid(predictions{i,j});
    end
end
end
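The iYolov4Transform helper separates the channels of each detection head by striding through them. Each anchor owns a consecutive group of channels ordered as X, Y, width, height, confidence, and then the class scores. The sketch below reproduces only that index arithmetic for a 255-channel head such as conv_31. The value of three anchors per head is an assumption made for illustration. In the example, the helper reads the anchor count from the anchorBoxes input.

```matlab
numChannelsPred = 255;   % output channels of conv_31 and conv_38 in the compile log
numAnchors      = 3;     % assumed for illustration; taken from anchorBoxes in the helper
stride          = numChannelsPred / numAnchors;   % 85 values per anchor

xIdx    = 1:stride:numChannelsPred;   % [1 86 171]
yIdx    = 2:stride:numChannelsPred;   % [2 87 172]
wIdx    = 3:stride:numChannelsPred;   % [3 88 173]
hIdx    = 4:stride:numChannelsPred;   % [4 89 174]
confIdx = 5:stride:numChannelsPred;   % [5 90 175]

% Every channel not used for box geometry or confidence holds a class score.
classIdx   = setdiff(1:numChannelsPred, [xIdx yIdx wIdx hIdx confIdx]);
numClasses = numel(classIdx) / numAnchors;   % 80
```

Under this assumption, each anchor carries 5 box values plus 80 class scores, which accounts for all 255 channels of the head.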

See Also

dlhdl.Workflow | dlhdl.Target | compile | deploy | predict