Deploy Classification Application Using Mobilenet-V3 TensorFlow Lite Model on Host and Raspberry Pi

This example shows how to simulate and generate code for a classification application that performs inference using a TensorFlow™ Lite model. This example uses a pretrained TensorFlow Lite model for the image classification network Mobilenet-V3 that is available on the TensorFlow webpage for pretrained models: https://ai.google.dev/edge/litert/models/trained. This workflow can be used for both int8 and float TensorFlow Lite models.

This example also shows how to import data from Python®.

This example is supported for host Windows® and Linux® platforms.

Download Model

Run this code to download the image classification network Mobilenet-V3 from Kaggle and extract it into the mobilenetv3 folder. If the model file already exists, the download is skipped.

if ~exist("mobilenetv3/1.tflite","file")
    disp('Downloading MobilenetV3 model file...');
    url = "https://www.kaggle.com/models/google/mobilenet-v3/TfLite/small-100-224-classification-metadata/1/download/";
    websave("mobilenetv3.tar.gz", url);
    untar("mobilenetv3.tar.gz","mobilenetv3")
end

Downloading MobilenetV3 model file...

The tflite_classification_predict Entry-Point Function

The loadTFLiteModel function loads the Mobilenet-V3 model into a TFLiteModel object. The properties of this object contain information about the model such as the number and size of inputs and outputs of the model.

net = loadTFLiteModel('mobilenetv3/1.tflite');

disp(net.InputSize);

{[224 224 3]}

In this example, you generate code for the entry-point function tflite_classification_predict.m. This function loads the Mobilenet-V3 model into a persistent network object by using the loadTFLiteModel function.

To optimize performance, after creating the network object, set the NumThreads property based on the number of threads available on your target hardware.

The tflite_classification_predict function performs prediction by passing the network object to the predict function. Subsequent calls to this function reuse this persistent object.

type tflite_classification_predict.m

function out = tflite_classification_predict(in)
% Copyright 2023-2024 The MathWorks, Inc.

persistent net;
if isempty(net)
    net = loadTFLiteModel('mobilenetv3/1.tflite');
    % To optimize performance, set NumThreads property based on the number
    % of threads available on the hardware board
    net.NumThreads = 4;
end
net.Mean = 0;
net.StandardDeviation = 255;
out = net.predict(in);
end
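The entry-point function sets Mean to 0 and StandardDeviation to 255. The sketch below illustrates the arithmetic this is assumed to request, namely that predict normalizes each input element as (in - Mean) ./ StandardDeviation. It uses only core MATLAB and a random stand-in image instead of the loaded model, so it shows the intended scaling, not the TFLiteModel internals.

```matlab
% Sketch (assumption): normalization (in - Mean) ./ StandardDeviation with
% Mean = 0 and StandardDeviation = 255, applied to a stand-in image.
in = single(randi([0 255], 224, 224, 3));   % random pixel values in [0, 255]
meanValue = 0;                              % same value as net.Mean
stdValue = 255;                             % same value as net.StandardDeviation
normalized = (in - meanValue) ./ stdValue;  % maps [0, 255] onto [0, 1]
fprintf('Normalized range: [%g, %g]\n', min(normalized(:)), max(normalized(:)));
```

With these values, 8-bit pixel intensities are scaled into the [0, 1] range.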

Read Labels Map

Read the labels file associated with the TFLite model.

labelsFile = importdata('labels.txt');

Read and Preprocess Input Image

Read the image that you intend to classify.

I = imread('peppers.png');

imshow(I);

Alternatively, you can import the input data from Python. In the supporting files for this example, a Python input image is saved as the pythoninputImage.mat file.

Python supports only TFLite models that use the NHWC format (for non-RNN models) or the NTC and NC formats (for RNN models). By contrast, MATLAB® accepts the HWCN format for non-RNN models and the CNT and CN formats for RNN models. Here, N is the batch size, H is the height, W is the width, C is the number of channels, and T is the sequence length.

Therefore, if you import the input from Python, you must convert it to the layout that MATLAB accepts.
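Converting between these layouts amounts to reordering array dimensions. The sketch below shows one way to do this with permute for each pair of formats listed above. The array sizes are arbitrary stand-ins, and the ConvertPythonTFLiteInput helper in the supporting files may be implemented differently.

```matlab
% Sketch: reorder Python (TFLite) layouts into the layouts MATLAB accepts.
% Sizes are arbitrary stand-ins; a batch of 2 keeps N from being dropped.

% Non-RNN models: Python NHWC -> MATLAB HWCN
nhwc = rand(2, 224, 224, 3, 'single');    % [N H W C]
hwcn = permute(nhwc, [2 3 4 1]);          % [H W C N]

% RNN models with a sequence dimension: Python NTC -> MATLAB CNT
ntc = rand(2, 10, 8, 'single');           % [N T C]
cnt = permute(ntc, [3 1 2]);              % [C N T]

% RNN models without a sequence dimension: Python NC -> MATLAB CN
nc = rand(2, 8, 'single');                % [N C]
cn = permute(nc, [2 1]);                  % [C N]

disp(size(hwcn));   % size is [224 224 3 2]
disp(size(cnt));    % size is [8 2 10]
disp(size(cn));     % size is [8 2]
```

When N is 1, as for the single image in this example, MATLAB drops the trailing singleton dimension, so size reports [224 224 3] for the converted input.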

Load the input that Python read from the pythoninputImage.mat file. This input is stored in the pythoninputformat variable.

load('pythoninputImage.mat');

The Python input has the shape NHWC, and its size is [1 224 224 3]. Convert the input to the HWCN shape that MATLAB accepts.

I1 = ConvertPythonTFLiteInput(net, pythoninputformatInput);

If you do not import the input image from Python, resize it to match the 224-by-224 input size of the TFLite model.

I1 = imresize(I,[224 224]);

Workflow 1: Perform Classification by Using Simulation on Host

Run the simulation by passing the input image I1 to the entry-point function.

output = tflite_classification_predict(I1);

Workflow 2: Perform Classification by Running Generated MEX on Host

Additional Prerequisites

MATLAB® Coder™

This example uses the codegen command to generate a MEX function that runs on the host platform.

Generate MEX Function

To generate a MEX function for a specified entry-point function, create a code configuration object for a MEX function. Set the target language to C++.

cfg = coder.config('mex'); cfg.TargetLang = 'C++';

Run the codegen command to generate the MEX function tflite_classification_predict_mex on the host platform.

codegen -config cfg tflite_classification_predict -args ones(224,224,3,'single')

Code generation successful.

Run Generated MEX

Run the generated MEX by passing the input image I1.

output = tflite_classification_predict_mex(single(I1));

Workflow 3: Generate Code for Classification Application, Deploy and Run on Raspberry Pi

Additional Prerequisites

MATLAB Coder™

Embedded Coder®

MATLAB Support Package for Raspberry Pi® Hardware. To install this support package, use the Add-On Explorer.

Third-Party Prerequisites

Raspberry Pi hardware

TFLite library (on the target ARM® hardware)

On the Raspberry Pi hardware, set the environment variable TFLITE_PATH to the location of the TFLite library. For more information on how to build the TFLite library and set the environment variables, see Prerequisites for Deep Learning with TensorFlow Lite Models (Deep Learning Toolbox).

Set Up Connection with Raspberry Pi

Use the MATLAB Support Package for Raspberry Pi Hardware function raspi to create a connection to the Raspberry Pi.

The raspi function reuses the connection settings (device address, user name, and password) from the most recent successful connection to the Raspberry Pi hardware. This example establishes an SSH connection to the Raspberry Pi hardware using the settings stored in memory.

r = raspi;

If this is the first time connecting to a Raspberry Pi board or if you want to connect to a different board, use the following line of code:

r = raspi('raspiname','username','password');

Replace raspiname with the name of your Raspberry Pi board, username with your user name, and password with your password.

Copy TFLite Model to Target Hardware

Copy the TFLite model to the Raspberry Pi board. On the hardware board, set the environment variable TFLITE_MODEL_PATH to the location of the TFLite model. For more information on setting environment variables, see Prerequisites for Deep Learning with TensorFlow Lite Models (Deep Learning Toolbox).

In the following commands, replace targetDir with the destination folder of the TFLite model on the Raspberry Pi board.

targetDir = '/home/pi'; r.putFile('mobilenetv3/1.tflite',targetDir)

Generate PIL MEX Function

To generate a PIL MEX function for a specified entry-point function, create a code configuration object for a static library and set the verification mode to 'PIL'. Set the target language to C++.

cfg = coder.config('lib','ecoder',true); cfg.TargetLang = 'C++'; cfg.VerificationMode = 'PIL';

Create a coder.hardware object for Raspberry Pi and attach it to the code generation configuration object.

hw = coder.hardware('Raspberry Pi');

cfg.Hardware = hw;

In MATLAB, run the codegen command to generate the PIL MEX function tflite_classification_predict_pil.

codegen -config cfg tflite_classification_predict -args ones(224,224,3,'single')

Run Generated PIL

Run the generated PIL MEX function by passing the input image I1.

output = tflite_classification_predict_pil(single(I1));

### Starting application: 'codegen\lib\tflite_classification_predict\pil\tflite_classification_predict.elf'

To terminate execution: clear tflite_classification_predict_pil

### Launching application tflite_classification_predict.elf...

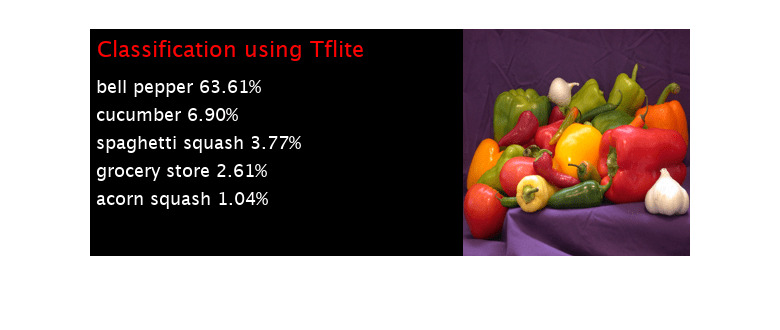

Get Top Five Labels

[~, top5] = maxk(output,5); disp(labelsFile(top5));

{'bell pepper' }

{'cucumber' }

{'spaghetti squash'}

{'grocery store' }

{'acorn squash' }
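maxk returns the indices of the largest values in output, and those indices select the matching entries of labelsFile. The sketch below reproduces that lookup with sort on hypothetical scores and labels, so it runs without the model. For distinct scores, the first k sorted indices are the same indices that maxk returns.

```matlab
% Hypothetical scores and labels standing in for output and labelsFile.
scores = [0.1 2.5 -1.0 3.7 0.9 1.2];
labels = {'apple'; 'banana'; 'cherry'; 'date'; 'elderberry'; 'fig'};
k = 3;

[~, order] = sort(scores, 'descend');   % indices of the scores, largest first
topIdx = order(1:k);                    % indices of the k highest scores
topLabels = labels(topIdx)              % labels for those indices, best first
```

Here topIdx is [4 2 6], so topLabels lists 'date', 'banana', and 'fig'.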

Get Prediction Scores

This network does not contain a softmax layer, so apply the softmax function to the output to convert the raw scores into prediction scores (class probabilities). If your network already has a softmax layer, skip this step.

predictionScores = softmax(output);
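For reference, softmax maps each raw score x_i to exp(x_i) / sum_j exp(x_j). The following plain-MATLAB sketch applies that formula to hypothetical raw scores and subtracts the maximum first so that exp cannot overflow. It illustrates the math only and is not the implementation of the softmax function called above.

```matlab
% Numerically stable softmax over a vector of hypothetical raw scores.
logits = single([2.0 1.0 0.1 -1.5]);         % stand-in for output
shifted = logits - max(logits);              % subtracting the max avoids overflow in exp
probs = exp(shifted) ./ sum(exp(shifted));   % positive values that sum to 1
[~, bestIdx] = max(probs);                   % index of the most likely class
```

Because softmax is monotonic, it does not change which labels appear in the top five. It only rescales the scores into probabilities.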

Display Prediction Scores on Image

DisplayPredictionsOnImage(predictionScores, I);

See Also

Functions

codegen | predict (Deep Learning Toolbox) | loadTFLiteModel (Deep Learning Toolbox) | TFLiteModel (Deep Learning Toolbox)

Topics

- Prerequisites for Deep Learning with TensorFlow Lite Models (Deep Learning Toolbox)

- Deploy Super Resolution Application That Uses TensorFlow Lite (TFLite) Model on Host and Raspberry Pi (Deep Learning Toolbox)