Compress Network Learnables in bfloat16 Format

Deep learning networks typically use the single-precision floating-point data type to store information such as inputs, weights, and activations. Each element stored in single-precision format occupies 32 bits of memory, so the memory footprint of a deep learning network can be very large. The Brain Floating Point format (bfloat16) is a truncated version of the single-precision floating-point format that occupies only 16 bits. bfloat16 preserves approximately the same numeric range as single precision because it retains the same number of exponent bits (8). The trade-off is reduced precision: bfloat16 has only 7 fraction bits, compared with 23 in single precision.
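To make the bit layout concrete, the following is a minimal sketch that emulates bfloat16 storage in MATLAB by keeping only the upper 16 bits of a single-precision value. It assumes simple truncation of the low 16 bits; the conversion that the code generator actually performs may round instead, and the variable names are illustrative only.

```matlab
% Sketch: emulate bfloat16 storage of a single-precision value by keeping
% the upper 16 bits (1 sign bit, 8 exponent bits, 7 fraction bits).
% Assumption: plain truncation; a production conversion may round instead.
x = single(pi);

bits32 = typecast(x, 'uint32');            % raw IEEE 754 bit pattern of x
bits16 = uint16(bitshift(bits32, -16));    % upper 16 bits = bfloat16 payload

% Expand back to single by appending 16 zero fraction bits.
restored = typecast(bitshift(uint32(bits16), 16), 'single');

relErr = abs(double(x) - double(restored)) / abs(double(x));
fprintf('original : %.8f\n', x);
fprintf('bfloat16 : %.8f\n', restored);    % 3.140625 for this input
fprintf('rel. err : %.2e\n', relErr);
```

With 7 fraction bits, the relative error from truncation stays below 2^-7, which is why networks that tolerate small weight perturbations lose little accuracy.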

For deep learning models that are resilient to precision loss, compressing learnables from single precision to bfloat16 roughly halves the memory needed to store them, with little change in accuracy. The process does not require data or a preprocessing step, and it also improves inference speed. This enables deployment of large deep learning networks to devices that have low computational power and limited memory. Any hardware that supports the single-precision floating-point data type can use bfloat16 learnables compression; the processor does not need native bfloat16 support. For example, you can use bfloat16 learnables compression on ARM-M, ARM-A, and Intel processors.
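As a rough illustration of the memory savings, the sketch below counts the learnables of a tiny network and compares their storage at 4 bytes (single) and 2 bytes (bfloat16) per element. The two-layer network is hypothetical and exists only to make the arithmetic concrete; the estimate covers the learnables alone, not the rest of the generated code.

```matlab
% Sketch: estimate learnables storage for a tiny, hypothetical network.
layers = [
    featureInputLayer(10)
    fullyConnectedLayer(5)];
net = dlnetwork(layers);   % initializes weights (5-by-10) and bias (5-by-1)

% net.Learnables is a table; the Value column holds one array per parameter.
numLearnables = sum(cellfun(@numel, net.Learnables.Value));

bytesSingle   = 4 * numLearnables;   % 32 bits per element
bytesBfloat16 = 2 * numLearnables;   % 16 bits per element

fprintf('%d learnables: %d bytes as single, %d bytes as bfloat16\n', ...
    numLearnables, bytesSingle, bytesBfloat16);
```

The same counting approach applies to a larger pretrained network, where the learnables usually dominate the size of the generated binaries.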

Learnables compression in bfloat16 format is supported only for generating generic C/C++ code (code that does not depend on third-party libraries).

Supported Layers and Classes

You can perform learnables compression in bfloat16 format and generate generic C/C++ code for these layers:

- Bidirectional LSTM layer (bilstmLayer (Deep Learning Toolbox))

- 2-D convolutional layer (convolution2dLayer (Deep Learning Toolbox))

- Fully connected layer (fullyConnectedLayer (Deep Learning Toolbox))

- Channel-wise convolution layer (groupedConvolution2dLayer (Deep Learning Toolbox))

- Gated recurrent unit (GRU) layer (gruLayer (Deep Learning Toolbox))

- Gated recurrent unit (GRU) projected layer (gruProjectedLayer (Deep Learning Toolbox))

- Long short-term memory (LSTM) layer (lstmLayer (Deep Learning Toolbox))

- LSTM projected layer (lstmProjectedLayer (Deep Learning Toolbox))

Generate Code

Generate code with learnables compression in bfloat16 format by setting the LearnablesCompression property of your coder.DeepLearningCodeConfig object dlcfg:

dlcfg = coder.DeepLearningConfig(TargetLibrary = 'none');

dlcfg.LearnablesCompression = 'bfloat16';

Alternatively, in the MATLAB® Coder™ app or the Configuration Parameters dialog box, on the Deep Learning tab, set Target library to none. Then set the Learnables Compression property to bfloat16.

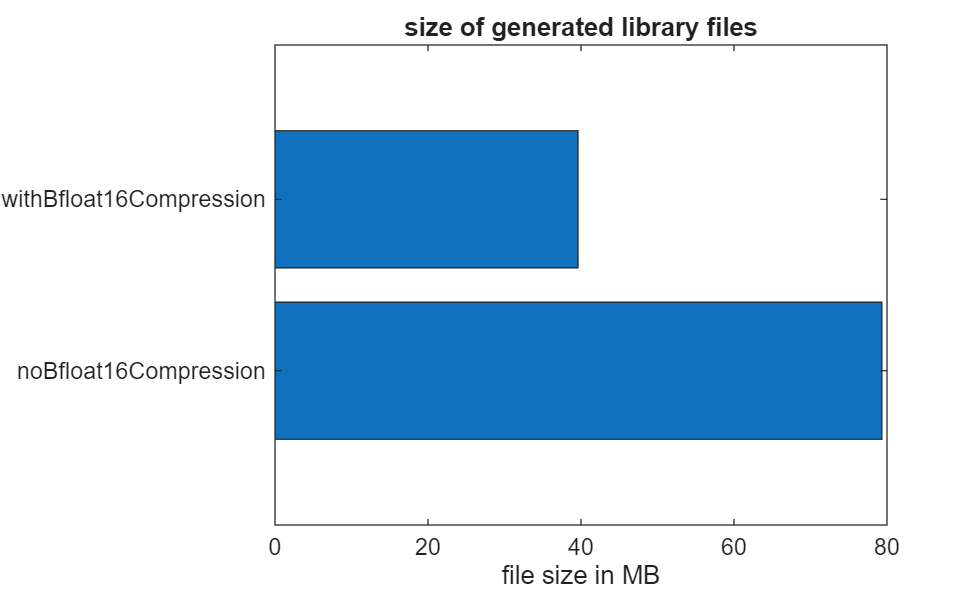
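For command-line workflows, the deep learning configuration object must be attached to a code configuration object before you run codegen. The following is a minimal sketch of that wiring. The entry-point function myPredict and the 224-by-224-by-3 input size are hypothetical placeholders, not part of this topic.

```matlab
% Sketch: attach a bfloat16-enabled deep learning configuration to a
% static library code configuration and generate code.
% Assumptions: myPredict is a hypothetical entry-point function, and the
% input size below is a placeholder. Replace both with your own.
cfg = coder.config('lib');
cfg.TargetLang = 'C';

dlcfg = coder.DeepLearningConfig(TargetLibrary = 'none');
dlcfg.LearnablesCompression = 'bfloat16';
cfg.DeepLearningConfig = dlcfg;

inputExample = ones(224, 224, 3, 'single');   % assumed input size
codegen -config cfg myPredict -args {inputExample}
```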

Compare Generated File Sizes With and Without Bfloat16 Compression

This example shows how using bfloat16 compression affects the file size of generated binary files. Examine the entry-point function darknet_detect. The darknet_detect function uses the imagePretrainedNetwork (Deep Learning Toolbox) function to load a pretrained DarkNet-19 network. The function creates a dlarray object that inputs and outputs variables of the single data type. For more information, see Code Generation for dlarray.

type darknet_detect.m

function [out] = darknet_detect(in)

% Copyright 2024 The MathWorks, Inc.

%#codegen

persistent dlnet;

dlIn = dlarray(single(in),'SSC');

if isempty(dlnet)

dlnet = imagePretrainedNetwork('darknet19');

end

dlOut = predict(dlnet,dlIn);

out = extractdata(dlOut);

end

Create a random image of size 256-by-256-by-3.

img = randi([0, 255], 256, 256, 3, 'uint8');

Create a code generation configuration object that generates a dynamic link library (DLL). The default compression type for the deep learning configuration object is none.

cfg = coder.config('dll');

cfg.DeepLearningConfig.LearnablesCompression

You can generate SIMD code that leverages AVX512F intrinsics by setting InstructionSetExtensions to AVX512F. You can also choose a different instruction set extension or none. For more information on optimizing the generated code, see Leverage target hardware instruction set extensions.

cfg.InstructionSetExtensions = "AVX512F";

Run the codegen command to generate library files without bfloat16 learnables compression.

codegen -config cfg darknet_detect -args {img} -d noBfloat16Compression

To enable bfloat16 learnables compression, set the LearnablesCompression property of the deep learning configuration object to bfloat16.

cfg.DeepLearningConfig.LearnablesCompression = "bfloat16";

Run the codegen command to generate library files that use bfloat16 learnables compression.

codegen -config cfg darknet_detect -args {img} -d withBfloat16Compression

Compare the sizes of the generated binary files.

compareBinariesSizeInFolders("noBfloat16Compression", "withBfloat16Compression");

Folder "noBfloat16Compression" contains 79.34 MB binaries.

Folder "withBfloat16Compression" contains 39.61 MB binaries.

Folder "withBfloat16Compression" binary size is 50.08% smaller.
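The reported reduction follows directly from the two sizes. The sketch below reproduces that arithmetic and shows one way to measure a folder yourself. The folderSizeBytes function is a hypothetical stand-in, not the compareBinariesSizeInFolders helper used above: it counts every file in the folder (not only binaries) and assumes 1 MB is 2^20 bytes, so its numbers may differ from the helper's.

```matlab
% Check the reported reduction from the sizes printed above (in MB).
sizeWithout = 79.34;
sizeWith    = 39.61;
reductionPct = 100 * (sizeWithout - sizeWith) / sizeWithout;
fprintf('Reduction: %.2f%%\n', reductionPct);   % prints "Reduction: 50.08%"

% Measure a generated folder directly, if it exists.
% Assumption: every file is counted and 1 MB = 2^20 bytes.
if isfolder("withBfloat16Compression")
    sizeMB = folderSizeBytes("withBfloat16Compression") / 2^20;
    fprintf('withBfloat16Compression: %.2f MB\n', sizeMB);
end

function totalBytes = folderSizeBytes(folder)
% Hypothetical helper: total size in bytes of all files under FOLDER.
listing = dir(fullfile(folder, '**', '*'));   % recursive listing
listing = listing(~[listing.isdir]);          % drop folder entries
totalBytes = sum([listing.bytes]);
end
```

The reduction is slightly above 50% rather than exactly 50% because the two measurements are reported to two decimal places; the learnables themselves shrink from 4 bytes to 2 bytes per element.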

See Also

Topics

- Specify Code Configuration Parameters Interactively

- Code Generation for Sequence-to-Sequence Classification with Learnables Compression

- Optimize C/C++ Code Performance for Deep Learning Applications without Deep Learning Libraries
