unenroll

Description

Examples

Download the Berlin Database of Emotional Speech [1]. The database contains 535 utterances spoken by 10 actors intended to convey one of the following emotions: anger, boredom, disgust, anxiety/fear, happiness, sadness, or neutral. The emotions are text independent.

url = "http://emodb.bilderbar.info/download/download.zip";
downloadFolder = tempdir;
datasetFolder = fullfile(downloadFolder,"Emo-DB");
if ~exist(datasetFolder,"dir")
    disp("Downloading Emo-DB (40.5 MB) ...")
    unzip(url,datasetFolder)
end

Create an audioDatastore that points to the audio files.

ads = audioDatastore(fullfile(datasetFolder,"wav"));

The file names are codes indicating the speaker ID, text spoken, emotion, and version. The website contains a key for interpreting the code and additional information about the speakers, such as gender and age. Create a table with the variables Speaker and Emotion. Decode the file names into the table.

filepaths = ads.Files;
emotionCodes = cellfun(@(x)x(end-5),filepaths,"UniformOutput",false);
emotions = replace(emotionCodes,{'W','L','E','A','F','T','N'}, ...
    {'Anger','Boredom','Disgust','Anxiety','Happiness','Sadness','Neutral'});
speakerCodes = cellfun(@(x)x(end-10:end-9),filepaths,"UniformOutput",false);
labelTable = table(categorical(speakerCodes),categorical(emotions),VariableNames=["Speaker","Emotion"]);
summary(labelTable)

Variables:

    Speaker: 535×1 categorical
        Values:
            03    49
            08    58
            09    43
            10    38
            11    55
            12    35
            13    61
            14    69
            15    56
            16    71

    Emotion: 535×1 categorical
        Values:
            Anger        127
            Anxiety       69
            Boredom       81
            Disgust       46
            Happiness     71
            Neutral       79
            Sadness       62
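The decoding step above relies on fixed character positions counted from the end of each file path. As a minimal sketch of that indexing, the following snippet decodes a single path. It assumes the file name `03a01Fa.wav` as an illustration of the naming scheme, and it looks up the emotion with logical indexing rather than `replace`, purely to keep the one-file case easy to follow.

```matlab
% Illustrative path; the positions below assume a seven-character
% base name followed by ".wav", as in the decoding code above.
filepath = char(fullfile("wav","03a01Fa.wav"));

speakerCode = filepath(end-10:end-9);  % first two characters of the name: '03'
emotionCode = filepath(end-5);         % sixth character of the name: 'F'

% Same code-to-emotion mapping as in the example above.
codes = 'WLEAFTN';
names = ["Anger","Boredom","Disgust","Anxiety","Happiness","Sadness","Neutral"];
emotion = names(codes == emotionCode); % "Happiness"
```

Because the indices are relative to `end`, the same expressions work regardless of how long the folder portion of the path is.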

labelTable is in the same order as the files in audioDatastore. Set the Labels property of the audioDatastore to labelTable.

ads.Labels = labelTable;

Read a signal from the datastore and listen to it. Display the speaker ID and emotion of the audio signal.

[audioIn,audioInfo] = read(ads);
fs = audioInfo.SampleRate;
sound(audioIn,fs)
audioInfo.Label

ans=1×2 table
    Speaker     Emotion
    _______    _________
      03       Happiness

Split the datastore into a training set and a test set. Assign two speakers to the test set and the remaining to the training set.

testSpeakerIdx = ads.Labels.Speaker=="12" | ads.Labels.Speaker=="13";
adsTrain = subset(ads,~testSpeakerIdx);
adsTest = subset(ads,testSpeakerIdx);
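Splitting by speaker keeps every utterance from a given speaker on one side of the split. The following sketch shows the same logical-mask pattern on a small, made-up categorical vector (the variable names are illustrative and no audio data is needed) and checks that no speaker appears in both partitions.

```matlab
% Toy speaker labels standing in for ads.Labels.Speaker.
speakers = categorical(["03";"12";"13";"08";"12"]);

% Logical mask selecting the held-out speakers.
testIdx = speakers=="12" | speakers=="13";

trainSpeakers = speakers(~testIdx);
testSpeakers = speakers(testIdx);

% True if any test speaker also appears in the training partition.
hasLeak = any(ismember(testSpeakers,trainSpeakers));
```

Here `hasLeak` is false, which is the property the speaker-based split is meant to guarantee.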

Read all the training and testing audio data into cell arrays. If your data fits in memory, training is usually faster when you pass cell arrays to the i-vector system rather than datastores.

trainSet = readall(adsTrain);
trainLabels = adsTrain.Labels.Emotion;
testSet = readall(adsTest);
testLabels = adsTest.Labels.Emotion;

Create an i-vector system that does not apply speech detection. When DetectSpeech is set to true (the default), only regions of detected speech are used to train the i-vector system. When DetectSpeech is set to false, the entire input audio is used to train the i-vector system. The usefulness of applying speech detection depends on the data input to the system.

emotionRecognizer = ivectorSystem(SampleRate=fs,DetectSpeech=false)

emotionRecognizer =
  ivectorSystem with properties:

         InputType: 'audio'
        SampleRate: 16000
      DetectSpeech: 0
           Verbose: 1
    EnrolledLabels: [0×2 table]

Call trainExtractor using the training set.

rng default
trainExtractor(emotionRecognizer,trainSet, ...
    UBMNumComponents=256, ...
    UBMNumIterations=5, ...
    TVSRank=128, ...
    TVSNumIterations=5);

Calculating standardization factors .....done.
Training universal background model ........done.
Training total variability space ........done.
i-vector extractor training complete.

Copy the emotion recognition system for use later in the example.

sentimentRecognizer = copy(emotionRecognizer);

Call trainClassifier using the training set.

rng default
trainClassifier(emotionRecognizer,trainSet,trainLabels, ...
    NumEigenvectors=32, ...
    PLDANumDimensions=16, ...
    PLDANumIterations=10);

Extracting i-vectors ...done.
Training projection matrix .....done.
Training PLDA model .............done.
i-vector classifier training complete.

Call calibrate using the training set. In practice, the calibration set should be different than the training set.

calibrate(emotionRecognizer,trainSet,trainLabels)

Extracting i-vectors ...done.
Calibrating CSS scorer ...done.
Calibrating PLDA scorer ...done.
Calibration complete.

Enroll the training labels into the i-vector system.

enroll(emotionRecognizer,trainSet,trainLabels)

Extracting i-vectors ...done.
Enrolling i-vectors ..........done.
Enrollment complete.

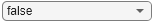

You can use detectionErrorTradeoff as a quick sanity check on the performance of a multilabel closed-set classification system. However, detectionErrorTradeoff provides information more suitable to open-set binary classification problems, for example, speaker verification tasks.

detectionErrorTradeoff(emotionRecognizer,testSet,testLabels)

Extracting i-vectors ...done.
Scoring i-vector pairs ...done.
Detection error tradeoff evaluation complete.

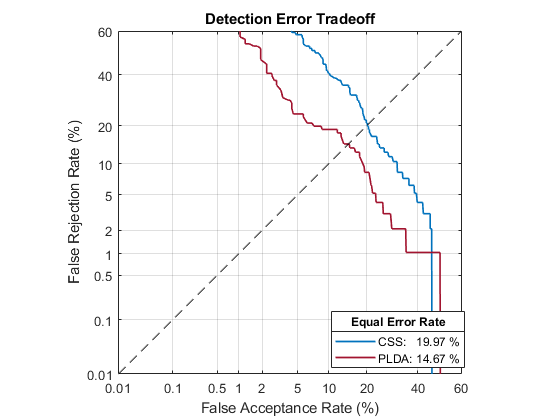

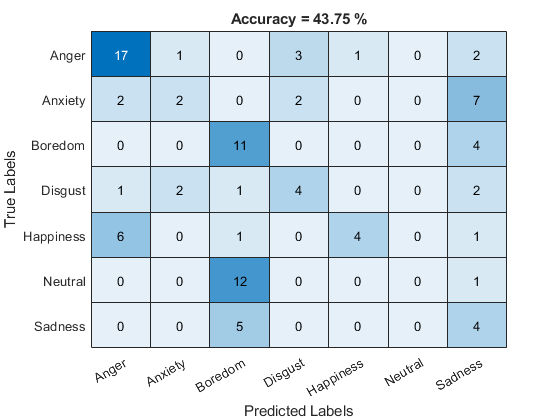

For a more detailed view of the i-vector system's performance in a multilabel closed-set application, you can use the identify function and create a confusion matrix. The confusion matrix shows which emotions are misidentified and what they are misidentified as. Use the supporting function plotConfusion to display the results.

trueLabels = testLabels;
predictedLabels = trueLabels;
scorer = "plda";
for ii = 1:numel(testSet)
    tableOut = identify(emotionRecognizer,testSet{ii},scorer);
    predictedLabels(ii) = tableOut.Label(1);
end
plotConfusion(trueLabels,predictedLabels)

Call info to inspect how emotionRecognizer was trained and evaluated.

info(emotionRecognizer)

i-vector system input
  Input feature vector length: 60
  Input data type: double

trainExtractor
  Train signals: 439
  UBMNumComponents: 256
  UBMNumIterations: 5
  TVSRank: 128
  TVSNumIterations: 5

trainClassifier
  Train signals: 439
  Train labels: Anger (103), Anxiety (56) ... and 5 more
  NumEigenvectors: 32
  PLDANumDimensions: 16
  PLDANumIterations: 10

calibrate
  Calibration signals: 439
  Calibration labels: Anger (103), Anxiety (56) ... and 5 more

detectionErrorTradeoff
  Evaluation signals: 96
  Evaluation labels: Anger (24), Anxiety (13) ... and 5 more

Next, modify the i-vector system to recognize emotions as positive, neutral, or negative. Update the labels to include only the categories negative, positive, and neutral.

trainLabelsSentiment = trainLabels;
trainLabelsSentiment(ismember(trainLabels,categorical(["Anger","Anxiety","Boredom","Sadness","Disgust"]))) = categorical("Negative");
trainLabelsSentiment(ismember(trainLabels,categorical("Happiness"))) = categorical("Positive");
trainLabelsSentiment = removecats(trainLabelsSentiment);

testLabelsSentiment = testLabels;
testLabelsSentiment(ismember(testLabels,categorical(["Anger","Anxiety","Boredom","Sadness","Disgust"]))) = categorical("Negative");
testLabelsSentiment(ismember(testLabels,categorical("Happiness"))) = categorical("Positive");
testLabelsSentiment = removecats(testLabelsSentiment);
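The relabeling above collapses several emotion categories into one sentiment category. As an alternative sketch (not the approach used in this example), the same mapping can be expressed on a small, made-up label vector with the categorical functions `mergecats` and `renamecats`, which combine and rename categories directly.

```matlab
% Toy emotion labels standing in for trainLabels.
labels = categorical(["Anger";"Happiness";"Neutral";"Sadness"]);

% Merge the negative emotions present in this toy vector into one category.
sentiment = mergecats(labels,["Anger","Sadness"],"Negative");

% Rename the remaining positive emotion.
sentiment = renamecats(sentiment,"Happiness","Positive");

sentimentNames = string(sentiment);  % ["Negative";"Positive";"Neutral";"Negative"]
```

With this form, no separate `removecats` call is needed in the toy case, because merging and renaming leave no unused categories behind.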

Train the i-vector system classifier using the updated labels. You do not need to retrain the extractor. Recalibrate the system.

rng default
trainClassifier(sentimentRecognizer,trainSet,trainLabelsSentiment, ...
    NumEigenvectors=64, ...
    PLDANumDimensions=32, ...
    PLDANumIterations=10);

Extracting i-vectors ...done.
Training projection matrix .....done.
Training PLDA model .............done.
i-vector classifier training complete.

calibrate(sentimentRecognizer,trainSet,trainLabels)

Extracting i-vectors ...done.
Calibrating CSS scorer ...done.
Calibrating PLDA scorer ...done.
Calibration complete.

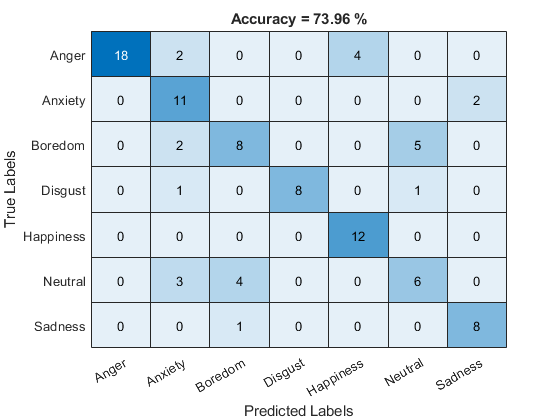

Enroll the training labels into the system and then plot the confusion matrix for the test set.

enroll(sentimentRecognizer,trainSet,trainLabelsSentiment)

Extracting i-vectors ...done.
Enrolling i-vectors ......done.
Enrollment complete.

trueLabels = testLabelsSentiment;
predictedLabels = trueLabels;
scorer = "plda";
for ii = 1:numel(testSet)
    tableOut = identify(sentimentRecognizer,testSet{ii},scorer);
    predictedLabels(ii) = tableOut.Label(1);
end
plotConfusion(trueLabels,predictedLabels)

An i-vector system does not require the labels used to train the classifier to be equal to the enrolled labels.

Unenroll the sentiment labels from the system and then enroll the original emotion categories in the system. Analyze the system's classification performance.

unenroll(sentimentRecognizer)
enroll(sentimentRecognizer,trainSet,trainLabels)

Extracting i-vectors ...done.
Enrolling i-vectors ..........done.
Enrollment complete.

trueLabels = testLabels;
predictedLabels = trueLabels;
scorer = "plda";
for ii = 1:numel(testSet)
    tableOut = identify(sentimentRecognizer,testSet{ii},scorer);
    predictedLabels(ii) = tableOut.Label(1);
end
plotConfusion(trueLabels,predictedLabels)

Supporting Functions

function plotConfusion(trueLabels,predictedLabels)
uniqueLabels = unique(trueLabels);
cm = zeros(numel(uniqueLabels),numel(uniqueLabels));
for ii = 1:numel(uniqueLabels)
    for jj = 1:numel(uniqueLabels)
        cm(ii,jj) = sum((trueLabels==uniqueLabels(ii)) & (predictedLabels==uniqueLabels(jj)));
    end
end
heatmap(uniqueLabels,uniqueLabels,cm)
colorbar off
ylabel('True Labels')
xlabel('Predicted Labels')
accuracy = mean(trueLabels==predictedLabels);
title(sprintf("Accuracy = %0.2f %%",accuracy*100))
end
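To see what plotConfusion counts before it draws the heatmap, the following sketch runs the same counting loop on a small, made-up pair of label vectors and skips the plotting. The labels are illustrative only and are not results from the i-vector system.

```matlab
% Toy true and predicted labels (illustrative values only).
trueLabels = categorical(["Anger";"Anger";"Sadness";"Neutral"]);
predictedLabels = categorical(["Anger";"Sadness";"Sadness";"Neutral"]);

% Rows index the true label and columns index the predicted label,
% in the order returned by unique (Anger, Neutral, Sadness here).
uniqueLabels = unique(trueLabels);
n = numel(uniqueLabels);
cm = zeros(n,n);
for ii = 1:n
    for jj = 1:n
        cm(ii,jj) = sum((trueLabels==uniqueLabels(ii)) & (predictedLabels==uniqueLabels(jj)));
    end
end

% Fraction of signals whose predicted label matches the true label.
accuracy = mean(trueLabels==predictedLabels);
```

In this toy case, one of the two Anger signals is predicted as Sadness, so the Anger row of `cm` is `[1 0 1]` and the accuracy is 0.75.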

References

[1] Burkhardt, F., A. Paeschke, M. Rolfes, W.F. Sendlmeier, and B. Weiss, "A Database of German Emotional Speech." In Proceedings Interspeech 2005. Lisbon, Portugal: International Speech Communication Association, 2005.

Input Arguments

i-vector system, specified as an object of type ivectorSystem.

Classification labels used by an i-vector system, specified as one of these:

- A categorical array

- A cell array of character vectors

- A string array

Data Types: categorical | cell | string

Version History

Introduced in R2021a

See Also

trainExtractor | trainClassifier | calibrate | enroll | detectionErrorTradeoff | verify | identify | ivector | info | addInfoHeader | release | ivectorSystem | speakerRecognition
