Frame-Based Video Pipeline

This example shows a video processing pipeline implemented in MATLAB® using frame-based functions. This design supports HDL code generation.

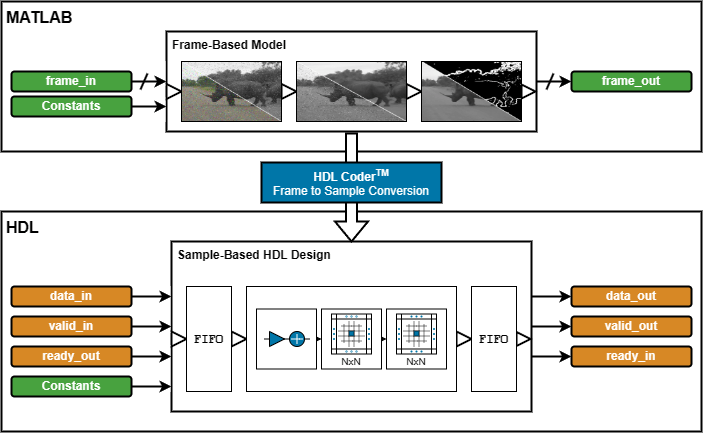

The HDL Coder™ frame-to-sample optimization allows you to combine frame-based video processing functions, element-wise operations, and custom neighborhood functions using hdl.npufun and hdl.iterfun to rapidly prototype and develop algorithms for HDL code generation using MATLAB.

This example refactors a MATLAB video processing algorithm to use frame-based video processing functions that support HDL code generation. A MATLAB testbench verifies the refactored algorithm against the original. Then, the example expands the design to add support for subsampled YCbCr I/O. Finally, the example shows how to generate HDL code and an HDL testbench for the YCbCr-compatible video processing pipeline.

MATLAB Reference

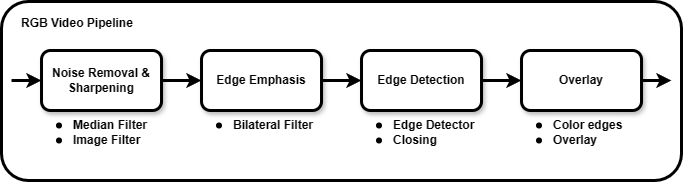

The example adapts an existing MATLAB Image Processing Toolbox™ video processing algorithm for hardware deployment. The algorithm removes noise and sharpens a video before detecting and overlaying edges:

The Noise Removal and Sharpening stage uses a median filter to remove noise while not further degrading sharpness. The median filtered frame is sharpened using a 2D image filter with an inverted Laplacian kernel.

The Edge Emphasis stage uses a bilateral filter to smooth the sharpened frame while retaining edges.

The Edge Detection stage uses a Sobel edge detector and morphological closing to detect and improve the connectivity of edges.

The Overlay stage converts the binary edges to the desired color and overlays them onto the median-filtered RGB frames.

This code excerpt shows a uint8 Image Processing Toolbox implementation of the algorithm:

    function [..] = FBVP_iptPipeline(..)
        % --- Setup
        % Noise removal & sharpening
        hSharp = [ ...                                      % Sharpening filter coefficients
            0 0 0; ...
            0 1 0; ...
            0 0 0] - fspecial('laplacian', 0.2);
        % Edge detection
        closingSE = strel('disk', 1);                       % Morphological closing structuring element
        edgeColor = cat(3, 255, 0, 255);                    % 1-by-1-by-3 RGB triplet

        % --- Noise Removal & Sharpening
        median = zeros(size(frameIn), 'like', frameIn);
        for ii = 1 : 3
            median(:,:,ii) = medfilt2(frameIn(:,:,ii));     % 3-by-3 median filter
        end
        gray = rgb2gray(median);                            % RGB to grayscale
        sharp = imfilter(gray, hSharp);                     % Sharpening filter

        % --- Emphasize Edges
        bilateral = imbilatfilt(sharp, 36864, 0.9, NeighborhoodSize=5); % Bilateral filter

        % --- Edge Detection
        edges = edge(bilateral, 'Sobel', 12/255);           % Sobel edge detector
        closed = imclose(edges, closingSE);                 % Morphological closing

        % --- Edge Overlay
        rgbEdges = uint8(closed .* edgeColor);              % Color edges
        frameOut = median + rgbEdges;                       % Overlay

Frame-Based HDL Design

Convert the coefficients of the floating-point sharpening filter, hSharp, and the RGB edge color, edgeColor, to fixed-point data types. You can represent hSharp using fixdt(1,16,12) and edgeColor using uint8. Refer to Manual Fixed-Point Conversion Best Practices (Fixed-Point Designer™) for general guidance.

In this algorithm the Image Filter, hdlimfilter, and Bilateral Filter, hdlimbilatfilt, behave differently from their respective Image Processing Toolbox functions:

The Image Filter, hdlimfilter, requires that the filter coefficients be in the range [-1, 1] and that the sum of the absolute coefficients is less than or equal to 1. Rescale the coefficients, hSharp, by dividing them by the maximum absolute value of the coefficients. Apply a gain to the output of the Image Filter to counteract the gain introduced by rescaling the coefficients.

The Bilateral Filter, hdlimbilatfilt, uses a Look-Up Table (LUT) based implementation for hardware efficiency and so does not numerically match imbilatfilt. hdlimbilatfilt also requires an intensity standard deviation rather than the degree of smoothing input used with imbilatfilt. Convert the imbilatfilt degreeOfSmoothing input to the hdlimbilatfilt intensityStdDev by dividing the square root of degreeOfSmoothing by 2 raised to the input word length, which is 2^8 for the uint8 input in this example.
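The two parameter conversions above can be checked numerically with a short base MATLAB sketch. It assumes the Laplacian kernel can be written out by hand from the documented formula for fspecial('laplacian', 0.2), and it emulates 12 fractional bits by rounding rather than using a Fixed-Point Designer fi object. The variable names scale, hSharpScaled, hSharpQ, and wordLength are illustrative and do not appear in the example files.

```matlab
% Hand-written 3-by-3 Laplacian, assumed equivalent to fspecial('laplacian', 0.2)
alpha = 0.2;
lap = (4/(alpha + 1)) * [ ...
    alpha/4,     (1-alpha)/4, alpha/4; ...
    (1-alpha)/4, -1,          (1-alpha)/4; ...
    alpha/4,     (1-alpha)/4, alpha/4];
hSharp = [0 0 0; 0 1 0; 0 0 0] - lap;          % Unscaled sharpening kernel

% Rescale so that the largest coefficient magnitude is exactly 1
scale        = max(abs(hSharp), [], "all");    % 13/3, about 4.33, for this kernel
hSharpScaled = hSharp ./ scale;

% Emulate 12 fractional bits (assumption: round-to-nearest, no saturation needed)
hSharpQ = round(hSharpScaled * 2^12) / 2^12;

% Convert the imbilatfilt degree of smoothing to a normalized standard deviation
degreeOfSmoothing = 36864;                     % Value used in the reference code
wordLength        = 8;                         % uint8 input
intensityStdDev   = sqrt(degreeOfSmoothing) / 2^wordLength;   % 192/256 = 0.75
```

For this kernel the scale factor evaluates to roughly 4.33; for comparison, the refactored code applies an output gain of 4 after the image filter.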

The frame-based functions in this example do not support 3D I/O. Configure the median filter and Overlay stage to handle individual color channels with separate variables. The top-level design function FBVP_rgbDUT.m also requires 2D inputs and outputs.

This code excerpt shows the refactored algorithm:

    function [..] = FBVP_rgbDUT(..)
        % --- Setup
        % Noise removal & sharpening
        hSharp = ([ ...                                     % Sharpening filter coefficients
            0 0 0; ...
            0 1 0; ...
            0 0 0] - fspecial('laplacian', 0.2)) ./ max(abs(fspecial('laplacian', 0.2)) + 1, [], "all");
        % Edge detection
        closingSE = strel("disk", 1);                       % Morphological closing structuring element
        edgeColor = uint8([255, 0, 255]);                   % 1-by-3 RGB triplet

        % --- Noise Removal & Sharpening
        medianR = hdlmedfilt2(RIn);                         % 3-by-3 median filter
        medianG = hdlmedfilt2(GIn);
        medianB = hdlmedfilt2(BIn);
        gray = hdlrgb2gray(medianR, medianG, medianB);      % RGB to grayscale
        sharp = hdlimfilter(gray, hSharp, 'Replicate', 0);  % Sharpening filter
        sharp = sharp * 4;                                  % Account for hSharp scaling

        % --- Emphasize Edges
        bilateral = hdlimbilatfilt(sharp, sqrt(36864)/2^8, 0.9, 5, 'Replicate', 0); % Bilateral filter

        % --- Edge Detection
        edges = hdledge(bilateral, 'sobel', 12);            % Sobel edge detector
        closed = hdlimclose(edges, closingSE.Neighborhood); % Morphological closing

        % --- Edge Overlay
        closedUint = uint8(closed);                         % Color edges
        edgesR = closedUint * edgeColor(1);
        edgesG = closedUint * edgeColor(2);
        edgesB = closedUint * edgeColor(3);
        ROut = medianR + edgesR;                            % Overlay
        GOut = medianG + edgesG;
        BOut = medianB + edgesB;

Simulation

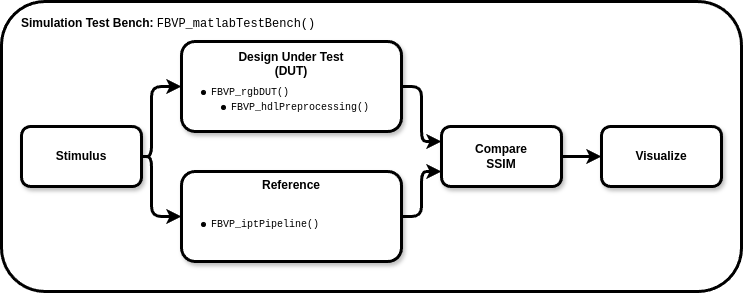

To verify the refactored code and fixed-point conversion, create a testbench that compares the reference algorithm with the hardware design. The functions in this example can differ from their Image Processing Toolbox counterparts because they are optimized for hardware. A best practice when developing or adapting your own algorithms for hardware is to create a testbench and iteratively refactor your algorithm.

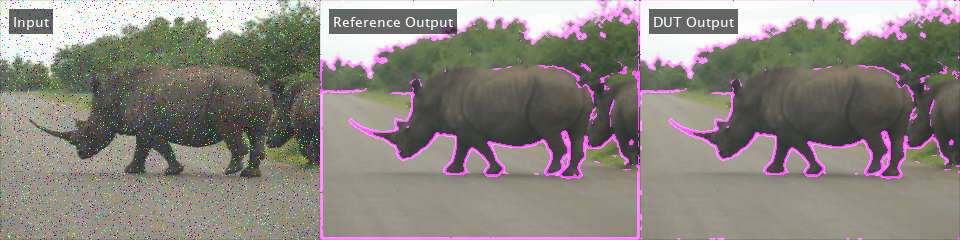

In the testbench, FBVP_matlabTestBench.m, the videoIn object reads frames from a video source, and the imfilter and imnoise functions apply a blur effect and introduce salt & pepper noise, respectively. The testbench calls the Design Under Test (DUT), FBVP_rgbDUT.m, to process each frame. The reference design, FBVP_iptPipeline.m, performs the same operations as the DUT using Image Processing Toolbox functions. The testbench compares the DUT and reference frames by calculating the Structural SIMilarity (SSIM) index and verifying that it exceeds the minimum threshold. The viewer object displays the corrupted input frame, reference output, and DUT output. When the testbench completes, it prints the processed frame rate to the Command Window.

    function [..] = FBVP_matlabTestBench(..)
        tic;
        for ii = 1 : numFrames
            % --- Stimulus
            frameIn(:) = videoIn.readFrame;                                 % Source frame
            frameIn(:) = imfilter(frameIn, fspecial("gaussian", 3, 0.5));   % Gaussian blur
            frameIn(:) = imnoise(frameIn, "salt & pepper");                 % Salt & pepper noise

            % --- Design Under Test (DUT)
            % Exercise DUT
            [R, G, B] = FBVP_rgbDUT(frameIn(:,:,1), frameIn(:,:,2), frameIn(:,:,3));
            frameOut = cat(3, R, G, B);

            % --- Reference
            frameReference = FBVP_iptPipeline(frameIn);

            % --- Compare
            frameSSIM(:) = ssim(frameReference, frameOut);
            if frameSSIM < ssimThreshold
                fprintf("frame #%u: reference and design output Structural SIMilarity (SSIM) index %.3g%% is less than the %.3g%% threshold.\n", ...
                    ii, 100 * frameSSIM, 100 * ssimThreshold);
                return;
            end

            % --- Visualize
            frameIn = insertText(frameIn, [10 10], {'Input'}, FontSize=14, FontColor=[1 1 1], TextBoxColor=[0 0 0]);
            frameReference = insertText(frameReference, [10 10], {'Reference Output'}, FontSize=14, FontColor=[1 1 1], TextBoxColor=[0 0 0]);
            frameOut = insertText(frameOut, [10 10], {'DUT Output'}, FontSize=14, FontColor=[1 1 1], TextBoxColor=[0 0 0]);
            viewer(cat(2, frameIn, frameReference, frameOut));
        end
        t = toc;
        fprintf('\n%u frames have been processed in %.2f seconds.\n', numFrames, t);
        fprintf('Average frame rate is %.2f frames/second.\n', numFrames/t);

To enable MEX generation of the testbench, functions that do not support C code generation, such as fprintf, are declared as extrinsic. Extrinsic functions are excluded from MEX generation and are executed in interpreted mode.
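As a minimal sketch of the extrinsic declaration described above, the following helper declares fprintf extrinsic with coder.extrinsic before calling it. The helper name reportFrameRate and its arguments are hypothetical and are not part of the example files; only the coder.extrinsic call reflects the mechanism the testbench relies on.

```matlab
function fps = reportFrameRate(numFrames, elapsedSeconds) %#codegen
% Hypothetical helper: prints throughput from code that is compiled to MEX.
coder.extrinsic('fprintf');   % Dispatch fprintf to MATLAB instead of compiling it
fps = numFrames / elapsedSeconds;
fprintf('Average frame rate is %.2f frames/second.\n', fps);
end
```

When run in MATLAB without code generation, the declaration is ignored and the function behaves like ordinary MATLAB code.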

To simulate the design, generate MEX of the MATLAB testbench, FBVP_matlabTestBench.m, and execute it:

codegen("FBVP_matlabTestBench.m");

FBVP_matlabTestBench_mex;

100 frames have been processed in 15.55 seconds.
Average frame rate is 6.43 frames/second.

Visually inspect the DUT and reference output and verify that the testbench completes successfully. The testbench has passed if all frames exceed the SSIM threshold and the processed frame rate is printed to the Command Window.

Debugging simulations with large frame sizes is impractical in interpreted mode because of the long simulation time. Debugging a MEX simulation, however, is challenging because the generated code offers no debug access. To avoid both problems, a best practice is to develop and verify the algorithm and testbench using a thumbnail frame size. In most cases, the HDL-targeted design can be implemented with no dependence on frame size. In addition, when refactoring from an existing reference design, make small iterative changes so that errors are identified quickly and debugging stays targeted. Once you are confident that the design and testbench are working correctly, increase the frame size in the testbench and use MATLAB Coder to accelerate the simulation. Increasing the frame size requires only minor changes to the testbench.
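One way to work at thumbnail size is to derive the testbench frame size from a single decimation factor, as in the sketch below. This is only an illustration under stated assumptions: the placeholder frame, the decimation variable, and the use of plain index decimation (a crude stand-in for a proper resize such as imresize) are not taken from the example testbench.

```matlab
fullFrame  = zeros(1080, 1920, 3, "uint8");   % Placeholder 1080p RGB frame
decimation = 8;                               % Hypothetical thumbnail factor

% Keep every 8th row and column; no antialiasing is applied
thumbFrame = fullFrame(1:decimation:end, 1:decimation:end, :);
frameSize  = size(thumbFrame, [1 2]);         % 135-by-240 thumbnail
```

Setting decimation to 1 restores the full frame size, so the rest of the testbench does not need to change.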

Chrominance Preprocessing & Postprocessing

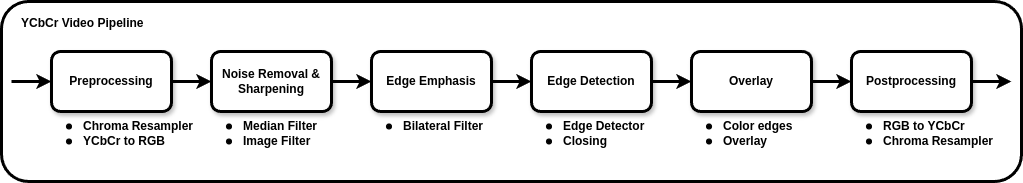

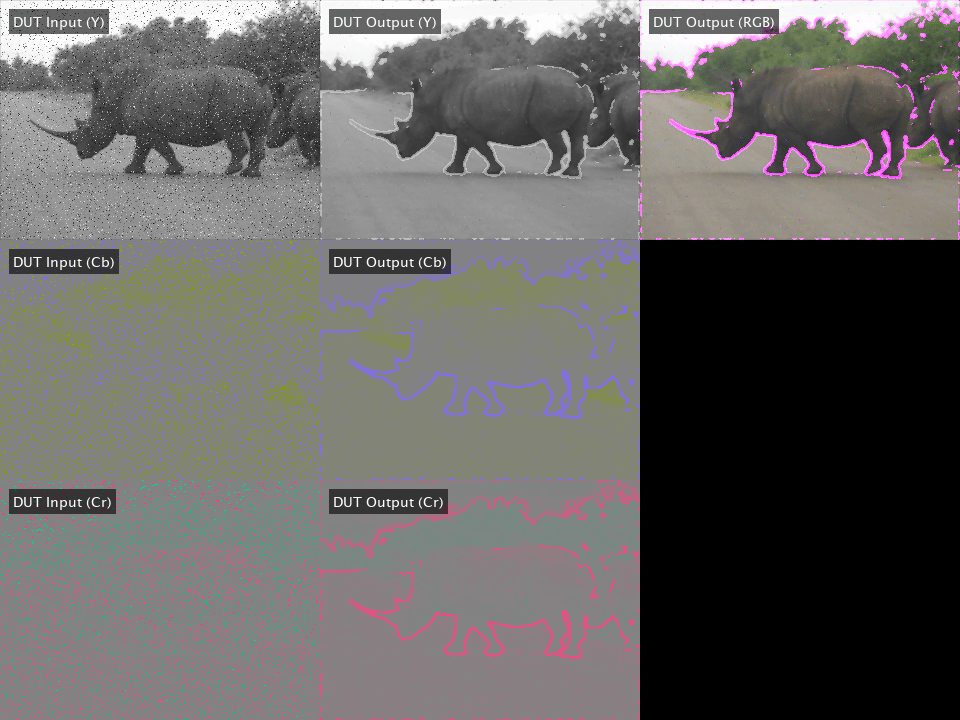

To deploy to hardware that uses a subsampled chrominance-based color space, such as 4:2:2 YCbCr, you must perform color space conversion and chroma resampling at the input and output of the RGB-based algorithm.

Enhance the MATLAB design function to introduce support for YCbCr 4:2:2 I/O. The design function FBVP_ycbcrDUT.m includes the preprocessing and postprocessing stages. The Preprocessing stage uses hdlresmplchroma to resample the input YCbCr 4:2:2 to YCbCr 4:4:4, which allows color space conversion to RGB using hdlycbcr2rgb. The Postprocessing stage converts the output of the algorithm back to YCbCr using hdlrgb2ycbcr and then resamples the chroma components from 4:4:4 to 4:2:2 to produce the final YCbCr 4:2:2 output.

This code excerpt shows the Preprocessing and Postprocessing stages:

    function [..] = FBVP_ycbcrDUT(..)
        % --- Setup
        % Noise removal & sharpening...
        % Edge detection...

        % --- Preprocessing (YCbCr 4:2:2 I/O Only)
        [Y, Cb, Cr] = hdlresmplchroma(Y, Cb, Cr);       % 4:2:2 to 4:4:4
        [R, G, B ] = hdlycbcr2rgb(Y, Cb, Cr);           % YCbCr to RGB

        % --- Noise Removal & Sharpening...
        % --- Emphasize Edges...
        % --- Edge Detection...
        % --- Edge Overlay...

        % --- Postprocessing (YCbCr 4:2:2 I/O Only)
        [YOut, CbOut, CrOut] = hdlrgb2ycbcr(R, G, B);   % RGB to YCbCr
        [YOut, CbOut, CrOut] = hdlResampleChroma444To422FixedPoint(YOut, CbOut, CrOut); % 4:4:4 to 4:2:2

The reference design, FBVP_iptPipeline.m, can be enhanced to accept YCbCr 4:2:2 inputs for validation of the Preprocessing and Postprocessing stages. This can be achieved using the rgb2ycbcr and imadjust Image Processing Toolbox functions with a custom chroma resampling function or the vision.ChromaResampler Computer Vision Toolbox™ System object. This has not been modeled for this example.
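For illustration only, a custom chroma resampling function for such a reference model might start from the sketch below. It assumes the simplest possible scheme: dropping every other chroma column to go from 4:4:4 to 4:2:2 and replicating columns to go back, with no antialiasing or interpolation filter and with half-width chroma planes. The names down422 and up444 are hypothetical, and this is not how hdlresmplchroma or vision.ChromaResampler are implemented.

```matlab
% 4:4:4 to 4:2:2: keep every other chroma column (no antialiasing filter)
down422 = @(C) C(:, 1:2:end);
% 4:2:2 to 4:4:4: replicate each chroma column (no interpolation)
up444 = @(C) repelem(C, 1, 2);

Cb444  = uint8(repmat([10 10 20 20 30 30], 4, 1));  % Toy 4-by-6 chroma plane
Cb422  = down422(Cb444);                            % 4-by-3 subsampled plane
CbBack = up444(Cb422);                              % 4-by-6 plane again
```

The round trip is exact here only because the toy plane is constant across each column pair; real video would lose chroma detail, which is why filtered resamplers are normally used.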

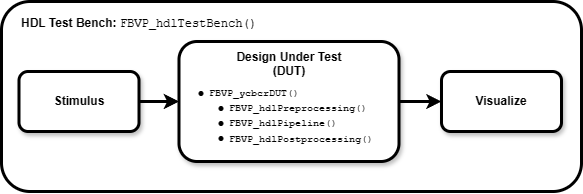

To verify the preprocessing, postprocessing, and generated HDL, an HDL testbench is required. The HDL testbench, FBVP_hdlTestBench.m, is derived from the MATLAB testbench. The HDL testbench converts the corrupted input video frame from the RGB to the YCbCr color space and subsamples the chroma components to model the expected AXI-Stream input. The testbench calls the HDL DUT, FBVP_ycbcrDUT.m, to process each YCbCr 4:2:2 frame. The viewer object displays the YCbCr input, DUT output, and an RGB version of the DUT output.

To simulate the HDL design, generate MEX of the HDL testbench, FBVP_hdlTestBench.m, and execute it:

codegen("FBVP_hdlTestBench.m");

FBVP_hdlTestBench_mex;

HDL Code Generation & Verification

Once your design is working in simulation, use HDL Coder to generate HDL for the FBVP_ycbcrDUT function.

Generating HDL using the HDL Coder frame-to-sample optimization generates an entity with I/O suited for AXI-Stream interfaces and provides an input and output FIFO for memory contention and backpressure. The size of the FIFOs can be configured in the HDL Coder settings.

Use the hdlsetuptoolpath (HDL Coder) function for configuring synthesis tools and the vsim or nclaunch (HDL Verifier™) functions to configure HDL simulators.
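As a hedged example of the synthesis tool setup, the call below points HDL Coder at an AMD Vivado installation, which matches the ZCU102 target used later in this example. The installation path is a placeholder assumption; substitute the location and version on your own machine.

```matlab
% Hypothetical install location; replace <version> with your installed release
hdlsetuptoolpath('ToolName', 'Xilinx Vivado', ...
    'ToolPath', 'C:\Xilinx\Vivado\<version>\bin\vivado.bat');
```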

Programmatic Code Generation & Simulation

Enter the following commands to create an HDL configuration object, hdlcfg, that generates HDL code and an HDL testbench, and simulates the generated code using ModelSim®. To enable the frame-to-sample optimization, set the AggressiveDataflowConversion and FrameToSampleConversion coder.HdlConfig properties to true. For details of the properties of the HDL configuration object, see coder.HdlConfig (HDL Coder).

    % Default MATLAB Coder HDL config
    hdlcfg = coder.config('hdl');

    % Sources
    hdlcfg.DesignFunctionName = "FBVP_ycbcrDUT.m";
    hdlcfg.TestBenchName = "FBVP_hdlTestBench.m";

    % Enable testbench generation and simulation
    hdlcfg.GenerateHDLTestBench = true;
    hdlcfg.SimulateGeneratedCode = true;

    % Enable frame-to-sample conversion
    hdlcfg.AggressiveDataflowConversion = true;
    hdlcfg.FrameToSampleConversion = true;

    % DUT argument prototype
    frameIn = VideoReader("rhinos.avi").readFrame;

    % Generate HDL & HDL testbench, then simulate
    codegen -config hdlcfg -args {frameIn(:,:,1), frameIn(:,:,2), frameIn(:,:,3)}

Graphical Interface Code Generation & Simulation

Enter the following command to create a new HDL Coder™ project:

coder -hdlcoder -new FrameBasedVideoPipelineProject

Add the file FBVP_ycbcrDUT.m to the project as the MATLAB Function and FBVP_hdlTestBench.m as the MATLAB testbench.

Refer to Get Started with MATLAB to HDL Workflow (HDL Coder) for a tutorial on creating and populating MATLAB HDL Coder projects.

Launch the Workflow Advisor. In the Workflow Advisor, enable the Frame to Sample optimization:

HDL Code Generation > Optimizations > General > Aggressive Dataflow Conversion

HDL Code Generation > Frame to Sample Conversion > Enable Frame to Sample Conversion (Requires Aggressive Dataflow Conversion)

Right-click the Code Generation step. Choose the option Run to selected task to run all the steps from the beginning through HDL code generation.

Examine the generated HDL code by clicking the links in the log window.

Enable HDL testbench generation and simulation by selecting the Generate test bench and Simulate generated test bench options in the Verification - Verify with HDL Test Bench step. Right-click the Verification - Verify with HDL Test Bench step. Choose the option Run to selected task to run all the required steps from the beginning through simulation of the generated HDL code.

Resources

The design was synthesized and put through place and route on an AMD Zynq® UltraScale+™ MPSoC ZCU102.

| Resource | Usage | Usage (%) |
|---|---|---|
| LUT | | |
| LUTRAM | | |
| FF | | |
| BRAM | | |
| DSP | | |
| Fmax | | |

When configured for a 1080p input, the design achieves the required timing constraints for operating at 60 frames per second. The generated HDL shows discrete entities for any frame-based function that uses hdl.npufun or hdl.iterfun. As such, the frame-based Vision HDL Toolbox functions hdlrgb2gray, hdlrgb2ycbcr, and hdlycbcr2rgb are found in the DUT entity.
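To put the 1080p, 60 frames per second requirement in terms of clock frequency, the arithmetic below uses the standard CEA-861 1080p60 raster of 2200 by 1125 total pixels. It assumes the generated design consumes one pixel per clock cycle and that the stream follows standard blanking; neither assumption is stated in this example, so treat the result as a rough guide only.

```matlab
% Standard 1080p60 raster (CEA-861): totals include horizontal and vertical blanking
totalPixelsPerLine = 2200;   % 1920 active + 280 blanking
totalLinesPerFrame = 1125;   % 1080 active + 45 blanking
framesPerSecond    = 60;

% Clock needed at one pixel per cycle, with and without blanking intervals
requiredClockHz = totalPixelsPerLine * totalLinesPerFrame * framesPerSecond;  % 148.5 MHz
activePixelRate = 1920 * 1080 * framesPerSecond;                              % 124.416 MHz
```

Under these assumptions, an Fmax at or above 148.5 MHz would be consistent with the stated 60 frames per second operation.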

See Also

The design functions implemented in this example can be used in Simulink through the MATLAB Function block. See the Frame-Based Video Pipeline in Simulink example for more details.