Automate Ground Truth Labeling for Object Detection

This example shows how to create an automation algorithm that labels data for object detection by using a pretrained object detector.

Overview

The Image Labeler, Video Labeler, and Ground Truth Labeler (Automated Driving Toolbox) apps provide an easy way to interactively label data for training or validating image classifiers, object detectors, and semantic and instance segmentation networks. These apps include several built-in automation algorithms and provide an interface for defining custom automation algorithms to accelerate the labeling process.

In this example, you create a custom automation algorithm that labels objects by using a pretrained YOLO v4 object detector in the Image Labeler app. You can replicate the same procedure in the Video Labeler and Ground Truth Labeler apps. For more information, see Create Automation Algorithm for Labeling.

Create Object Detection Algorithm

This example uses a pretrained YOLO v4 object detector. For more information about YOLO v4, see Getting Started with YOLO v4.

The pretrained object detector uses CSPDarkNet-53 as the backbone network. It is trained on the MS-COCO dataset and can identify 80 object classes. For simplicity, the automation algorithm groups these 80 classes into 12 super-classes.
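The following base-MATLAB sketch illustrates the idea of folding COCO classes into super-classes by name. It assumes a hypothetical, partial lookup table (cocoToSuper) that covers only 8 of the 80 classes, and made-up detector output in detectedNames. The automation class in this example maps numeric class IDs instead of names, as described later.

```matlab
% Hypothetical partial lookup table from COCO class names to super-classes.
% Only 8 of the 80 classes are listed here for brevity.
cocoNames  = {'person','car','truck','bus','dog','cat','laptop','pizza'};
superNames = {'person','vehicle','vehicle','vehicle','animal','animal','electronic','food'};
cocoToSuper = containers.Map(cocoNames, superNames);

% Class names as they might come back from a detector (made-up example).
detectedNames = {'car','dog','truck','person'};

% values(map, keys) returns the super-class for each detected name, in order.
detectedSuper = values(cocoToSuper, detectedNames);
disp(detectedSuper)
```

Several fine-grained names collapse onto the same super-class label, which is what reduces 80 classes to 12.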

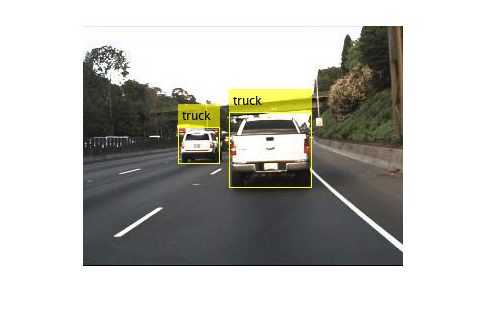

Try out the YOLO v4 object detector on a sample image.

% Create YOLO v4 object detector.
detector = yolov4ObjectDetector("csp-darknet53-coco");

% Detect objects in an unknown image by using the detector.
img = imread("highway.png");
[bboxes,scores,labels] = detect(detector,img);

% Display the detection results.
detectedImg = insertObjectAnnotation(img,"Rectangle",bboxes,labels);
figure
imshow(detectedImg)

% Free memory by deleting the detector.
clear detector

Integrate Object Detection Algorithm into Image Labeler

Incorporate the YOLO v4 detector into the Image Labeler app by creating an automation class in MATLAB® that inherits from the abstract base class vision.labeler.AutomationAlgorithm. This base class defines the API that the app uses to configure and run the algorithm. The Image Labeler app provides a convenient way to obtain an initial automation class template. The YOLOv4AutomationAlgorithm class is based on this template and provides a ready-to-use automation class for object detection.

This section discusses some of the key properties and methods of the automation class.

The properties section of the automation class specifies the custom properties needed to run the algorithm. The Model property holds the pretrained detector. The Labels and LabelIDs properties store the super-class names and the COCO class IDs that belong to each super-class. The Map property is a dictionary that maps each COCO class ID to the name of its super-class. The Threshold property sets the minimum detection score that a detection must have to be used as a label.
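To illustrate the effect of the Threshold property, the sketch below filters a made-up vector of detection scores with the default value of 0.3. In the automation class itself, the threshold is passed to detect, which performs this filtering internally. Treating scores exactly equal to the threshold as kept (>=) is an assumption here, so the sample scores avoid that edge case.

```matlab
% Made-up detection scores for five boxes.
scores = [0.92 0.15 0.48 0.29 0.71];
threshold = 0.3;   % same value as the Threshold property default

% Keep only the detections whose score reaches the threshold (assumed >=).
keep = scores >= threshold;
keptIdx = find(keep);
fprintf('%d of %d detections kept\n', nnz(keep), numel(scores));
```

Raising the threshold trades missed objects for fewer false labels that you would otherwise need to delete by hand in the app.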

properties
    Model

    % Threshold for the object detection score
    Threshold = 0.3

    % Label class names (super-classes). These names must match the
    % label definitions in the groundTruth object loaded into the app.
    Labels = {'person','vehicle','outdoor','animal','accessory','sports', ...
        'kitchen','food','furniture','electronic','appliance','indoor'};

    % IDs corresponding to the labels. Similar COCO classes are grouped
    % together into the super-classes as follows:
    % ["person"] = person
    % ["bicycle", "car", "motorbike", "aeroplane", "bus", "train", "truck", "boat"] = vehicle
    % ["traffic light", "fire hydrant", "stop sign", "parking meter", "bench"] = outdoor
    % ["bird", "cat", "dog", "horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe"] = animal
    % ["backpack", "umbrella", "handbag", "tie", "suitcase"] = accessory
    % ["frisbee", "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard", "tennis racket"] = sports
    % ["bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl"] = kitchen
    % ["banana", "apple", "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake"] = food
    % ["chair", "sofa", "pottedplant", "bed", "diningtable", "toilet"] = furniture
    % ["tvmonitor", "laptop", "mouse", "remote", "keyboard", "cell phone"] = electronic
    % ["microwave", "oven", "toaster", "sink", "refrigerator"] = appliance
    % ["book", "clock", "vase", "scissors", "teddy bear", "hair drier", "toothbrush"] = indoor
    LabelIDs = {1, 2:9, 10:14, 15:24, 25:29, 30:39, 40:46, 47:56, 57:62, 63:68, 69:73, 74:80};

    % Dictionary containing LabelID to Label mapping.
    Map = dictionary;
end
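Because the mapping relies on LabelIDs covering every COCO class ID exactly once, a quick check like the following can catch mistakes if you edit the groupings. This is a hypothetical sanity check, not part of YOLOv4AutomationAlgorithm; it repeats the LabelIDs and Labels values used in this example and uses only base MATLAB.

```matlab
% LabelIDs and Labels values used in this example.
LabelIDs = {1, 2:9, 10:14, 15:24, 25:29, 30:39, 40:46, 47:56, 57:62, 63:68, 69:73, 74:80};
Labels = {'person','vehicle','outdoor','animal','accessory','sports', ...
    'kitchen','food','furniture','electronic','appliance','indoor'};

% Every super-class needs exactly one ID group.
assert(numel(LabelIDs) == numel(Labels));

% Concatenate all ID groups; each COCO ID 1..80 should appear exactly once.
allIDs = [LabelIDs{:}];
assert(isequal(sort(allIDs), 1:80), 'LabelIDs must cover 1:80 exactly once');

% Print how many COCO classes are folded into each super-class.
groupSizes = cellfun(@numel, LabelIDs);
for k = 1:numel(Labels)
    fprintf('%-10s %2d\n', Labels{k}, groupSizes(k));
end
```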

In the YOLOv4AutomationAlgorithm class, the initialize function has been customized to load the pretrained object detection network and populate the dictionary.

function initialize(algObj, ~)
    % Load the detector.
    algObj.Model = yolov4ObjectDetector("csp-darknet53-coco");

    % Populate the dictionary for mapping label IDs with label names.
    for i = 1:80
        idx = find(cellfun(@(x) ismember(i,x), algObj.LabelIDs));
        algObj.Map(i) = algObj.Labels(idx);
    end
end
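The cellfun search in initialize scans all 12 groups for each of the 80 IDs. As an alternative, which is not what YOLOv4AutomationAlgorithm does, a plain numeric lookup vector (here the hypothetical superIdx) can be filled in a single pass over the groups. This also avoids the dictionary type, which requires R2022b or later. The class IDs in the lookup follow the ordering listed in the properties comments.

```matlab
LabelIDs = {1, 2:9, 10:14, 15:24, 25:29, 30:39, 40:46, 47:56, 57:62, 63:68, 69:73, 74:80};
Labels = {'person','vehicle','outdoor','animal','accessory','sports', ...
    'kitchen','food','furniture','electronic','appliance','indoor'};

% superIdx(classID) holds the index of the super-class that owns classID.
superIdx = zeros(1, 80);
for k = 1:numel(LabelIDs)
    superIdx(LabelIDs{k}) = k;
end

% Look up super-class names for a few COCO class IDs
% (3 = car, 16 = cat, 64 = laptop in the ordering listed above).
classIDs = [3 16 64];
names = Labels(superIdx(classIDs));
disp(names)
```

Indexing Labels with a vector returns a cell array of names, so a whole batch of detections can be mapped in one expression.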

Next, the run function defines the core object detection algorithm of this automation class. The app calls run for each image frame and expects it to return a set of labels. The run function in YOLOv4AutomationAlgorithm detects bounding boxes and passes them, together with their super-class names, to the Image Labeler.

function autoLabels = run(algObj, I)
    % Perform detection. The scores output is not needed here.
    [bboxes, ~, labels] = detect(algObj.Model, I, Threshold=algObj.Threshold);

    % Preallocate one output struct per detected box.
    numBoxes = size(bboxes, 1);
    autoLabels = struct('Name', cell(1, numBoxes), ...
        'Type', cell(1, numBoxes), 'Position', zeros([1 4]));

    for i = 1:numBoxes
        % Add the predicted label to outputs by mapping the COCO class ID
        % to its super-class name.
        currentLabel = algObj.Map(double(labels(i)));
        autoLabels(i).Name = currentLabel{:};
        autoLabels(i).Type = labelType.Rectangle;
        autoLabels(i).Position = bboxes(i,:);
    end
end
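The output of run is a struct array with one element per detected box. The sketch below reproduces the preallocation pattern with made-up boxes and names. It uses the character vector 'Rectangle' as a stand-in for labelType.Rectangle so that it runs without Computer Vision Toolbox. It also shows that when there are no detections, the same struct call returns an empty 1-by-0 array, so run yields no labels for that image.

```matlab
% Made-up detections: two boxes in [x y width height] form and their names.
bboxes = [10 20 50 40; 100 80 30 60];
names  = {'vehicle','person'};

% struct with cell-array values creates a 1-by-N struct array; the
% non-cell Position value is copied into every element.
n = size(bboxes, 1);
autoLabels = struct('Name', cell(1, n), 'Type', cell(1, n), 'Position', zeros(1, 4));
for i = 1:n
    autoLabels(i).Name = names{i};
    autoLabels(i).Type = 'Rectangle';   % stand-in for labelType.Rectangle
    autoLabels(i).Position = bboxes(i, :);
end

% With no detections, the same call returns a 1-by-0 (empty) struct array.
emptyLabels = struct('Name', cell(1, 0), 'Type', cell(1, 0), 'Position', zeros(1, 4));
fprintf('%d labels, empty case has %d\n', numel(autoLabels), numel(emptyLabels));
```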

This algorithm does not require any cleanup, so the terminate function is empty.

Use Object Detection Automation Class in the App

The properties and methods described in the previous section have been implemented in the YOLOv4AutomationAlgorithm class file. To use this class in the app:

Create the folder structure +vision/+labeler under the current folder, and copy the automation class into it.

mkdir('+vision/+labeler');
copyfile('YOLOv4AutomationAlgorithm.m','+vision/+labeler');
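Before opening the app, you can confirm that the class file is where the app looks for it. This small check assumes the +vision/+labeler layout relative to the current folder (pwd); the variable names are illustrative.

```matlab
% Expected location of the class file relative to the current folder.
classFile = fullfile(pwd, '+vision', '+labeler', 'YOLOv4AutomationAlgorithm.m');

% exist(...,'file') returns 2 when the file is found.
isInstalled = exist(classFile, 'file') == 2;
if isInstalled
    fprintf('Found %s\n', classFile);
else
    fprintf('Missing %s; create the folders and copy the class file first.\n', classFile);
end
```

If the file is reported missing, rerun the mkdir and copyfile commands from the folder that contains YOLOv4AutomationAlgorithm.m.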

Open the Image Labeler app with predefined ground truth data. The helper function, generateGroundTruth, creates a groundTruth object holding a set of sample images and label definitions.

gTruth = generateGroundTruth;
imageLabeler(gTruth)

Click Algorithm > YOLOv4 Object Detection Automation. If you do not see this option, ensure that the current working folder has a folder called +vision/+labeler with a file named YOLOv4AutomationAlgorithm.m in it. Click Automate. A new panel opens, displaying directions for using the algorithm.

Click Run. The automation algorithm executes on each image and labels the objects it detects. After the run completes, use the slider or arrow keys to scroll through all the images and verify the results of the automation algorithm.

Automated object labeling of the images is now complete. You can now proceed with labeling other objects of interest. When you are satisfied with the labels, click Accept to save and export the results of this labeling run.

Conclusion

This example demonstrated how to use a pretrained YOLO v4 object detector to accelerate object labeling in the Image Labeler app by using the AutomationAlgorithm interface. You can replace the model with any other detector by modifying the automation algorithm class accordingly.

References

Bochkovskiy, Alexey, Chien-Yao Wang, and Hong-Yuan Mark Liao. "YOLOv4: Optimal Speed and Accuracy of Object Detection." arXiv:2004.10934, 2020. https://arxiv.org/abs/2004.10934.

Lin, Tsung-Yi, et al. "Microsoft COCO: Common Objects in Context." arXiv:1405.0312, 2014. https://arxiv.org/abs/1405.0312.