setActor

Set actor of reinforcement learning agent

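Description

setActor(agent,actor) updates the reinforcement learning agent, agent, to use the specified actor object, actor.

agent = setActor(agent,actor) also returns the updated agent as an output argument.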

Examples

Modify Actor Parameter Values

Assume that you have an existing trained reinforcement learning agent. For this example, load the trained agent from the example Compare DDPG Agent to LQR Controller.

load("DoubleIntegDDPG.mat","agent")

Obtain the actor function approximator from the agent.

actor = getActor(agent);

Obtain the learnable parameters from the actor.

params = getLearnableParameters(actor)

params=2×1 cell array

{[-15.5717 -7.1444]}

{[ 0]}

Modify the parameter values. For this example, simply multiply all of the parameters by 2.

modifiedParams = cellfun(@(x) x*2,params,"UniformOutput",false);

Set the parameter values of the actor to the new modified values.

actor = setLearnableParameters(actor,modifiedParams);

Set the actor in the agent to the new modified actor.

setActor(agent,actor);

Display the new parameter values.

getLearnableParameters(getActor(agent))

ans=2×1 cell array

{[-31.1433 -14.2887]}

{[ 0]}

Modify Deep Neural Networks in Reinforcement Learning Agent

Create an environment with a continuous action space and obtain its observation and action specifications. For this example, load the environment used in the example Compare DDPG Agent to LQR Controller.

Load the predefined environment.

env = rlPredefinedEnv("DoubleIntegrator-Continuous");

Obtain the observation and action specifications.

obsInfo = getObservationInfo(env);
actInfo = getActionInfo(env);

Create a PPO agent from the environment observation and action specifications. This agent uses default deep neural networks for its actor and critic.

agent = rlPPOAgent(obsInfo,actInfo);

To modify the deep neural networks within a reinforcement learning agent, you must first extract the actor and critic function approximators.

actor = getActor(agent);
critic = getCritic(agent);

Extract the deep neural networks from both the actor and critic function approximators.

actorNet = getModel(actor);
criticNet = getModel(critic);

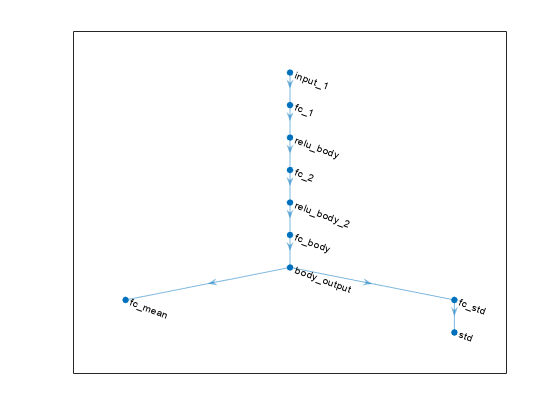

Plot the actor network.

plot(actorNet)

To validate a network, use analyzeNetwork. For example, validate the critic network.

analyzeNetwork(criticNet)

You can modify the actor and critic networks and save them back to the agent. To modify the networks, you can use the Deep Network Designer app. To open the app for each network, use the following commands.

deepNetworkDesigner(criticNet)
deepNetworkDesigner(actorNet)

In Deep Network Designer, modify the networks. For example, you can add layers to your network. When you modify the networks, do not change the input and output layers of the networks returned by getModel. For more information on building networks, see Build Networks with Deep Network Designer.

To validate the modified network in Deep Network Designer, click Analyze in the Analysis section. To export the modified network structures to the MATLAB® workspace, generate code for creating the new networks and run this code from the command line. Do not use the export option in Deep Network Designer. For an example that shows how to generate and run code, see Create DQN Agent Using Deep Network Designer and Train Using Image Observations.

For this example, the code for creating the modified actor and critic networks is in the createModifiedNetworks helper script.

createModifiedNetworks

The script creates the modifiedActorNet and modifiedCriticNet networks, each of which includes an additional fullyConnectedLayer and reluLayer in its main common path. Plot the modified actor network.

plot(modifiedActorNet)

After exporting the networks, insert the networks into the actor and critic function approximators.

actor = setModel(actor,modifiedActorNet);
critic = setModel(critic,modifiedCriticNet);

Finally, insert the modified actor and critic function approximators into the agent.

agent = setActor(agent,actor);
agent = setCritic(agent,critic);

Input Arguments

agent — Reinforcement learning agent

rlPGAgent | rlDDPGAgent | rlTD3Agent | rlACAgent | rlSACAgent | rlPPOAgent | rlTRPOAgent

Reinforcement learning agent that contains an actor, specified as one of the following:

- rlPGAgent object
- rlDDPGAgent object
- rlTD3Agent object
- rlACAgent object
- rlSACAgent object
- rlPPOAgent object
- rlTRPOAgent object

Note

agent is a handle object. Therefore, it is updated by setActor whether or not agent is returned as an output argument. For more information about handle objects, see Handle Object Behavior.
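Because agent is a handle object, every variable that refers to the same agent sees the new actor after setActor runs. The following minimal sketch assumes that agent (the DDPG agent) and actor (the modified actor) from the first example are in the workspace; agentAlias, p1, and p2 are hypothetical names used only for illustration.

```matlab
% Assumes agent (a DDPG agent) and actor (a compatible actor with modified
% parameters) already exist in the workspace, as in the first example.

% Assigning a handle object copies the reference, not the agent itself.
agentAlias = agent;   % hypothetical name, used only for illustration

% Update the actor without assigning the output argument.
setActor(agent,actor);

% Both variables refer to the same agent, so both report the same parameters.
p1 = getLearnableParameters(getActor(agent));
p2 = getLearnableParameters(getActor(agentAlias));
disp(isequal(p1,p2))
```

Assigning the output, as in agent = setActor(agent,actor), is equivalent for a handle agent and makes the update explicit in your code.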

Note

If agent is an rlMBPOAgent object, set the actor of its base agent using setActor(agent.BaseAgent,actor).

actor — Actor

rlContinuousDeterministicActor | rlDiscreteCategoricalActor | rlContinuousGaussianActor

Actor object, specified as one of the following:

- rlContinuousDeterministicActor object: specify when agent is an rlDDPGAgent or rlTD3Agent object.
- rlDiscreteCategoricalActor object: specify when agent is an rlACAgent, rlPGAgent, rlPPOAgent, rlTRPOAgent, or rlSACAgent object for an environment with a discrete action space.
- rlContinuousGaussianActor object: specify when agent is an rlACAgent, rlPGAgent, rlPPOAgent, rlTRPOAgent, or rlSACAgent object for an environment with a continuous action space.

The inputs and outputs of the approximation model in the actor (typically, a neural network) must match the observation and action specifications of the original agent.
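Before calling setActor, you can compare the specifications stored in the actor with those of the agent. The following is a minimal sketch, assuming that agent and actor are in the workspace, that each has a single observation channel and a single action channel, and that your release supports calling getObservationInfo and getActionInfo on an agent. It compares only the Dimension property of each specification, which is a necessary but not a sufficient check; obsMatch and actMatch are hypothetical names.

```matlab
% Assumes agent and actor exist in the workspace and that each has a single
% observation specification and a single action specification.
agentObsInfo = getObservationInfo(agent);
agentActInfo = getActionInfo(agent);

% Actor objects store the specifications they were created with.
actorObsInfo = actor.ObservationInfo;
actorActInfo = actor.ActionInfo;

% Compare only the dimensions of the observation and action channels.
obsMatch = isequal(actorObsInfo.Dimension,agentObsInfo.Dimension);
actMatch = isequal(actorActInfo.Dimension,agentActInfo.Dimension);

if obsMatch && actMatch
    setActor(agent,actor);
else
    error("Actor specifications do not match the agent specifications.")
end
```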

To create an actor, use one of the following methods:

- Create the actor using the corresponding function approximator object.
- Obtain the existing actor from an agent using getActor.
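As an illustration of the first method, the following sketch creates a new deterministic actor for the DDPG agent from the first example. It assumes that DoubleIntegDDPG.mat is on the path and that your release accepts a dlnetwork object in rlContinuousDeterministicActor. The single fully connected layer is an assumption chosen for illustration; it is consistent with the two learnable parameter arrays shown in the first example, but it is not necessarily the network stored in the MAT-file. layers, net, and newActor are hypothetical names.

```matlab
% Load the trained DDPG agent used in the first example.
load("DoubleIntegDDPG.mat","agent")

% Use the agent's specifications so that the new actor matches the agent.
obsInfo = getObservationInfo(agent);
actInfo = getActionInfo(agent);

% Minimal network (an assumption): observation input followed by one fully
% connected layer with one output per action element.
layers = [
    featureInputLayer(prod(obsInfo.Dimension))
    fullyConnectedLayer(prod(actInfo.Dimension))
    ];
net = dlnetwork(layers);

% Create the actor from the network and the specifications.
newActor = rlContinuousDeterministicActor(net,obsInfo,actInfo);

% Install the new actor in the agent.
setActor(agent,newActor);
```

The new actor starts from freshly initialized weights, so you typically train the agent again after replacing its actor this way.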

Output Arguments

agent — Updated reinforcement learning agent

rlPGAgent | rlDDPGAgent | rlTD3Agent | rlACAgent | rlSACAgent | rlPPOAgent | rlTRPOAgent

Updated agent, returned as an agent object. Note that agent is a handle object. Therefore, its actor is updated by setActor whether or not agent is returned as an output argument. For more information about handle objects, see Handle Object Behavior.

Version History

Introduced in R2019a

