Gas Mileage Prediction

This example shows how to predict fuel consumption for automobiles using data from previously recorded observations.

Automobile miles per gallon (MPG) prediction is a typical nonlinear regression problem, in which several automobile features are used to predict fuel consumption in MPG. The training data for this example is available in the University of California, Irvine Machine Learning Repository and contains data collected from automobiles of various makes and models.

In this data set, the six input variables are number of cylinders, displacement, horsepower, weight, acceleration, and model year. The output variable to predict is the fuel consumption in MPG. In this example, you do not use the make and model information from the data set.

Partition Data

Obtain the data set from the original data file autoGas.dat using the loadGasData function.

[data,input_name] = loadGasData;

Partition the data set into a training set (odd-indexed samples) and a validation set (even-indexed samples).

trn_data = data(1:2:end,:);
val_data = data(2:2:end,:);
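As a minimal sketch of this interleaved split, the following code uses a small made-up matrix (not the gas mileage data) to show which rows each index expression selects. The names toy_data, toy_trn, and toy_val are illustrative only.

```matlab
% Toy stand-in for the data matrix: 7 samples, 3 columns (hypothetical values).
toy_data = [(1:7)' (11:17)' (21:27)'];

% Odd-indexed rows go to training, even-indexed rows to validation.
toy_trn = toy_data(1:2:end,:);   % rows 1, 3, 5, 7
toy_val = toy_data(2:2:end,:);   % rows 2, 4, 6

% With an odd number of samples, the training set gets one extra row.
fprintf("training rows: %d, validation rows: %d\n", ...
    size(toy_trn,1), size(toy_val,1));
```

Interleaving keeps both sets spread across the whole file, which helps if the rows happen to be stored in some meaningful order.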

Select Inputs

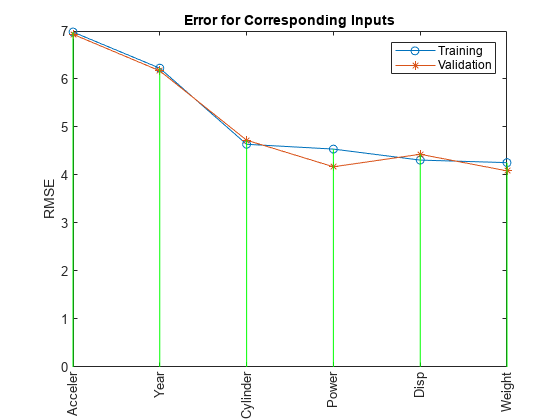

Use the exhaustiveSearch function to perform an exhaustive search within the available inputs to select the set of inputs that most influence the fuel consumption. The first argument of exhaustiveSearch specifies the number of inputs per combination. For each combination, exhaustiveSearch builds an ANFIS model, trains it for one epoch, and reports the performance achieved. First, use exhaustiveSearch with one input per combination to determine which variable by itself best predicts the output.

exhaustiveSearch(1,trn_data,val_data,input_name);

Train 6 ANFIS models, each with 1 inputs selected from 6 candidates...
Model 1: Cylinder, Error: trn = 4.6400, val = 4.7255
Model 2: Disp, Error: trn = 4.3106, val = 4.4316
Model 3: Power, Error: trn = 4.5399, val = 4.1713
Model 4: Weight, Error: trn = 4.2577, val = 4.0863
Model 5: Acceler, Error: trn = 6.9789, val = 6.9317
Model 6: Year, Error: trn = 6.2255, val = 6.1693

The graph indicates that the Weight variable has the lowest root mean squared error (RMSE) for both the training and validation data. In other words, it can best predict MPG.

For Weight, the training and validation errors are comparable, indicating little overfitting. Therefore, you can likely use more than one input variable in your ANFIS model.

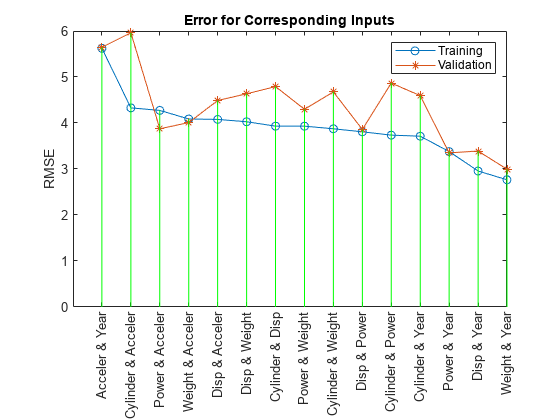

Although models using Weight and Disp individually have the lowest errors, a combination of these two variables does not necessarily produce the minimal training error. To identify which combination of two input variables results in the lowest error, use exhaustiveSearch to search every combination.

input_index = exhaustiveSearch(2,trn_data,val_data,input_name);

Train 15 ANFIS models, each with 2 inputs selected from 6 candidates...
ANFIS model 1: Cylinder Disp, Error: trn = 3.9320, val = 4.7920
ANFIS model 2: Cylinder Power, Error: trn = 3.7364, val = 4.8683
ANFIS model 3: Cylinder Weight, Error: trn = 3.8741, val = 4.6763
ANFIS model 4: Cylinder Acceler, Error: trn = 4.3287, val = 5.9625
ANFIS model 5: Cylinder Year, Error: trn = 3.7129, val = 4.5946
ANFIS model 6: Disp Power, Error: trn = 3.8087, val = 3.8594
ANFIS model 7: Disp Weight, Error: trn = 4.0271, val = 4.6351
ANFIS model 8: Disp Acceler, Error: trn = 4.0782, val = 4.4890
ANFIS model 9: Disp Year, Error: trn = 2.9565, val = 3.3905
ANFIS model 10: Power Weight, Error: trn = 3.9310, val = 4.2973
ANFIS model 11: Power Acceler, Error: trn = 4.2740, val = 3.8738
ANFIS model 12: Power Year, Error: trn = 3.3796, val = 3.3505
ANFIS model 13: Weight Acceler, Error: trn = 4.0875, val = 4.0095
ANFIS model 14: Weight Year, Error: trn = 2.7657, val = 2.9954
ANFIS model 15: Acceler Year, Error: trn = 5.6242, val = 5.6481

The results from exhaustiveSearch indicate that Weight and Year form the optimal combination of two input variables. However, the difference between the training and validation errors is larger than the difference for either variable alone, indicating that including more variables increases overfitting. Run exhaustiveSearch with combinations of three input variables to see whether these differences increase further with greater model complexity.

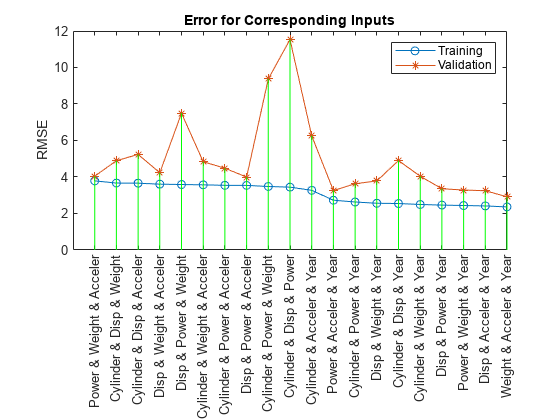

exhaustiveSearch(3,trn_data,val_data,input_name);

Train 20 ANFIS models, each with 3 inputs selected from 6 candidates...
ANFIS model 1: Cylinder Disp Power, Error: trn = 3.4446, val = 11.5329
ANFIS model 2: Cylinder Disp Weight, Error: trn = 3.6686, val = 4.8920
ANFIS model 3: Cylinder Disp Acceler, Error: trn = 3.6610, val = 5.2384
ANFIS model 4: Cylinder Disp Year, Error: trn = 2.5463, val = 4.9001
ANFIS model 5: Cylinder Power Weight, Error: trn = 3.4797, val = 9.3757
ANFIS model 6: Cylinder Power Acceler, Error: trn = 3.5432, val = 4.4804
ANFIS model 7: Cylinder Power Year, Error: trn = 2.6300, val = 3.6300
ANFIS model 8: Cylinder Weight Acceler, Error: trn = 3.5708, val = 4.8378
ANFIS model 9: Cylinder Weight Year, Error: trn = 2.4951, val = 4.0434
ANFIS model 10: Cylinder Acceler Year, Error: trn = 3.2698, val = 6.2616
ANFIS model 11: Disp Power Weight, Error: trn = 3.5879, val = 7.4977
ANFIS model 12: Disp Power Acceler, Error: trn = 3.5395, val = 3.9953
ANFIS model 13: Disp Power Year, Error: trn = 2.4607, val = 3.3563
ANFIS model 14: Disp Weight Acceler, Error: trn = 3.6075, val = 4.2308
ANFIS model 15: Disp Weight Year, Error: trn = 2.5617, val = 3.7857
ANFIS model 16: Disp Acceler Year, Error: trn = 2.4149, val = 3.2480
ANFIS model 17: Power Weight Acceler, Error: trn = 3.7884, val = 4.0474
ANFIS model 18: Power Weight Year, Error: trn = 2.4371, val = 3.2850
ANFIS model 19: Power Acceler Year, Error: trn = 2.7276, val = 3.2580
ANFIS model 20: Weight Acceler Year, Error: trn = 2.3603, val = 2.9152
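The model counts in the three search outputs (6, 15, and 20) are the number of ways to choose 1, 2, or 3 inputs from 6 candidates. The sketch below reproduces those counts with the base MATLAB function nchoosek and lists the candidate pairs. It does not call exhaustiveSearch or train any model, and the abbreviated names are copied from the output above.

```matlab
% Candidate input names, as abbreviated in the search output above.
names = {'Cylinder','Disp','Power','Weight','Acceler','Year'};

% Number of models trained for 1, 2, and 3 inputs per combination.
for k = 1:3
    fprintf("%d input(s): %d combinations\n", k, nchoosek(numel(names),k));
end

% Enumerate every pair of input indices, one pair per row.
pairs = nchoosek(1:numel(names),2);
for i = 1:size(pairs,1)
    fprintf("Pair %2d: %s %s\n", i, names{pairs(i,1)}, names{pairs(i,2)});
end
```

The row order of nchoosek(1:6,2) matches the model numbering in the two-input output (for example, row 14 is Weight and Year). Whether exhaustiveSearch enumerates combinations this way internally is an assumption.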

This plot shows the result of selecting three inputs. Here, the combination of Weight, Year, and Acceler produces the lowest training error. However, the training and validation errors are not substantially lower than those of the best two-input model, which indicates that the newly added variable Acceler does not improve the prediction much. Because simpler models usually generalize better, use the two-input ANFIS model for further exploration.
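To make this comparison concrete, the sketch below tabulates the errors reported above for the best one-, two-, and three-input models and computes the gap between validation and training error. The numbers are copied from the search outputs, and the variable names are illustrative.

```matlab
% Errors copied from the exhaustiveSearch outputs above.
labels  = {'Weight','Weight Year','Weight Acceler Year'};
trn_err = [4.2577 2.7657 2.3603];
val_err = [4.0863 2.9954 2.9152];

% Gap between validation and training error for each model.
gap = val_err - trn_err;
for i = 1:numel(labels)
    fprintf("%-20s trn = %.4f, val = %.4f, gap = %+.4f\n", ...
        labels{i}, trn_err(i), val_err(i), gap(i));
end

% Reduction in validation error from adding the third input.
val_gain = val_err(2) - val_err(3);
fprintf("Validation improvement from third input: %.4f\n", val_gain);
```

Adding Acceler lowers the validation error by about 0.08 while the gap more than doubles, which is consistent with keeping the two-input model.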

Extract the selected input variables from the original training and validation data sets.

new_trn_data = trn_data(:,[input_index, size(trn_data,2)]);
new_val_data = val_data(:,[input_index, size(val_data,2)]);
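A small illustration of this indexing follows. It assumes that input_index is [4 6], the column positions of Weight and Year in the six-input layout listed earlier, and uses a two-row matrix of illustrative values in place of trn_data.

```matlab
% Illustrative rows: six input columns followed by the MPG output column.
toy = [8 307 130 3504 12.0 70 18;
       4 113  95 2372 15.0 70 24];

idx = [4 6];                       % assumed positions of Weight and Year
out_col = size(toy,2);             % output (MPG) is the last column
toy_sel = toy(:,[idx, out_col]);   % keep Weight, Year, MPG

disp(toy_sel)
```

Appending size(toy,2) to the index vector keeps the output column last, which is the layout the training code expects.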

Train ANFIS Model

The function exhaustiveSearch trains each ANFIS for only a single epoch to quickly find the right inputs. Now that the inputs are fixed, you can train the ANFIS model for more epochs.

Use the genfis function to generate an initial FIS from the training data, then use anfis to fine-tune it.

in_fis = genfis(new_trn_data(:,1:end-1),new_trn_data(:,end));
anfisOpt = anfisOptions( ...
    InitialFIS=in_fis, ...
    EpochNumber=100, ...
    StepSizeDecreaseRate=0.5, ...
    StepSizeIncreaseRate=1.5, ...
    ValidationData=new_val_data, ...
    DisplayANFISInformation=0, ...
    DisplayErrorValues=0, ...
    DisplayStepSize=0, ...
    DisplayFinalResults=0);
[trn_out_fis,trn_error,step_size,val_out_fis,val_error] = ...
    anfis(new_trn_data,anfisOpt);

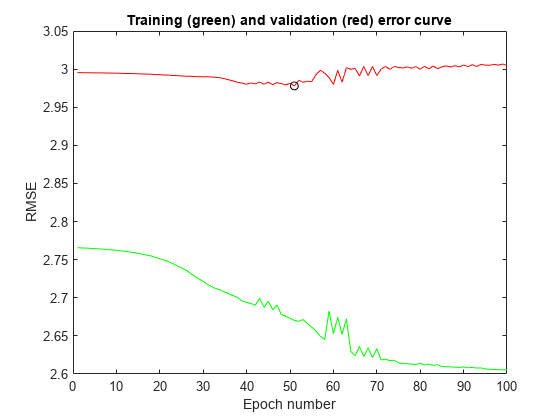

anfis returns the training and validation errors. Plot the training and validation errors over the course of the training process.

[a,b] = min(val_error);
plot(1:100,trn_error,"g-",1:100,val_error,"r-",b,a,"ko")
title("Training (green) and validation (red) error curve")
xlabel("Epoch number")
ylabel("RMSE")

This plot shows the error curves for 100 epochs of ANFIS training. The green curve gives the training errors and the red curve gives the validation errors. The minimum validation error occurs at about epoch 45, which is indicated by a circle. Notice that the validation error curve rises after about epoch 50, indicating that further training overfits the data and produces increasingly worse generalization.
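The circle position comes from the two-output form of min, which returns both the smallest value and its index. The sketch below applies it to a synthetic validation curve (made-up numbers, not actual anfis output) that falls and then rises.

```matlab
% Synthetic validation error: decreases, bottoms out, then rises (made up).
epochs = 1:100;
toy_val_error = 3 + 0.0004*(epochs - 45).^2;

% min returns the smallest value and the index (epoch) where it occurs.
[best_err,best_epoch] = min(toy_val_error);
fprintf("Minimum validation error %.3f at epoch %d\n", best_err, best_epoch);

% Count the epochs after the minimum whose error exceeds the minimum.
n_worse = sum(toy_val_error(best_epoch+1:end) > best_err);
fprintf("%d later epochs have higher validation error\n", n_worse);
```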

Analyze ANFIS Model

First, compare the performance of the ANFIS model with that of a linear regression model using their respective root mean squared error (RMSE) values on the validation data. The linear regression model uses all six input variables, whereas the ANFIS model uses only two.

% Perform linear regression
N = size(trn_data,1);
A = [trn_data(:,1:6) ones(N,1)];
B = trn_data(:,7);
coef = A\B; % Solve for regression parameters from training data
Nc = size(val_data,1);
A_ck = [val_data(:,1:6) ones(Nc,1)];
B_ck = val_data(:,7);
lr_rmse = norm(A_ck*coef-B_ck)/sqrt(Nc);
fprintf("\nRMSE against validation data\nANFIS : %1.3f\tLinear Regression : %1.3f\n",...
    a,lr_rmse);

RMSE against validation data
ANFIS : 2.978    Linear Regression : 3.444

The ANFIS model has a lower validation RMSE and therefore outperforms the linear regression model.
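The expression norm(e)/sqrt(N) used for lr_rmse is the root mean squared error written with a vector norm. As a minimal sketch with synthetic data (all values below are made up), the following code fits a least-squares line with the backslash operator and checks that both forms of RMSE agree.

```matlab
% Synthetic data: y depends linearly on x plus a fixed perturbation.
x = (1:10)';
y = 2*x + 1 + 0.1*[1 -1 1 -1 1 -1 1 -1 1 -1]';

% Least-squares fit with an intercept column, as in the regression above.
A = [x ones(numel(x),1)];
coef = A\y;

% Two equivalent ways to compute the RMSE of the residuals.
e = A*coef - y;
rmse_norm = norm(e)/sqrt(numel(e));
rmse_mean = sqrt(mean(e.^2));
fprintf("RMSE (norm form) = %.4f, RMSE (mean form) = %.4f\n", ...
    rmse_norm, rmse_mean);
```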

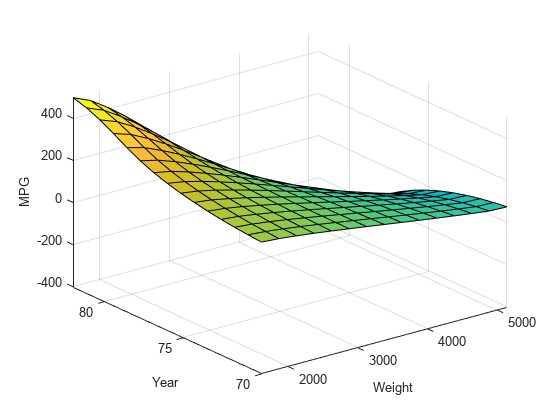

The variable val_out_fis is a snapshot of the ANFIS model at the minimal validation error during the training process. Plot an output surface of the model.

val_out_fis.Inputs(1).Name = "Weight";
val_out_fis.Inputs(2).Name = "Year";
val_out_fis.Outputs(1).Name = "MPG";
gensurf(val_out_fis)

The output surface is nonlinear and monotonic and illustrates how the ANFIS model responds to varying values of Weight and Year.

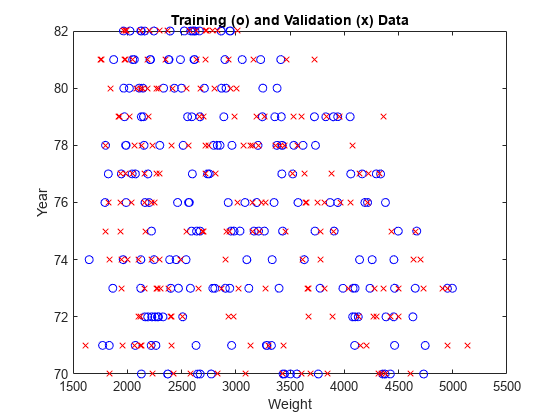

The surface indicates that, for vehicles manufactured in or after 1978, the model predicts higher MPG for heavier automobiles. Plot the data distribution to see any potential gaps in the input data that might cause this counterintuitive result.

plot(new_trn_data(:,1),new_trn_data(:,2),"bo", ...
    new_val_data(:,1),new_val_data(:,2),"rx")
xlabel("Weight")
ylabel("Year")
title("Training (o) and Validation (x) Data")

The lack of training data for heavier vehicles manufactured in later years causes the anomalous results. Because data distribution strongly affects prediction accuracy, take the data distribution into account when you interpret the ANFIS model.
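One way to quantify such a gap is to count how many training samples fall in the region of interest. The sketch below uses a few made-up (Weight, Year) pairs and hypothetical thresholds (4000 for weight, 78 for a two-digit model year). Neither the data, the thresholds, nor the two-digit year encoding comes from the example.

```matlab
% Made-up (Weight, Year) samples standing in for new_trn_data(:,1:2).
toy_inputs = [4300 70;
              4100 72;
              3900 73;
              2600 76;
              2200 78;
              2500 79;
              3400 79;
              2100 81;
              2000 82];

% Hypothetical thresholds for "heavy" and "late" vehicles.
is_heavy = toy_inputs(:,1) >= 4000;
is_late  = toy_inputs(:,2) >= 78;

% Count samples in each region of the input space.
n_heavy_late  = sum(is_heavy & is_late);
n_heavy_early = sum(is_heavy & ~is_late);
fprintf("heavy and late: %d, heavy and early: %d\n", ...
    n_heavy_late, n_heavy_early);
```

A count of zero in a region means that the model output there is extrapolation, so the shape of the surface in that region should be interpreted with caution.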