Deep Learning–Based Motion Capture System Helps Study Cheetahs in the Wild

Understanding Cheetahs’ Amazing Maneuverability Through Robotic Research

The wild cheetah mother and her three cubs hunt a springbok inside the massive Kgalagadi Transfrontier Park that straddles South Africa and Botswana. The cats rapidly bring down their prey, their agility and fast acceleration a decisive advantage. From a safe distance, University of Cape Town biomechanics professor and African Robotics Unit (ARU) director, Amir Patel, trains his lab’s motion capture system on the scene.

Patel and his team at the ARU study how cheetahs move to build better, more agile robots. “The cheetah is the pinnacle of maneuverability,” Patel said. “Understanding it compels us to devise new ways of measuring motion and force or performing optimization.”

The focus on the fastest terrestrial animal has resulted in numerous advancements. The researchers developed novel techniques in robotics, multibody modeling, feedback control, trajectory optimization, computer vision, and deep learning.

Cheetahs are exceptionally agile, can accelerate rapidly, and can turn on a dime. (Video credit: University of Cape Town, African Robotics Unit)

While Patel has used MATLAB® and Simulink® for years, a Campus-Wide License now allows him and fellow researchers at the university to take fuller advantage of the platform.

“We choose tools based on the problems we study,” Patel said. “When we got the site-wide license, I thought, okay, now we can use everything.”

The team also discovered that MATLAB and Simulink provide seamless interoperability with other programming languages such as Python® and hardware such as Speedgoat®.

Patel collaborated with Dr. Mackenzie Mathis and Dr. Alexander Mathis and applied their open-source toolbox (DeepLabCut™) for noninvasive animal behavior tracking to the task of tracking cheetahs running in the wild. This research was featured on the cover of Nature Protocols.

Patel then received a Google Research Scholar Award in the machine perception category for his deep learning–based motion capture system, WildPose. MathWorks also offered support for the lab’s research. Patel’s work generated two patents, launched a university spinoff, and sparked enormous interest from roboticists, sports scientists, clinicians, biologists, ecologists, and conservation managers.

An Unexpected Tailwind

In the wild, cheetahs have been clocked sprinting 120 kilometers (75 miles) per hour, making them the fastest animal on land over short distances. The big cats can accelerate rapidly and chase prey with liquid ease, which is unsurprising given their long legs and necks, small heads, and claws at the ready.

About 18% of the world’s cheetahs live in South Africa. As an engineer, Amir Patel became fascinated with the local animal early on. When starting his Ph.D. at the University of Cape Town, he planned to use cheetah data to develop a full robot.

“There was no information on how it uses its tail or flexible spine or models to explain how cheetahs maneuver,” he remembered.

Patel zeroed in on the long and furry tail. Back then, tail data was based on other animals. Scientists were unsure about its weight and inertia. The prevailing thinking among wildlife experts was that the tails were heavy and had high inertia to act like counterweights.

Then, one of his Ph.D. collaborators, Professor Emily Lane at the National Zoological Gardens in Pretoria, asked him to observe an autopsy of a cheetah that had died of natural causes. Patel took photographs and measurements during the procedure.

“I expected the tail to be large,” he said. “But it was skinny and only about 2% of the cheetah’s body mass.”

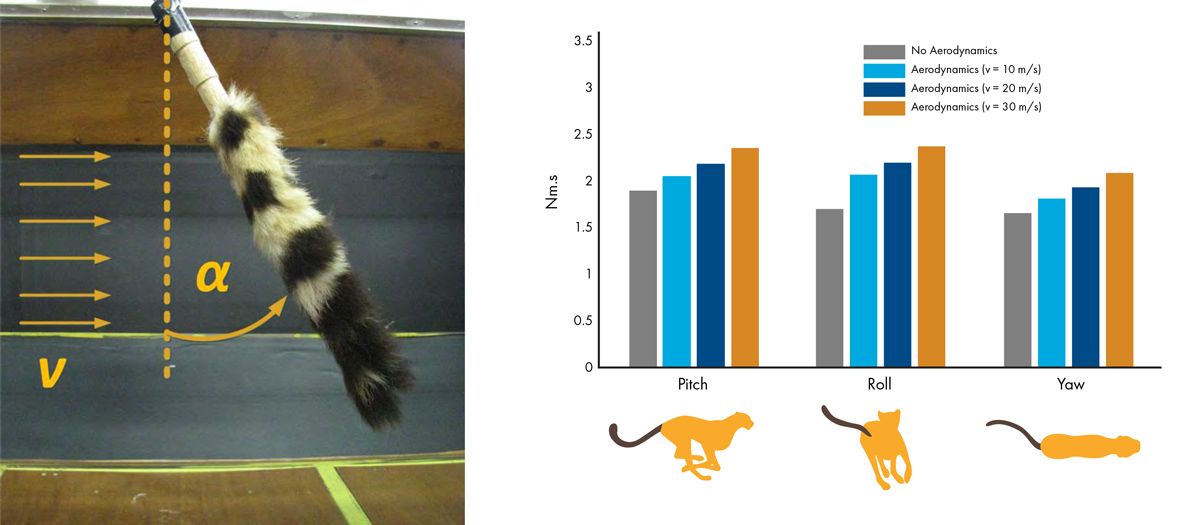

To better understand the fur’s aerodynamic effects, Patel placed tails from deceased cheetahs provided by Lane in a wind tunnel. He used MATLAB and Simulink for the modeling.

“Imagine being in a car and putting your arm out the window. You feel that force,” he said. “We determined that the cheetah uses this aerodynamic drag to stabilize its body during high-speed turns.”
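The arm-out-the-window intuition matches the standard drag equation, F = ½ρv²C_dA, in which force grows with the square of speed. The short Python sketch below evaluates that equation at a few speeds. The drag coefficient and tail area are placeholder assumptions chosen for illustration, not measurements from the ARU wind tunnel tests, and the function names are hypothetical.

```python
def kmh_to_ms(speed_kmh):
    """Convert kilometers per hour to meters per second."""
    return speed_kmh / 3.6


def drag_force(speed_ms, drag_coefficient, area_m2, air_density=1.225):
    """Drag in newtons from the standard equation F = 0.5 * rho * v^2 * Cd * A."""
    return 0.5 * air_density * speed_ms ** 2 * drag_coefficient * area_m2


# Placeholder values chosen only for illustration (assumptions, not ARU data).
ASSUMED_CD = 1.0      # dimensionless drag coefficient
ASSUMED_AREA = 0.03   # side-on tail area in square meters

for speed_kmh in (30, 60, 120):
    force = drag_force(kmh_to_ms(speed_kmh), ASSUMED_CD, ASSUMED_AREA)
    print(f"{speed_kmh:>3} km/h -> {force:.2f} N")
```

Because speed enters as a square, doubling the speed quadruples the drag. That is one way to see how a tail that is light in mass could still exert a useful force during a fast turn.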

Roboticists often draw inspiration from nature, but Patel found himself doing robotics-inspired biology, using his robotics background to shed new light on the golden-eyed animal.

Bioinspiration Leads to Pistons

At the ARU, Patel, one of four principal investigators, studies the cheetah with his students from different angles: creating mathematical models of mechanical systems, sensing and measuring what the animal does, and building robots that serve as mechanical models for complex movements. Cheetahs’ changing speed makes their locomotion trickier to study than consistent animal movements.

He and other ARU engineers built a bipedal robot named “Baleka,” which comes from a Xhosa word meaning “to sprint.” Their design leveraged trajectory optimization techniques, and the resulting robot achieved more vertical agility than a human.

At the 2023 International Conference on Robotics and Automation (ICRA), Patel and his student Christopher Mailer presented the design and control of a novel quadrupedal robot prototype, Kemba. Named for the Zulu “inkemba,” or sword, the hybrid pneumatic-electric prototype platform can jump to a height of around 2.2 times its leg length.

“When we watched videos, we saw that cheetahs are not trying to finely control their feet, they’re just trying to push off as hard as they can,” Patel said. “A pneumatic piston applies force in a similar manner.”

Jumping test of the bipedal robot Baleka. (Video credit: University of Cape Town, African Robotics Unit)

Although roboticists typically avoid pneumatic pistons because they’re difficult to control precisely, the ARU team embraced that effect. They utilized Simscape Multibody™ and Simulink to model pneumatic pistons on Kemba’s knees, simulate the four-legged robot’s expected movement, and design controllers for it.

Patel reflected that he and his students share backgrounds in feedback control, so thinking about block diagrams and signals moving from one system to the next feels natural. The team designed Kemba in mechanical CAD software for multibody modeling and then ported the designs into Simscape™.

Model-Based Design allows Patel and his students to look at acceleration signals in real time, which he called extremely useful, especially for debugging. This block diagram environment slashed development time for the team, enabling rapid testing and experimentation, system optimization, and code automation.

“Simulink Real-Time™ is game-changing. In the old days, I coded manually, and something always goes wrong with that,” he said. “Now we have a Speedgoat real-time control system that easily integrates with Simulink Real-Time. We just click a button, and it runs on the robot. That made development nice and fast.”

At ICRA, the scientists showed that Kemba has the potential for acceleration and bounding. The rigid-spine robot can jump as high as 1 meter (3.28 feet).

Contact order matters for cheetahs and robots alike. Even with a robotic gripper or hand, ideal contact order could make all the difference in moving or manipulating an object. While on a sabbatical at Carnegie Mellon in 2018, Patel floated an idea for a more accurate contact optimization algorithm.

Kemba accelerating from rest into a bounding gait. (Video credit: University of Cape Town, African Robotics Unit)

A few scientists he spoke with responded, “No, you can’t do that. A few groups tried that two years ago and it doesn’t work.” He went ahead anyway. The resulting paper, “Contact-Implicit Trajectory Optimization Using Orthogonal Collocation,” in IEEE Robotics and Automation Letters, became one of his most-cited publications and was the 2020 runner-up for the best paper award for the IEEE Technical Committee on Model-Based Optimization for Robotics.

“There is an advantage to being geographically isolated,” Patel mused. “I have a bit of an underdog mentality.”

Long-Range Motion Capture System

Cheetahs, facing many threats, are teetering on the edge of extinction. Despite their incredible speed, they are timid predators who compete with scavengers to retain prey. Climate change, habitat loss, poachers, and conflict with humans have decimated wild populations.

“There’s a big push for breeding and conservation programs to bring the numbers up,” Patel said. The African Robotics Unit works with three cheetah centers in the area (Cheetah Outreach, Cheetah Conservation & Research Centre, and Ashia), where the cheetahs are accustomed to people.

Existing biomechanics techniques for understanding human movements can’t be applied directly to wildlife. This meant reimagining how to measure the cats’ motion. “I thought we’d just buy some collars and put them on cheetahs,” he admitted. “But when an animal wears a collar, there’s no information about its head, legs, spine, or tail.”

The ARU scientists developed a backward-facing, GPS-based camera system for captive cheetahs that enabled sensor fusion. It worked well … until the big cats stopped agreeing to don it. Another hurdle: Cheetahs at the centers move slower, at 65 kph (40 mph), than ones in the wild, which reach 120 kph (75 mph).

“In the lab, you can put electrodes on a person or an animal and measure when they activate muscles, but you can’t really touch animals in the wild,” Patel said. “Then I had this crazy idea to be able to measure wild animals from far distances—the whole body, the kinematics of the skeleton.”

The ARU began working with national parks to take the lab into the wild, where the researchers seek to collect more representative data from cheetahs going full speed. Achieving granular motion capture without electrodes or wearables poses mighty challenges, though. Sometimes the cats prove elusive in Kgalagadi Transfrontier Park, emerging briefly only to disappear a moment later over the sand dunes.

Markerless (noninvasive) 3D reconstruction of a cheetah running. Multiple views of the cheetah are labeled in 2D and then fused by the full trajectory estimation (FTE) algorithm to produce 3D skeletal motion. (Video credit: University of Cape Town, African Robotics Unit)
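To give a feel for how 2D labels from several cameras can yield a 3D point, the sketch below uses midpoint triangulation: each camera contributes a ray toward the labeled body part, and the estimate is the point halfway between the two rays where they pass closest. This is a much simpler stand-in, not the FTE algorithm, and the camera positions and ray directions are made-up values.

```python
def _dot(a, b):
    """Dot product of two 3D vectors given as sequences."""
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(origin_a, direction_a, origin_b, direction_b):
    """Return the midpoint of the shortest segment joining two viewing rays."""
    w = [p - q for p, q in zip(origin_a, origin_b)]
    a = _dot(direction_a, direction_a)
    b = _dot(direction_a, direction_b)
    c = _dot(direction_b, direction_b)
    d = _dot(direction_a, w)
    e = _dot(direction_b, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; depth cannot be recovered")
    # Ray parameters that minimize the distance between the two rays.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    closest_a = [p + s * v for p, v in zip(origin_a, direction_a)]
    closest_b = [p + t * v for p, v in zip(origin_b, direction_b)]
    return [(x + y) / 2 for x, y in zip(closest_a, closest_b)]


# Hypothetical setup: two cameras 2 m apart, both sighting the same joint.
joint = triangulate_midpoint([-1, 0, 0], [1, 0, 5], [1, 0, 0], [-1, 0, 5])
print(joint)
```

With noisy labels the two rays rarely intersect exactly, which is why the midpoint of their closest approach is used rather than a true intersection. A trajectory-level method can go further by fitting all frames and views together.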

Patel and the ARU team then developed a long-range motion capture system using lidar and telescopic lenses, and by exploiting multisensor fusion, they were able to produce 3D biomechanical data at long distances.

MATLAB toolboxes became essential for project components such as 3D reconstruction, where the team combined lidar and camera information for sensor fusion. Control System Toolbox™ helped illuminate the input-output relationship between the prey’s motion and that of different cheetah body parts or the entire cheetah body.
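One common building block of lidar-camera fusion is projecting a lidar point into the camera image so that depth and pixels can be associated. The sketch below does this with a basic pinhole camera model in plain Python. It is an illustration only, not the toolbox API or the ARU pipeline, and the intrinsics, rotation, and translation are hypothetical values that would normally come from calibration.

```python
def project_to_pixel(point_lidar, rotation, translation, fx, fy, cx, cy):
    """Project a 3D lidar point to pixel coordinates with a pinhole model.

    rotation is a 3x3 nested list and translation a 3-vector that map
    lidar coordinates into the camera frame. Returns None if the point
    lies behind the camera.
    """
    x, y, z = (
        sum(r * p for r, p in zip(row, point_lidar)) + t
        for row, t in zip(rotation, translation)
    )
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


# Hypothetical calibration: aligned sensor frames and a 1280x720 image.
IDENTITY = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
NO_OFFSET = [0.0, 0.0, 0.0]

pixel = project_to_pixel([1.0, 0.5, 50.0], IDENTITY, NO_OFFSET,
                         fx=4000.0, fy=4000.0, cx=640.0, cy=360.0)
print(pixel)
```

The large focal length in pixels is a rough nod to telescopic lenses: the longer the focal length, the more pixels a distant animal spans, and the more sensitive the projection becomes to small calibration errors.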

“We use Computer Vision Toolbox a lot for calibration,” Patel noted. “At the MathWorks Research Summit, everybody was raving about how that camera calibration is the best.” He traveled to Natick, Massachusetts, for the annual summit, where he conversed with MathWorks co-founder Cleve Moler and gave a keynote talk.

The lab has forged a highly collaborative relationship with MathWorks. Feedback from the ARU researchers has already inspired new features for Computer Vision Toolbox™ and Lidar Toolbox™ that are being incorporated into the products.

Beyond cheetahs, the ARU has captured other animals in motion, including giraffes, lions, and springbok. After the team validates their system, they hope to release it to the world.

While on a sabbatical as a visiting professor at the University of Oxford, Patel concentrated on improving animal vital sign measurement in the wilderness. Some diseases can jump from domestic to wild animals and spread so rapidly that ecologists often don’t know until it’s too late, especially in low-resource areas. Smarter, more accessible remote monitoring could provide an early warning system.

These remote systems might benefit human health outcomes, too, allowing clinicians to measure things like muscle activation in patients without having to prep skin and adhere expensive electrodes. Patel sees potential for the system to be used in spinal cord injury rehabilitation and prosthetics fitting.

Sports scientists expressed interest in the ARU’s patented wearable motion capture system. Another patent for the lab’s affordable 3D force plate system covering large areas led to a new university spinoff company currently in development. Next, a group at the lab is working on giving Kemba an active spine.

Patel’s group is also embarking on exploring inverse reinforcement learning and optimal control. “While we can observe the motion, we’re still not sure why the cheetah does what it does,” he said. “Is the cat trying to conserve energy when running, increase maneuverability, or intercept its prey in the shortest time? Inverse reinforcement learning will help us understand what it’s trying to optimize.”

The cats continue to enthrall him.

“During my Ph.D., I changed my mindset to become someone who wants to understand this animal,” he said. “It’s been over 13 years, and I haven’t looked back.”
